The End of Auftragstaktik?

Lieutenant Colonel Rosario M. Simonetti, Italian Army Marine; and Paolo Tripodi, PhD1

https://doi.org/10.21140/mcuj.2020110106

Abstract: The impact of new technologies and the increased speed in the future battlespace may overcentralize command and control functions at the political or strategic level and, as a result, bypass the advisory role played by a qualified staff. Political and/or strategic leaders might find it appealing to pursue preemptive or preventive wars as a strategy to acquire asymmetric advantage over the enemy. This article investigates the roots of this trend, connecting historical perspectives with implications that next-generation technology may have on command and control.

Keywords: command and control, technological innovation, mission command, automation

The impact of new technologies and the increased tempo of the future battlespace may overcentralize command and control functions at the political or strategic level. Political and strategic leaders might pursue preemptive or preventive wars as a strategy to acquire asymmetric advantage over the enemy, not because they must but because they can. As a result, senior leaders may be encouraged to bypass the advisory role played by their qualified staff and undermine the autonomy of lower-level commanders. The advancement of technological systems may bring an end to mission command, or Auftragstaktik. Donald E. Vandergriff defines Auftragstaktik as a cultural philosophy of military professionalism:

The overall commander’s intent is for the member to strive for professionalism, in return, the individual will be given latitude in the accomplishment of their given missions. Strenuous, but proven and defensible standards will be used to identify those few capable of serving in the profession of arms. Once an individual has been accepted into the profession, a special bond forms with their comrades, which enables team work and the solving of complex tasks. This kind of command culture . . . must be integrated into all education and training from the very beginning of basic training.2

This article explores the roots of this trend, connecting historical perspectives with implications that next-generation technology may have on command and control.

Technological innovation plays a critical role in the conduct of war. The adoption of new technologies in warfare has been instrumental in replacing roles traditionally played by humans. During the interwar period, between World War I and World War II, warfare was optimized to cope with greater distances and faster execution through increasingly complex machines. Armed forces general staffs became more sophisticated and complex in order to process a greater amount of information. The battlefield gradually moved away from the commander, while command and control, a critical function of warfare, moved toward automation.

Current military capabilities are the result of an evolutionary trend in which technology and information have constantly played a central role. With the introduction of the network-centric warfare (NCW) concept of operations, or the employment of networked forces at all levels, commanders can now access a network of sensors, decision makers, and soldiers that provides shared awareness, higher tempo, greater lethality, and survivability on an almost global scale.3 The development and adoption of new technologies have allowed political and strategic decision makers to control the battlefield in real time, even at the tactical level. The impact of these technologies and the increased speed of the future battlespace may overcentralize command and control functions at the political or strategic level, with detrimental consequences for the conduct of military operations at the operational and tactical levels. In addition, autonomous weapons and artificial intelligence are the next step toward the automation of warfare, with critical implications for command and control.

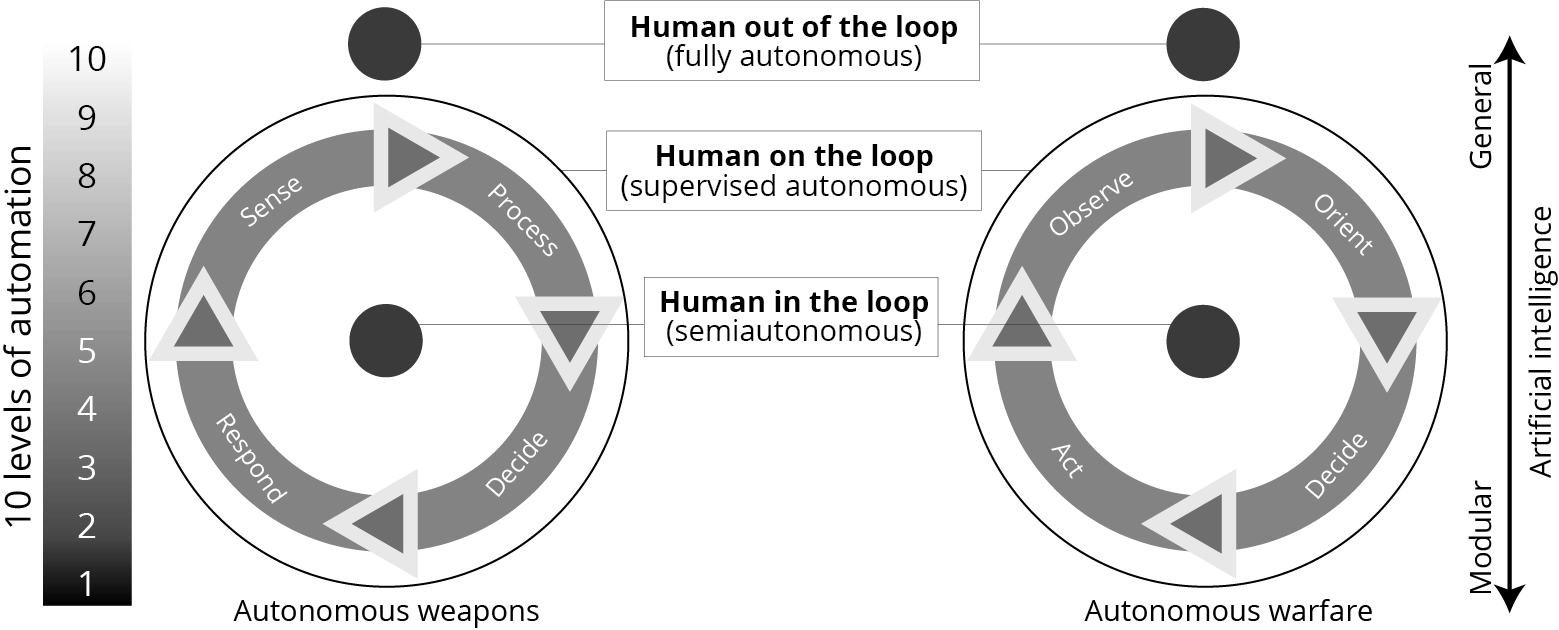

In investigating command and control, the authors followed the approach taken by Command and Control, Marine Corps Doctrine Publication (MCDP) 6, and used U.S. Air Force colonel John R. Boyd’s OODA loop (observation, orientation, decision, and action) because, in the words of Command and Control, it “describes the basic sequence of the command and control process.”4 The OODA loop also plays an important role in effective decision making. In the authors’ view, while the introduction of highly autonomous technologies has had and will continue to have a significant impact on the observation (O), orientation (O), and action (A) phases, the decision (D) phase will continue to require a human “on the loop” to control the conduct of operations.5 New technologies will make the observation, orientation, and action phases much faster, and the decision phase will benefit directly and immediately, yet the ability to apply judgment and professional experience will remain critical to that phase. Warfighting, MCDP 1, rightly stresses that

A military decision is not merely a mathematical computation. Decision-making requires both the situational awareness to recognize the essence of a given problem and the creative ability to devise a practical solution. These abilities are the products of experience, education and intelligence.6

Yet the quest for reliable, accurate, and fast military options may remove humans from many processes and procedures. Indeed, machines might replace humans in many critical phases of the decision-making process. This possibility has raised concerns among military practitioners and scholars. In future warfare, often described as hyperwar, human decision making may be almost entirely absent from the OODA loop because of the near-instantaneous responses of the competing elements.7 This description captures a side effect of the greater speed that increased automation brings to the future operating environment: the inevitable and necessary compression of the OODA loop.

Doctrinal Aspects

The conduct of warfare is intrinsically linked to the translation of the commander’s intent into action. Commanders observe the surrounding situation, process the information, develop a plan, and execute it using the organizational structures and technological systems available. Indeed, how a battle is conducted is the commander’s prerogative. Command and control are so closely interrelated that almost all Western doctrines mention the two functions together. Command and control, therefore, is a critical element of a military leader’s professional development. Military leaders understand that issuing an order comes at the end of a process through which they have gathered and analyzed information, assessed and organized resources, planned, communicated instructions, shared information, coordinated, monitored results, and supervised execution. Finally, they assess the plan’s effectiveness.8

Military doctrine and academic studies have provided several definitions of command and control. Martin van Creveld in Command in War wrote:

I will use the word “command” [instead of Command, Control, and Communication] throughout in much the same way as people commonly writing out the term “management” to describe the manifold activities that go into the running of a business organization.9

Van Creveld assumes that control activities are intrinsic to command. Just as a business manager gives purpose and direction to commercial activities, a military leader must do the same in the business of warfighting. The United Kingdom’s Defence Doctrine for the conduct of operations states, “Complex operations demand a Command and Control philosophy that does not rely upon precise control, but is able to function despite uncertainty, disorder and adversity.”10 This definition acknowledges the fog of war and, therefore, the uncertainty that limits any attempt at a high level of control. Given the human, violent, and unpredictable nature of war, a degree of uncertainty must be accepted and must not limit initiative at the tactical level.

In Command and Control, the Marine Corps describes command and control as a loop: “Command and Control are the means by which a commander recognizes what needs to be done and sees to it that appropriate actions are taken.”11 In the authors’ view, the features of command and control as warfighting functions are leadership, authority, resources, feedback, and mission objectives. For the purpose of this article, the authors adopt the definition of command and control in the U.S. DOD Dictionary of Military and Associated Terms, which they consider the most effective: “the exercise of authority and direction by a properly designated commander over assigned and attached forces in the accomplishment of the mission. Also called C2.”12

Roots of Modern Command and Control

The early development of modern command and control can be traced back to the Napoleonic Wars and their effect on the French and Prussian military forces in the nineteenth century. However, it was during World War II that command and control became an important function of warfare. The 1920s and 1930s were critical, as new technologies, and the impact that such technologies had on military thinking, shaped the evolution of command and control. During this period, armies developed the ability to mobilize large masses of soldiers relatively quickly, and military formations could engage the enemy from greater distances, while operational and, to an even greater extent, strategic command became increasingly removed from the battlefield.

At the foundation of the mobilization of a fighting force, there were at least three critical factors that military professionals had to consider: the effect of speed and distance, the combined arms approach, and the role of information and communication. These three elements of modern warfare had a direct impact on command and control functions.

According to Williamson Murray and Allan R. Millett, innovation in the interwar period was characterized by, but not limited to, the development of amphibious warfare, armored warfare, strategic bombardment, and aircraft carriers.13 The common driver of each of these innovations was the pursuit of the ability to rapidly maneuver large armies to avoid the exhausting and costly trench warfare of World War I. Speeding up and broadening the battlefield directly affected leaders’ and commanders’ ability to remain in control of the tactical level of war. Scholars have identified the German approach as the most visionary and creative. Its strong, forward-thinking military mindset had been developed by insightful leaders such as Major General Gerhard von Scharnhorst, Field Marshal August Neidhardt von Gneisenau, and Major General Carl von Clausewitz, who played a critical role in reforming the Prussian Army. General Helmuth von Moltke the Elder probably developed the most effective approach to dealing with a vast battlefield. In Moltke’s view, commanders should have the freedom to conduct military operations following general directives rather than detailed orders. As a result, Moltke was instrumental in the development of mission command. He strongly encouraged independent thinking and action among subordinates.14

Building on the experience of the nineteenth century through the 1920s and 1930s, the German doctrine Die Truppenführung (troop leading) pushed decision-making authority to the lower levels of command.15 Junior leaders were required to assume responsibility, take the initiative, and exercise judgment. The German officer corps adopted a mission-type command philosophy, Auftragstaktik, and enjoyed a significant amount of autonomy at every level of command.16 This command philosophy was instrumental in dealing with the faster pace of maneuver warfare on a geographically and technically extended battlefield and with the increased physical distance between the tactical and strategic commands. This new reality in the conduct of warfare required delegating control to the commanders and leaders of smaller units.

The interwar period also saw the development of a more effective integration of arms under a unified commander. At the beginning of the 1920s, General Hans von Seeckt, a strong advocate of the combined arms approach, assumed command of the German Army. The German general moved away from the traditional vision of a mass army and replaced it with a more agile, combined formation capable of breaking through enemy defensive lines by maneuvering and massing combat power at decisive points. He tested this innovative approach in frequent and realistic training exercises that improved tactical commanders’ ability to appreciate the full potential and power of combined arms warfare.17 Von Seeckt’s professional intuition and groundbreaking vision led to the development of the German Army field service regulation Führung und Gefecht der Verbundenen Waffen (Combined Arms Leadership and Battle). German military leaders understood that the key to maneuver was the integration of all weapons, even at the lower levels of command.18 The modern vision of combined arms integrates different arms to achieve jointness and enable cross-Service cooperation in all stages of military operations.19 To achieve such an ambitious objective, the German Army placed great emphasis on the education of the officer corps; officers were expected to draw insightful lessons from World War I.20

Two important technological innovations, the radio and the radar, changed the operating environment by integrating all arms and helping to monitor the battlefield even at a distance.21 The German military quickly realized the potential that these new technologies offered to improve command and control systems, in particular between the tactical and operational levels. The radio became critical to disseminating orders, sharing information, and carrying out the coordination necessary to maximize military efforts. The concept remains valid today: the rapid sharing of information at all levels is essential to the effective conduct of maneuver warfare. In addition, sharing relevant, accurate information and facilitating collaborative planning improved situational awareness at all levels; it was the forerunner of the modern common operational picture.22

Contemporary Command and Control

Auftragstaktik has had a lasting impact on modern command philosophy. Mission command, or mission tactics, is the evolution of the Auftragstaktik concept, emphasized in Doctrine for the Armed Forces of the United States, Joint Publication (JP) 1, as the “conduct of military operations through decentralized execution based upon mission-type orders.”23 However, the current technology-centric approach to warfare is changing, or at least complicating, the proper application of mission command. Current warfare is characterized by, although not limited to, standoff precision attack, efficient platforms, and information dominance.

According to Ron Tira, current doctrines look to enhanced standoff and precision weapons to reduce the risk of loss, induce shock on the enemy, and gain an asymmetrical advantage.24 The standoff precision attack concept aims to create enough distance between our center of gravity and the enemy’s reach, gaining valuable additional time, through the execution of multiple synchronized actions (kinetic and nonkinetic) to achieve physical and cognitive effects on the enemy. The logical consequence is that most current military plans for conventional warfare are organized around a linear, phased approach. This approach seeks engagements from great distances and commits ground and maneuver forces only when the enemy has been weakened enough that it no longer poses an unacceptable risk.

Generally, each military capability is built to be efficient in a particular environment or for a specific purpose. This method generates platforms that are efficient across a rather narrow spectrum of warfare. As a consequence of this efficiency, often driven by technology, the decision-making process is shaped by the technology and equipment available rather than by the problem-solving creativity of commanders and their staffs. The risk associated with this approach is the adoption of a mindset of self-imposed limitations on the conduct of warfighting, limitations driven by the available technology and the operating concepts linked to it. Moreover, an enemy could exploit the limits of the current system and keep the confrontation below the threshold of force-on-force combat, undermining the technical advantage of developed countries.25

Another critical feature of current warfighting is information dominance. Closely related to the reduction of uncertainty, the introduction of the internet has increased the capability to gain superior situational awareness in both peacetime and wartime. In August 1962, J. C. R. Licklider of the Massachusetts Institute of Technology (MIT) introduced the Galactic Network concept, intended to enable social interactions through the global networking of interconnected computers. Licklider became the first head of the computer research program at the Defense Advanced Research Projects Agency (DARPA) and continued the project for military purposes, laying the foundations of the internet.26 Today’s distributed connections allow not only voice communication but also high-resolution image transmission to and from every remote corner of the globe. As a result, current technology has greatly reduced the effective communication distance between all levels of command. Global communication gives a commander the capability to directly observe events and interact with tactical agents on the battlefield with minimal delay or distortion. However, warfighters must be aware that “directed telescopes can damage the vital trust a commander seeks to build with subordinates.”27

Despite the adoption of a mission command philosophy, which delegates authority to lower levels of command, command and control systems are technologically built to control the battlefield in detail from distant headquarters. As a result, they might leave less latitude and initiative to commanders and leaders at the tactical level. The comprehensive perspective of modern command and control systems is envisioned in network-centric warfare. The term network-centric warfare broadly describes the combination of strategies, tactics, techniques, procedures, and organizations that a fully or even partially networked force can employ to create a decisive warfighting advantage.28 Under the NCW operating concept, U.S. forces in particular must pursue “the shift in focus from the platform to the network; the shift from viewing actors as independent to viewing them as part of a continuously adapting ecosystem; and the importance of making strategic choices to adapt or even survive in such changing ecosystems.”29 Current technologies have taken the combined arms approach to a higher level. With global communication and advances in high-precision and standoff weapons systems, all connected within the information domain, contemporary commanders can synchronize operations in different domains, using several weapon systems in an increasingly fast decision-making loop.

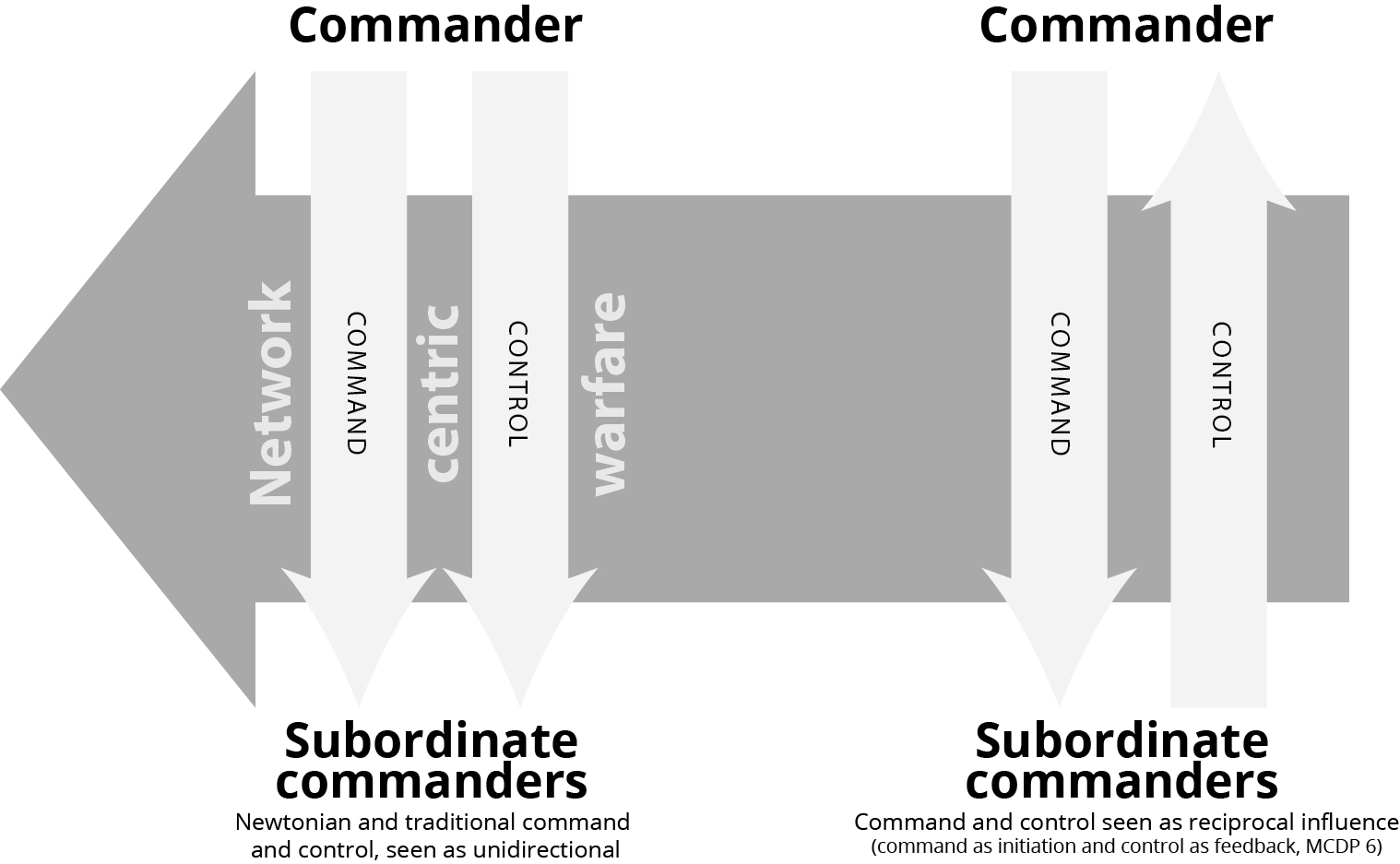

Yet a commander’s potential ability to communicate with almost all subordinate units may change the commander’s role from that of a coach, who gives the team guidance, to that of a chess player with direct control over every piece. The improved battlefield insight provided by NCW allows commanders to grasp the battlefield much more precisely and quickly, and from a greater distance. Technology has made the conduct of warfare deceptively more certain and precise than before. There is a belief that Clausewitz’s fog of war can be minimized by redoubling acquisition efforts on exquisite technological equipment.30 A possible outcome of such a development is a return to a traditional command and control approach, in which command and control might be seen as unidirectional rather than as a virtuous feedback loop. The potential risk associated with this trend is the micromanagement of warfare, with a detrimental impact on mission command philosophy (figure 1).

Figure 1. Network-centric warfare and command and control functions

Source: Courtesy of the author, adapted by MCUP.

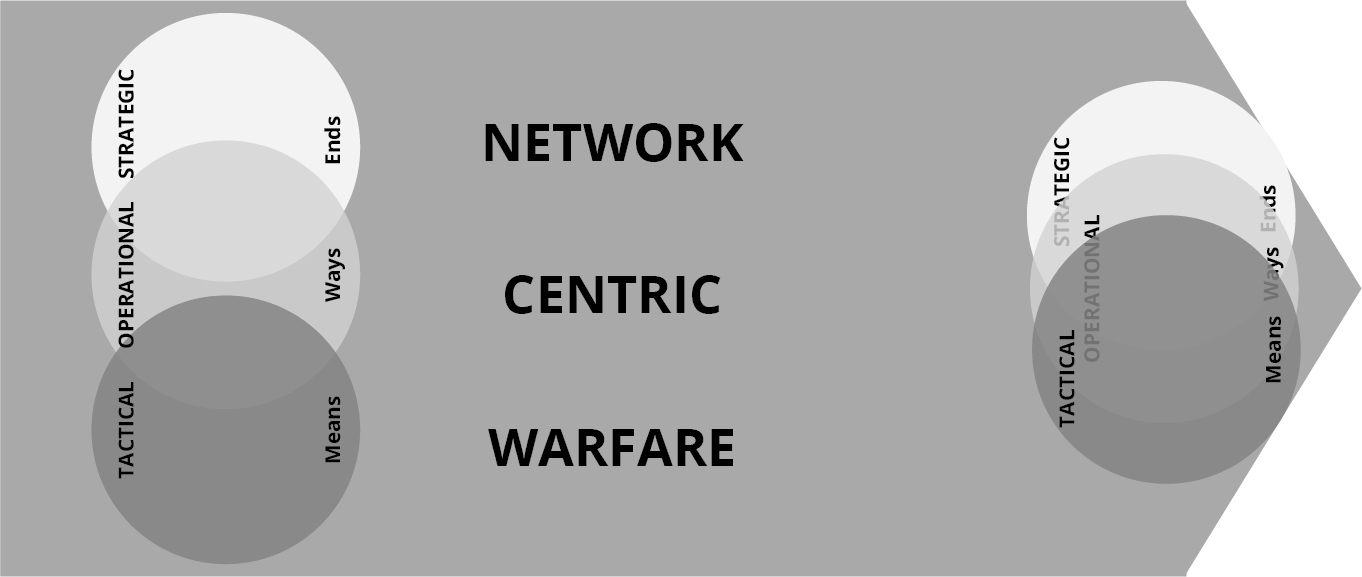

Another effect of NCW is the compression of operations and levels of war. Because operations could potentially be conducted from a remote station, as when a pilot flies a General Atomics RQ-1 Predator unmanned aircraft, there might be less appetite to involve ground forces in a conflict and, consequently, less need for delegation and for relocating operational headquarters on or close to the battlefield. Indeed, if the strategic command is virtually colocated with the tactical agents of the war, the operational level might disappear or be bypassed by the other two levels (figure 2).

Figure 2. Network-centric warfare and levels of warfare

Source: Courtesy of the author, adapted by MCUP.

A comparison of trends in the interwar period and the current period reveals a discontinuity in command and control. Regardless of what doctrine advises and what historical examples demonstrate, today there is a constant attempt to attain certainty and understand the battlefield before committing military forces.31 Moreover, technologically driven solutions to uncertainty are seen as the best options available. The hyperintegration of all means on the battlefield initiated by NCW is achieved not only with forces in the field but also by coordinating through a shared view of the battlefield. However, if all critical agents, from the squad to the geographic combatant command, see the same picture, there might be a desire to micromanage the force on the battlefield, disrupting the virtuous cycle of feedback.32 At every level of war, leaders aim to minimize the direct involvement of ground forces to reduce friendly losses while maximizing enemy casualties. Just as interwar strategists promoted the mechanization of the battlefield both to improve firepower and to protect soldiers, today’s emphasis on the automation of warfare aims to limit or completely avoid the deployment of ground troops, at least during the initial phases of a conflict. This trend seems unstoppable and potentially dangerous, because it relies on the supposed perfection of the automated execution of a command.

The Future of Command and Control

As the world rapidly moves toward increasing automation, it has been suggested that a revolutionary breakthrough in warfare is about to happen, one that “may even challenge the very nature of warfare itself.”33 The intensity of the dispute between advocates and opponents of the autonomous revolution resembles the debate generated by Italian general Giulio Douhet’s absolutist vision of air power in the 1920s. While Douhet believed that future wars would be fought and won by large aircraft, advocates of the disruptive role of autonomous weapons are confident that the race to achieve superiority in automation will eventually lead to a war fought without humans. On one side, Paul Scharre, in Army of None: Autonomous Weapons and the Future of War, envisions the combination of advanced artificial intelligence and autonomous machines able to plan and execute military operations without any interaction with military operators.34 On the other side, some authors believe that the development of a general artificial intelligence able to replace the human decision-making process is still far off.35 Yet advocates of autonomy on the battlefield continue to strongly promote their vision of warfare, as the air power absolutists did during the interwar period. If the next conflict combines automation with traditional human-led platforms and systems, the level of reliance on autonomous weapon systems will present a dilemma to the next generation of leaders.

According to Merriam-Webster, autonomy refers to the right or condition of self-government.36 However, self-government implicitly presupposes the presence of someone or something else that can influence autonomous actions. When the notion of autonomy is applied to the relationship between human beings and weapon systems, the concept is less clear than it seems. Applied to weapons, self-governance theoretically spans the range from the automatic rifle to the U.S. Navy’s Aegis (shield) combat system.37 According to Paul Scharre:

Machines that perform a function for some period of time, then stop and wait for human input before continuing, are often referred to as “semiautonomous” or “human in the loop.” Machines that can perform a function entirely on their own but have a human in a monitoring role, who can intervene if the machine fails or malfunctions, are often referred to as “human-supervised autonomous” or “human on the loop.” Machines that can perform a function entirely on their own and humans are unable to intervene are often referred to as “fully autonomous” or “human out of the loop.”38

Scharre’s definition provides three elements of interest. First, from the machine gun to the robot, every system has a given level of autonomy to perform a specific task, and the complexity of that task defines whether the human is in, on, or out of the loop. Second, the loop is the cognitive and physical process by which operators articulate their will to achieve an objective; it is the decision-making cycle that John Boyd synthesized in the famous OODA acronym. Third, the definition recognizes the interaction between a machine and a human being. Yet the machine is “an apparatus using mechanical power and having several parts, each with a definite function and together performing a particular task.”39 Therefore, the machine is a system of elements. Similarly, Warfighting describes war as a clash between opposing wills in which each belligerent is not guided by a single intelligence, because it is a complex system consisting of numerous individual parts.40 To achieve full autonomy in warfare, the critical factor is therefore the development of a general, all-encompassing artificial intelligence (AI) able to coordinate multiple modular artificial intelligences integrated in every subsystem of warfare.41 As AI grows more sophisticated, the future of warfare may involve operations in which the human decision maker is almost entirely out of the loop, making warfare nearly fully autonomous (figure 3).

Figure 3. Parallelism between autonomous weapons and autonomous warfare

Source: Courtesy of the author, adapted by MCUP.

The idea of autonomous warfare carried out by autonomous systems raises critical ethical and existential concerns. In 2014, Stephen Hawking warned that “the development of full artificial intelligence could spell the end of the human race.”42 Competition among major powers is accelerating the race to acquire autonomous weapon systems more powerful than those of their peers. China aims to use AI to exploit large troves of intelligence, with the objective of generating a common operating picture and thereby accelerating battlefield decision making.43 Russia continues to pursue its defense modernization agenda, with the aim of robotizing 30 percent of its military equipment by 2025. In addition, Russia is actively integrating different platforms to develop a swarming capability, the autonomous and deliberate integration of sensors and kinetic and nonkinetic platforms that will allow it to operate in the absence of human interaction.44 The U.S. Department of Defense takes a more conservative approach to AI. The Summary of the 2018 Department of Defense Artificial Intelligence Strategy: Harnessing AI to Advance Our Security and Prosperity directs the use of AI in a human-centered manner, in particular to enhance military decision making and operations across key mission areas. This approach aims to improve situational awareness and decision making, increase the safety of operating equipment, implement predictive maintenance and supply, and streamline business processes. In addition, the strategy states: “We will prioritize the fielding of AI systems that augment the capabilities of our personnel by offloading tedious cognitive or physical tasks and introducing new ways of working.”45 Considering all these approaches together, the trends are:

- automation of information gathering and situational awareness;

- enhanced robotization of the battlefield and integration of platforms;

- augmented decision-making processes to increase the tempo of machine execution of missions.46

In the near future, it is unlikely that a general artificial intelligence able to solve every problem in warfare autonomously will be effectively deployed on the battlefield and exclude humans from it entirely.47 Current capabilities, for example the Navy’s Lockheed Martin Aegis weapon system, can operate autonomously and in some ways outperform human operators, but only in specific domains. However, technological developments have enabled autonomous systems to coordinate under human supervision and swarm against a given threat, as demonstrated by the U.S. Navy’s Control Architecture for Robotic Agent Command and Sensing.48

At the tactical level, autonomous capabilities give clear advantages to whoever is able to deploy them. In a 2016 video, Semenov Dahir Kurmanbievich, a futurist and visionary Russian inventor, tried to demonstrate in a fictional yet very realistic clip how autonomous weapons could easily destroy an adversary’s conventional forces.49 The main features of autonomous weapons in battle are low signature, low visibility, low cost, absence of direct human involvement, high precision, increased durability, interconnection among tactical agents, self-repair, and adaptability. As a result, at the tactical level, it is possible to envision a tapestry of interconnected platforms able to deliver the same or greater firepower at lower human and economic cost.50 The Marine Corps is testing robotic war balls, unmanned devices that support the establishment of the beachhead during the most dangerous phase of amphibious operations: the ship-to-shore movement.51 These autonomous systems might help set conditions for a safer landing of forces by swarming and storming the enemy’s defense systems ashore.52 An attack of this kind can only be defended against by systems that operate quickly, with autonomy and intelligence, further accelerating the need for automation.53

Domain-specific AI will transform conflict and, like previous innovations in military capability, has the potential to profoundly disrupt the strategic balance. At the strategic level, AI may play two different roles. First, fielding the most efficient and effective AI will be critical to achieving an asymmetrical advantage over competitors; therefore, AI may reshape the global balance of power. Indeed, one of the objectives of the 2018 National Defense Strategy is to “invest broadly in military application of autonomy, artificial intelligence, and machine learning, including rapid application of commercial breakthroughs, to gain competitive military advantages.”54 Second, the race to field autonomous weapons on the battlefield may strain civil-military relations over control of AI development. Businesses and industries in the sector have already surpassed the military in the research and application of autonomous systems, raising concerns and tensions.55 In this regard, the U.S. National Security Strategy recognizes the strategic impact of AI, calling for shared responsibility with the private sector in matters that can affect national security.56

Critical applications of AI and autonomous systems may augment the ability to predict patterns and visualize potential threats.57 An AI-augmented operational planning team may develop courses of action or test military contingency plans, offering unanticipated recommendations because of the unparalleled amount of information that an AI can process.58

In the future, the decision-making process will likely see the introduction of autonomous technologies that significantly affect three phases of the OODA loop: observation (O), orientation (O), and action (A). This will, however, result in further centralization of the decision (D) phase. The automation of armed conflict offers such clear opportunities as to represent the next asymmetric advantage. In broader terms, autonomous systems are considered a solution to uncertainty, a means of power projection in contested environments, and a way to reduce dependence on human personnel. The relationship between humans and autonomous systems may change the dynamics of command and control functions. A traditional staff assessing the risk of a military intervention is constrained by imperfect information. In the near future, modular AI might help analyze and assess risks with a lower error rate. Such technology is already available in the medical field, where it is increasing diagnostic accuracy.59 From a political perspective, a potentially risk-free operation with limited domestic impact might make the decision to use military power easier and therefore more likely.60

In 2007, U.S. Marine Corps General James E. Cartwright predicted that “the decision cycle of the future is not going to be minutes. . . . The decision cycle of the future is going to be microseconds.”61 In the near term, any actor that possesses that capability will probably engage forces in split seconds. Future command and control architectures will combine ground- and space-based sensors, unmanned combat aerial vehicles, and missile defense technologies, augmented by directed energy weapons. In addition, the human-based decision-making process will be affected by the data overload produced by the proliferation of information-based systems.62 Because attackers will be able to engage faster and with smaller systems, defenders will not be able to observe the activity, orient themselves, decide how to respond, or act on that decision in time. Attackers will try to place themselves inside the defender’s OODA loop, shattering the adversary’s ability to react.63 The action-reaction-counteraction cycle that has informed the military decision-making process so far will become too fast and unpredictable for humans to manage in the traditional way.64 At the strategic and operational levels, centralizing the decision-making process might become the favored approach for dealing with a “flash war,” given the reactivity it requires, the short time available, and the dispersion of forces.65

Autonomous agents can cope with huge quantities of information far better, and more efficiently, than human beings. Unaffected by cognitive biases, they are also immune to physical factors such as fatigue and do not rely on human heuristics that may draw unwarranted connections in data.66 At the strategic level, decision makers assisted by an AI able to offer recommendations may perceive automated systems as an all-seeing oracle, which could lead to the advisory function of qualified staff being replaced.67 The critical implication is the amplification of two psychological aspects of the decision-making process. On the one hand, the oracle may augment the decision maker’s sense of agency, even if he or she lacks direct experience of warfare.68 On the other hand, it may supplant the arduous mental work that a critical decision demands, reducing the relevance of experienced staff in favor of the speed of computer-based advice.

Autonomous warfare will be characterized by the integration of systems, information dominance, amplified standoff weapon technologies, and a misleading perception of risk-free war (e.g., reduced risk to friendly ground troops in a war waged by autonomous systems). Modular AI can be programmed to deal with a full range of strategic issues. It is not difficult to envision a tendency toward escalation dominance aimed at forcing the adversary to surrender.69 All this can be highly destabilizing and might encourage preemptive attacks, as well as prompt the development of new forms of asymmetric warfare.70 The instantaneous decision making implied in high-intensity operations, in cyberspace, and in the employment of missiles and unmanned vehicles moving at supersonic speeds has led to warnings about hyperwar.71 As Clausewitz rightly noted,

the maximum use of force is in no way incompatible with the simultaneous use of intellect. If one side uses forces without compunction, undeterred by the bloodshed it involves, while the other side refrains, the first will gain the upper hand. That side will force the other to follow suit; each will drive its opponent toward extremes, and the only limiting factors are the counterapproaches inherent in war.72

Conclusions

Technological innovations give an effective advantage to those who possess them. The important role of technological innovations during World War II, such as radio, radar, and tanks, is indisputable. Nevertheless, technological innovation in isolation will have a limited impact if it is not well integrated into an overarching culture and philosophy of warfare. During the interwar period, the German Reichswehr was able to capitalize on technological innovations by integrating them into a doctrine that pursued fighting at greater distances, with faster execution, and through increasingly combined units of different arms. The German General Staff became a critical asset for coping with and properly processing a great amount of information. Auftragstaktik, the command philosophy of the Reichswehr, was refined to serve the concept of “short and lively” warfare.73 But seeking “short and lively” campaigns was a traditional approach in the German Army, with roots that reached far deeper than the interwar period. The strong German military culture played a primary role in the development of modern and effective tactics that gave German soldiers a significant advantage over their opponents at the beginning of World War II.

Indeed, Auftragstaktik has shaped the mission command philosophy of many modern doctrines as the most effective approach to dealing with the uncertainty of warfare.74 With current military capabilities, commanders can obtain an almost omniscient, technology-enabled view of the entire battlespace with near-global reach. This very aspect underpins network-centric warfare. However, this all-seeing view may clash with the original idea of Auftragstaktik. The difference between the application of decisional autonomy, or mission command, in past and modern warfare could not be more striking. Whereas the German 7th Panzer Division enjoyed decisional autonomy during the invasion of France at the beginning of World War II, during the 2003 march up in Iraq the 1st Marine Division’s entire chain of command observed its maneuver from afar because higher headquarters “wanted to know where Land Component units were.”75 In the latter case, the autonomy of the 1st Marine Division commander was granted at the discretion of higher echelons. The NCW structure, in fact, could have allowed detailed control of the fighting force, something not possible for the German 7th Panzer Division in World War II given the technology available. In military operations other than war, such as counterinsurgency operations in Afghanistan, the degree of control exercised at the lowest level is even more critical. For example, in many cases, the targeting approval authority is the theater commander even when the tactical operation is performed by units at the squad and platoon level.76

Technological advancement is pursued to satisfy military leadership’s need for certainty, even though the defining problem of command and control, which overwhelms all others, is the need to deal with uncertainty.77 This is an irreversible trend ingrained at every level of warfare. It may also result from a Western military culture that is eager to commit forces to fight quickly, precisely, and at a distance but is less prone to the indiscriminate use of violence, in order to prevent excessive human casualties (friendly, enemy, or civilian). In this context, the likely result might be a return to traditional command and control, in which command and control are seen as unidirectional rather than as a reciprocal influence. Moreover, if the strategic commander is virtually colocated with the tactical one, the operational commander may disappear or at least be bypassed as the other two levels overlap. The potential risk associated with this trend is the micromanagement of warfare at the expense of mission command.

The integration of autonomous weapons is a key aspect of future warfare. Automation augments both the decision-making process and the tactical execution of military actions. Current technologies still require, at a minimum, a human on the loop. Soon, the creation of effective autonomous systems, with humans nearly out of the loop, will have dangerous consequences at the strategic level and a possibly detrimental impact on the balance of power. The possibility of a risk-free war based on oracle-like advice from autonomous machines and tireless autonomous weapon systems might make preemptive and preventive war appealing as a strategy to acquire an asymmetric advantage over the enemy. Clausewitz warned that such an approach might escalate the confrontation among competitors rather than achieve a prompt surrender.78

The very idea of bias-free artificial intelligence is mistaken, which should caution against overreliance on it as a perfect solution. Modular artificial intelligence and machine learning, the foundation of autonomous systems, are limited by the dataset that a human programmer integrated into the development of the algorithm and are therefore potentially biased by humans from the start. In fact, scientific articles caution against the use of artificial intelligence in risk-related matters.79 At the tactical level, important ethical questions arise. On an information-degraded battlefield, autonomous agents will be delegated control of tactical actions based on programmed artificial intelligence that might diverge from the just-war criteria. Political and strategic leaders will face critical ethical dilemmas, as allowing autonomous systems to perform their warfare tasks freely may result in an escalation of the uncontrollable (and possibly indiscriminate) use of violence. Conversely, restraining the development and use of autonomous systems leaves opposing powers in a position of strategic advantage. Striking a balance in the decision-making process between the indiscriminate use of automation and its blind confinement, therefore, can be achieved only through the advisory role of senior and experienced military leaders, who will bridge the gap between the oracle-like use of autonomous systems and the personal human judgment of the political and strategic decision maker.

Endnotes

- The authors would like to thank the anonymous reviewers and Col C. J. Williams for their insightful comments on an earlier version of this article. Additional thanks go to Jason Gosnell for his impeccable editorial skill that has been extremely beneficial to the article.

- Donald E. Vandergriff, “How the Germans Defined Auftragstaktik: What Mission Command Is—AND—Is Not,” Small Wars Journal, 2017.

- David S. Alberts, John J. Garstka, and Frederick P. Stein, Network Centric Warfare: Developing and Leveraging Information Superiority, 2d ed. (Washington, DC: C4ISR Cooperative Research Program, 2000), 2.

- Command and Control, MCDP-6 (Washington, DC: Headquarters Marine Corps, 1996), 63.

- Andrew P. Williams and Paul D. Scharre, eds., Autonomous Systems: Issues for Defence Policymakers (Norfolk, VA: Capability Engineering and Innovation Division, Headquarters Supreme Allied Commander Transformation, 2017), 10.

- Warfighting, MCDP-1 (Washington, DC: Headquarters Marine Corps, 1997), 86.

- Gen John R. Allen, USMC (Ret), and Amir Husain, “On Hyperwar,” Fortuna’s Corner (blog), July 2017.

- Command and Control, 52.

- Martin Van Creveld, Command in War (Cambridge, MA: Harvard University Press, 1985), 1.

- UK Defence Doctrine, Joint Doctrine Publication 0-01 (Shrivenham, UK: Development, Concepts and Doctrine Centre, 2014), 42.

- Command and Control; and UK Defence Doctrine, 37.

- DOD Dictionary of Military and Associated Terms (Washington, DC: Department of Defense, 2020), 41.

- Williamson Murray and Allan R. Millett, eds., Military Innovation in the Interwar Period (New York: Cambridge University Press, 2009), 1–5.

- R. R. (Dicky) Davis, “VII. Helmuth von Moltke and the Prussian‐German Development of a Decentralised Style of Command: Metz and Sedan 1870,” Defence Studies 5, no. 1 (2005): 86, https://doi.org/10.1080/14702430500097242.

- Williamson Murray, “Comparative Approaches to Interwar Innovation,” Joint Force Quarterly, no. 25 (Summer 2000): 83.

- MajGen Werner Widder, German Army, “Auftragstaktik and Innere Führung: Trademarks of German Leadership,” Military Review 82, no. 5 (September–October 2002): 4–9.

- James S. Corum, The Roots of Blitzkrieg: Hans von Seeckt and German Military Reform (Lawrence: University Press of Kansas, 1992), 37.

- Capt Jonathan M. House, USA, Toward Combined Arms Warfare: A Survey of 20th-Century Tactics, Doctrine, and Organization (Fort Leavenworth, KS: U.S. Army Command and General Staff College, 1984), 43–48.

- Doctrine for the Armed Forces of the United States, JP 1 (Washington, DC: Joint Chiefs of Staff, 2017), ii.

- Murray, “Comparative Approaches to Interwar Innovation,” 84.

- Alan Beyerchen, “From Radio to Radar, Interwar Adaptation to Technological Change in Germany, the United Kingdom, and the United States,” in Military Innovation in the Interwar Period, 267–78.

- DOD Dictionary of Military and Associated Terms, 42.

- Doctrine for the Armed Forces of the United States, V-15.

- Ron Tira, The Limitations of Standoff Firepower-Based Operations: On Standoff Warfare, Maneuver, and Decision, Memorandum 89 (Tel Aviv, Israel: Institute for National Security Studies, 2007).

- T. X. Hammes, “The Future of Warfare: Small, Many, Smart vs. Few/Exquisite?,” War on the Rocks, 16 July 2014.

- Barry M. Leiner et al., “Brief History of the Internet,” Internet Society, 13 September 2017.

- Command and Control, 76.

- John J. Garstka, “Network-Centric Warfare Offers Warfighting Advantage,” Signal, May 2003.

- VAdm Arthur K. Cebrowski, USN, and John J. Garstka, “Network-Centric Warfare: Its Origin and Future,” U.S. Naval Institute Proceedings 124, no. 1 (January 1998).

- John F. Schmitt, “Command and (Out of) Control: The Military Implications of Complexity Theory,” in Complexity, Global Politics, and National Security, ed. David S. Alberts and Thomas J. Czerwinski (Washington, DC: National Defense University, 1997), 101.

- Command and Control, 104.

- Command and Control, 42.

- The Operational Environment and the Changing Character of Future Warfare (Fort Eustis, VA: U.S. Army Training and Doctrine Command, 2017), 6.

- Paul Scharre, Army of None: Autonomous Weapons and the Future of War (New York: W. W. Norton, 2018), 6.

- Kareem Ayoub and Kenneth Payne, “Strategy in the Age of Artificial Intelligence,” Journal of Strategic Studies 39, nos. 5–6 (November 2015): 780, https://doi.org/10.1080/01402390.2015.1088838.

- “Autonomy,” Merriam-Webster, accessed 16 March 2020.

- “Aegis: The Shield of the Fleet,” Lockheed Martin, accessed 18 February 2019.

- Williams and Scharre, Autonomous Systems: Issues for Defence Policymakers, 10.

- Oxford Dictionaries, accessed 17 March 2019.

- Warfighting, 12.

- Ayoub and Payne, “Strategy in the Age of Artificial Intelligence,” 795.

- Rory Cellan-Jones, “Stephen Hawking Warns Artificial Intelligence Could End Mankind,” BBC News, 2 December 2014.

- Artificial Intelligence and National Security (Washington, DC: Congressional Research Service, 2019), 20.

- Tamir Eshel, “Russian Military to Test Combat Robots in 2016,” DefenseUpdate, 31 December 2015; and Artificial Intelligence and National Security, 24.

- Summary of the 2018 Department of Defense Artificial Intelligence Strategy: Harnessing AI to Advance Our Security and Prosperity (Washington, DC: Department of Defense, 2018), 6–7.

- Summary of the 2018 Department of Defense Artificial Intelligence Strategy, 7.

- Robert Richbourg, “ ‘It’s Either a Panda or a Gibbon’: AI Winters and the Limits of Deep Learning,” War on the Rocks, 10 May 2018.

- David Szondy, “US Navy Demonstrates How Robotic ‘Swarm’ Boats Could Protect Warships,” New Atlas, 6 October 2014.

- “New Weapon Designed by Russian Inventor Demonstrating of [sic] Destroying US, Israel, and Russian Tank,” YouTube video, 6:15, 26 March 2016.

- John Paschkewitz, “Mosaic Warfare: Challenges and Opportunities” (working draft, DARPA, June 2018).

- Patrick Tucker, “The Marines Are Building Robotic War Balls,” Defense One, 12 February 2015.

- Tucker, “The Marines Are Building Robotic War Balls.”

- Ayoub and Payne, “Strategy in the Age of Artificial Intelligence,” 807.

- Summary of the 2018 National Defense Strategy of the United States of America, 7.

- Scott Shane and Daisuke Wakabayashi, “ ‘The Business of War’: Google Employees Protest Work for the Pentagon,” New York Times, 4 April 2018.

- The National Security Strategy of the United States of America (Washington, DC: White House, 2017), 35.

- Devin Coldewey, “DARPA Wants to Build an AI to Find the Patterns Hidden in Global Chaos,” TechCrunch, 7 January 2019.

- Michael Peck, “The Return of Wargaming: How DoD Aims to Re-Imagine Warfare,” GovTechworks, 5 April 2016; and Ayoub and Payne, “Strategy in the Age of Artificial Intelligence,” 807.

- Lauren F. Friedman, “IBM’s Watson Supercomputer May Soon Be the Best Doctor in the World,” Business Insider, 22 April 2014.

- James D. Fearon, “Domestic Political Audiences and the Escalation of International Disputes,” American Political Science Review 88, no. 3 (September 1994): 3, https://doi.org/10.2307/2944796.

- Gen James E. Cartwright, as quoted in Peter W. Singer, “Tactical Generals: Leaders, Technology, and the Perils,” Brookings, 7 July 2009.

- Thomas K. Adams, “Future Warfare and the Decline of Human Decisionmaking,” Parameters 31, no. 4 (Winter 2001–2): 2.

- Adams, “Future Warfare and the Decline of Human Decisionmaking,” 5.

- Staff Organization and Operations, Field Manual 101-5 (Washington, DC: Department of the Army, 1997), 5-22.

- Flash wars are defined as “a quickening pace of battle that threatens to take control increasingly out of the hands of humans.” Williams and Scharre, Autonomous Systems: Issues for Defence Policymakers, 15.

- Ayoub and Payne, “Strategy in the Age of Artificial Intelligence,” 808.

- Ayoub and Payne, “Strategy in the Age of Artificial Intelligence,” 807.

- Singer, “Tactical Generals.”

- Robert Jervis, “The Madness beyond MAD: Current American Nuclear Strategy,” PS: Political Science & Politics 17, no. 1 (Winter 1984): 34–35, http://doi.org/10.2307/419118.

- Ayoub and Payne, “Strategy in the Age of Artificial Intelligence,” 810.

- Gen John R. Allen, USMC (Ret), “On Hyperwar,” U.S. Naval Institute Proceedings 143, no. 7 (July 2017).

- Carl von Clausewitz, On War, ed. and trans. Michael Howard and Peter Paret (Princeton, NJ: Princeton University Press, 1989), 75.

- Robert M. Citino, The German Way of War: From the Thirty Years’ War to the Third Reich (Lawrence: University Press of Kansas, 2005), 102.

- Mission Command: Command and Control of Army Forces, Army Doctrine Reference Publication 6-0 (Washington, DC: Department of the Army, 2012), v.

- LtGen David D. McKiernan, USA, as quoted in A Network-Centric Operations Case Study: US/UK Coalition Combat Operations during Operation Iraqi Freedom (Washington, DC: Office of Force Transformation, Office of the Secretary of Defense, Department of Defense, 2005), 3–4.

- Joint Targeting, JP 3-60 (Washington, DC: Joint Chiefs of Staff, 2013), II-17.

- Command and Control, 54.

- Clausewitz, On War, 75.

- Osonde A. Osoba and William Welser IV, An Intelligence in Our Image: The Risks of Bias and Errors in Artificial Intelligence (Santa Monica, CA: Rand, 2017), 4, https://doi.org/10.7249/RR1744.