Major P. C. Combe II, USMC

https://doi.org/10.21140/mcuj.20211202004

Abstract: In light of the Commandant’s Planning Guidance, there is a renewed emphasis on educational wargaming in professional military education (PME). While wargaming has a long history in PME, there is currently a gap in the academic literature regarding wargaming as an adult educational tool. Scientific study has focused on adult education theory and models generally, highlighting the identification of four different learning experiences, each tied to a learning style: concrete experience, which suits those with a diverging learning style; abstract conceptualization, which suits those with the converging learning style; reflective observation, for those with an assimilating learning style; and active experimentation, which works well for those with an accommodating learning style. By effectively engaging each of these four experiences, educational wargaming can have utility for a diverse array of learning styles.

Keywords: wargaming, adult education, professional military education, PME, adult learning

The Commandant of the Marine Corps has called for an increased emphasis on wargaming both as a tool to assess new concepts and as a means to get Marines “reps and sets” in education and training, thereby facilitating improved combat decision-making skills.1 The Commandant has recognized the value of wargaming not only as a means to evaluate and refine various courses of action or to test new concepts, but also as a means to teach and evaluate student learning outcomes in a professional military education setting.2 This is the essence of educational wargaming: a game played for the purpose of teaching, or of evaluating the extent to which students have learned and can apply material, as a means of professional development.

While wargaming has a long history in military education, a trend that spans more than a century across multiple nations, there does not appear to be a holistic approach to understanding how best to develop and implement wargames as educational tools within a larger curriculum. A student-designed wargame, Able Archer 83 (AA83), was developed as part of a pilot program at Marine Corps University, Command and Staff College (CSC).3 The ostensible purpose of the program was to design a prototype educational wargame and then assess the game’s utility as an educational tool as well as student learning outcomes. While preliminary data collection indicates that the design team was successful in this effort, the team’s experience provides additional insight into how best to design and implement educational wargames as part of a comprehensive educational curriculum.4 The purpose of this article is to highlight lessons learned by the student design team in how best to design and implement educational wargaming as a component of professional military education.

In particular, the team gleaned three overarching lessons. First, educational wargames must be designed to accommodate all learning styles, which can be seen as analogous to the phases of Alice Y. Kolb and David A. Kolb’s learning cycle.5 In doing so, more student activities than just game play sessions may be necessary and may include post-play reflection in the form of seminar or group discussions. Second, game materials should complement the concepts as well as the verbiage used in other educational materials to ensure both maximum utility and the ability to assess learning outcomes. Finally, to accomplish this second goal, educational wargames should be designed using a combination of sequential and iterative design. Learning objectives, game mechanics and design, and assessment tools should be developed sequentially, in that order, each beginning once the previous component is as near to complete as possible. However, each individual component should be designed iteratively in order to continuously refine and improve the educational and assessment utility.

This article begins with a description of educational wargames, as compared to wargames designed for other purposes. Following that is an overview of adult education theory, serious games, and wargaming within professional military education. The article then provides an overview of the design process of student-designed wargame AA83 and how the design team attempted to design a game to stimulate a variety of learning styles. Finally, the article will highlight lessons learned by the design team in the effective design and implementation of educational wargames into a larger curriculum of professional military education.

Adult Educational Theory and Models

The value of experiential learning is well known and highlighted as critical to lifelong learning and professional development in the Marine Corps.6 Key concepts that contribute to the effectiveness of experiential learning include individual factors, instructional factors, and environmental factors, all of which must be considered when designing a curriculum to educate military professionals.7 These concepts are all tied to the science of learning, within which there is a particular discipline related to adult education (andragogy) as opposed to childhood education (pedagogy).8 In particular, experiential learning can prove valuable to military professionals, as it fosters adaptability and problem solving.9

Adult Education Theory

Andragogy makes a series of assumptions about adult learners. These assumptions are rooted in increased maturity, experience, desire to learn, and a focus on practical- or problem-centric learning.10 Based on these assumptions, there are a number of steps that educators may implement to improve the adult learning experience. These measures include setting a cooperative environment in which educators and learners work collaboratively to achieve objectives (solve problems) aligned with the learner’s particular interests.11 Fundamental to this approach is that adults desire to understand why they are learning and that they learn more effectively by doing than by memorizing facts. One criticism of andragogy as a theory is that it can lead to culture-blind approaches, which minimize the value of an authoritative instructor central to many cultures.12

Another approach to adult education is transformational learning, or trying to effect changes in the way individuals think about themselves or their environment.13 Transformational learning has been described as a rational process in which learners reflect on and discuss their learning experience.14 To facilitate this reflection and discussion, it is imperative that the learning experience be free from bias, take place in an accepting environment, and be led by an instructor who ensures that all participants have free and complete information.15 However, there have been two main critiques leveled at transformational learning. The first is that it fails to account for different frames of experience based on race, culture, or historical experience of varied learners in a single learning environment.16 The other critique is that transformational learning is hyper-rational and minimizes intangible aspects of learning such as relationships and emotion.17 Critical aspects of transformational learning include the provision of immediate and helpful feedback, tailoring learning activities to student strengths and weaknesses, and developing learning strategies that incorporate different perspectives and “frames.”18 Regardless of the approach, authors have attempted to articulate practical advice to achieve best outcomes in adult education.19

These tools include efforts to make the learning environment mirror the working environment. The more the educational environment adheres to the learner’s work environment, the greater application of learning outcomes to real-world scenarios. Educators can achieve this goal by using real-world examples or fostering small team or group work instead of individual effort, thereby engaging the adult student’s desire for practical application of their knowledge, as opposed to theoretical understanding divorced from practical use. This practical advice on improving adult education makes more sense when viewed from the perspective of learning styles and associated educational course design.

Alice Kolb and David Kolb focus on experiential learning and advance six basic propositions about learning.20 First is that learning is best conceived as a process, as opposed to a series of outcomes. This process should engage students and provide regular and useful feedback. Second is that all learning is relearning, in the sense that it draws on the learner’s beliefs and ideas. During learning these beliefs and ideas are tested and integrated with more refined beliefs and ideas. Kolb and Kolb also posit that learning requires a resolution of conflict between opposing modes of adaptation to the world. In this view, conflict and disagreement drive learning, as the learners seek to reconcile the apparently contradictory information. Kolb and Kolb also describe learning as a holistic process of adaptation consisting of a tension between four mental models: thinking, feeling, perceiving, and behaving. In this environment of tension, learners achieve results through continuous transactions between themselves, other participants, and their environment. Last, Kolb and Kolb offer that learning is the process of creating knowledge through experience. At least one author has posited that educational games are particularly effective at stimulating the experimentation phase of the learning cycle and that the knowledge gained through experimentation, reflection on the results of a player move, and conceiving of a new move or strategy is emblematic of this cycle of learning through experience.21

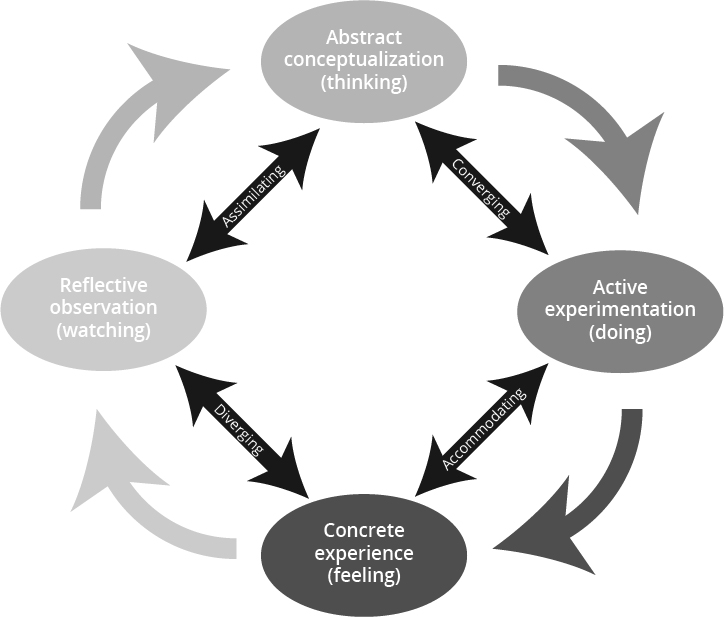

From this backdrop, Kolb and Kolb conclude that there are “grasping” experiences, in which learners understand the concepts being taught, and “transforming” experiences, which change the way learners think about a particular issue. Grasping experiences include both concrete experience and abstract conceptualization. Transforming experiences engage reflective observation and active experimentation. All learning involves some component of each of these experiences, which tie to a learning cycle of thinking (abstract conceptualization), doing (active experimentation), feeling (concrete experience), and watching (reflective observation). In turn, these learning experiences are linked to four basic learning styles.

Figure 1. Learning cycle and corresponding learning styles

Source: courtesy of author, adapted by MCUP.

The first learning style is diverging. These learners are best at viewing concrete situations from many points of view. They learn best through concrete experience and reflective observation, feeling and watching the results of their previous actions. The opposite learning style is converging, where learners tend to be best at finding practical applications for ideas and theories. Converging learners learn best through an iterative practical application of an idea or process, by which the learner can experiment with new knowledge (active experimentation), observe or reflect on the results, and conceive of new approaches to the learning scenario in real time (abstract conceptualization).22 Assimilating learners, who increase knowledge through abstract conceptualization and reflective observation experiences, are best at understanding a wide range of information and boiling it down to a concise and logical form. Last, accommodating learners tend to be hands-on, focusing on their first inclination rather than logical analysis. Their dominant learning abilities are found in the concrete experience and active experimentation experiences. Though overlapping somewhat with the learning experiences for converging learners, accommodating learners tend to draw more educational utility from the concrete experience or “feeling” portion of the experience as opposed to the active experimentation or “doing” portion of the experience.23
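The pairing of learning styles with learning experiences described above can be written down as a small lookup table. The following Python sketch is offered only as an illustration: the names `Experience`, `LEARNING_STYLES`, and `fully_supported_styles` are hypothetical and do not come from Kolb and Kolb or from the AA83 design team. It shows one way to check which learning styles have both of their dominant experiences covered by a given set of activities.

```python
from enum import Enum


class Experience(Enum):
    """The four learning experiences, labeled with the article's shorthand."""
    CONCRETE_EXPERIENCE = "feeling"
    REFLECTIVE_OBSERVATION = "watching"
    ABSTRACT_CONCEPTUALIZATION = "thinking"
    ACTIVE_EXPERIMENTATION = "doing"


CE = Experience.CONCRETE_EXPERIENCE
RO = Experience.REFLECTIVE_OBSERVATION
AC = Experience.ABSTRACT_CONCEPTUALIZATION
AE = Experience.ACTIVE_EXPERIMENTATION

# Each learning style paired with its two dominant experiences,
# following the description in the text.
LEARNING_STYLES = {
    "diverging": {CE, RO},
    "converging": {AC, AE},
    "assimilating": {AC, RO},
    "accommodating": {CE, AE},
}


def fully_supported_styles(offered):
    """Return, alphabetically, the styles whose two dominant
    experiences are both present in the offered set."""
    offered = set(offered)
    return sorted(
        style for style, needed in LEARNING_STYLES.items() if needed <= offered
    )


# Illustrative assumption: a play session alone offers only "doing" and "feeling".
print(fully_supported_styles({AE, CE}))
# Adding a period of reflection brings in "watching".
print(fully_supported_styles({AE, CE, RO}))
```

Under these assumptions, a play session by itself fully covers only the accommodating style, and each additional experience offered extends coverage to further styles.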

Wargames and Serious Games as Educational Tools

While gaming often has a negative or pedantic reputation in educational circles, it has a long history in the military educational system, and a number of authors have attempted to describe why wargaming is a useful educational tool.24 Discussion has included the “laws of learning” and how those apply in the wargaming context.25 The literature has identified six laws of learning and those aspects of wargaming or game design that support the application of those principles. In essence, these laws are what give wargames or other experiential learning tools their utility; they make knowledge stick.

The first law is readiness; essentially this means that the learner is mentally, physically, and emotionally ready to learn.26 Adult learners, as previously discussed, are often more motivated to learn and thus ready.27 Similarly, games generate “flow,” or the state in which a player focuses on the game to the exclusion of external stimuli.28 Flow is created by the narrative aspects of the game, as well as the give and take feedback between the player, the game, and the opponent (in multiplayer games).29

The second law of learning is “exercise” or the learning experience that causes the student to exercise or use a skill.30 Wargames excel in this context, as they require students to make decisions and better support development of critical thinking and decision making than other nonexperiential forms of learning.31 This problem-based learning provides context and purpose for the exercise of critical thinking and decision-making skills and provides practice in a simulated environment that closely matches the decisions military professionals will need to make.32 Military officers may also adapt their player behavior to best suit the requirements of the game and the nature of their opponent.33 Players may be openly antagonistic to one another or they may cooperate in achieving a common goal. Often, the strategy adopted from one play to another will vary based on the opponent or simply the way the game plays out.34

The third law of learning is “effect.” In essence, effect means that students learn more with positive emotions.35 A well-designed wargame should increase positive emotions by simply being fun to play.36 Effect is closely tied to the fourth law of learning, “intensity.” The more intense the feelings or emotions associated with a learning experience, the more effectively the student assimilates the learning objectives.37 Particularly in military education, the competitive aspect of the contest of wills can increase the intensity of feelings or emotions among military officers, leading to greater concentration on the task(s) at hand and thus improved learning outcomes.38

The final two laws of learning are related—“primacy” and “recency.” The concept of primacy posits that students more readily learn the first piece of information presented.39 Recency indicates that students better recall information learned most recently and that learning can be improved through cyclical or iterative reinforcement and building upon concepts recently taught.40 Games contribute to this by adequately designing feedback loops to reinforce the importance of certain player or opponent actions.41 Furthermore, games often include immediate consequences for poorly planned or executed player actions, contributing to a personalized understanding of why the decision leads to certain consequences.42

One researcher has tied wargames to a learning cycle very much akin to Kolb and Kolb’s learning cycle.43 Johan Elg has proposed that wargames encourage a cycle of learning as follows. First, during proposition, the player considers possible actions to take and makes a decision or proposition as to which action or actions best suit the scenario. Elg then posits that the player tests their proposition by making a game move. The play result will provide feedback in the form of a reaction. From this, the player enters what both models term reflection, by which the player assimilates new information and may change their playing style to suit the new mental model. With this perspective, it appears that wargames have the potential to impact each stage of Kolb and Kolb’s adult education cycle.

Other researchers have examined the effectiveness of serious games and scenario-based simulation in education.44 Evidence supports the effectiveness of serious games as an educational tool; however, there does appear to be a detrimental impact to learning effectiveness in games that impose an excessive mental workload.45 Thus, there is good reason to believe that wargaming as an educational tool is founded on solid adult educational theory. However, effective implementation of educational wargaming into professional military education requires a holistic approach to both game design and assessment of learning outcomes.46

Student-Designed Wargame AA83 and the Learning Cycle

The student wargame AA83 was built using three contributing elements to the game context: the real-life Exercise Able Archer 83, the 2018 unclassified Summary of the National Defense Strategy (NDS), and the newly designated warfighting function of information.47 The group then examined the key aspects of these elements of the game context and used those to develop the primary educational objectives of the wargame.

Elements of Game Context

The basic design of student-designed wargame AA83 is that two players, one Soviet and one American, are engaged in strategic competition within the timeframe of the late 1970s/early 1980s Cold War. The basic mechanism is to use a variety of different types of cards to achieve the player’s objectives.48 Phase one begins when players select a national security strategy and complementary agency. The national security strategy card provides a player’s “win conditions,” or minimum scores a player must achieve across a series of three competing national security priorities to defeat the opponent. During phase two, players then build a deck of 25 tailored player cards to achieve their required win conditions. During phase three, players employ their card decks with the intent of achieving their own win conditions while simultaneously frustrating those of the opponent. All player cards are designed using the historical scenario of Exercise Able Archer 83 and Cold War state competition as a backdrop, including both real historical events and counterfactual events that would have been feasible at the time. In addition, the design team drew game components from other aspects of the game frame. Key aspects, by game context component, are as follows.

Exercise Able Archer 83

Exercise Able Archer 83 has been characterized as the nearest that the United States and the Soviet Union came to nuclear war since the Cuban Missile Crisis in 1962.49 The exercise is critical, but the attendant tensions are the culmination of the previous two years of the Ronald W. Reagan presidency. Heightened rhetoric on both sides, exemplified in part by President Reagan’s designating the Soviet Union as “the focus of evil in the world” and an “evil empire” contributed to a tense security environment.50 This was exacerbated by increased military shows of force by the United States, designed to show that the Department of Defense (DOD) possessed a qualitative military advantage over its Soviet adversaries.51 From this perspective, Exercise Able Archer 83 was particularly provocative, in part because it tested many new aspects not previously included in a U.S. nuclear command post exercise.52 The exercise was but one component of this environment in which the risk of strategic miscalculation was heightened.53

A significant part of this miscalculation was based upon the fact that President Reagan caught the Soviets off-guard.54 Rather than continue the conciliatory approach of President James E. “Jimmy” Carter or revert to the realist détente approach of his fellow Republican president Richard M. Nixon as the Soviets expected, President Reagan adopted a much more aggressive approach.55 While this approach had its merits, it also had the unintended or unforeseen consequence of signaling to the Soviets that the United States was preparing to launch a secret and preemptive nuclear strike.56 As a result, and after being briefed on intelligence community estimates of Soviet fears, President Reagan recognized the need to adopt a more stable and predictable approach, which was in turn less provocative.57

2018 National Defense Strategy

Student wargame AA83 also incorporates the 2018 National Defense Strategy’s imperative to shift strategic focus from violent extremist organizations to long-term strategic competition with nation-state adversaries.58 In many ways, AA83 provides a useful parallel to today’s strategic environment, particularly vis-à-vis Russia, as Russian president Vladimir Putin is a product of the Soviet system and exhibits much of the same decision making that pervaded the Soviet system.59 In addressing this component of the AA83 game context, the designers sought to focus educational goals on the dynamic and volatile nature of the Cold War and current security environments, as well as the need to integrate DOD assets with all of the other instruments of national power to achieve U.S. objectives.60

From the 2018 National Defense Strategy, the team identified two key concepts. The first concept is competition in a dynamic and volatile security environment. To simulate this concept of state competition, the game provides players with the opportunity to change the opponent’s national security agency, thereby changing the resources or game moves available to a player during game play. The wargame also incorporates a defense readiness condition (DEFCON) scale, with certain player actions impacting this scale to greater or lesser degrees and any player driving the scale to DEFCON 1 being the loser. The team also viewed the need to integrate all instruments of national power as critical to the 2018 NDS and designed the game so that each player has three competing national security priorities to balance to achieve win conditions.
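The two rules described above (minimum scores across three priorities as win conditions, and an automatic loss for the player who drives the scale to DEFCON 1) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the AA83 rules themselves: the priority names, the threshold values, the order in which the two checks are applied, and the names `Player` and `resolve_action` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    name: str
    # Minimum score required in each national security priority.
    # Priority names and thresholds here are hypothetical placeholders.
    win_conditions: dict
    scores: dict = field(default_factory=dict)

    def meets_win_conditions(self):
        """True when every priority has reached its minimum score."""
        return all(
            self.scores.get(priority, 0) >= minimum
            for priority, minimum in self.win_conditions.items()
        )


def resolve_action(defcon, actor, opponent, defcon_shift, score_changes):
    """Apply one action and return (new_defcon, winner_name_or_None).

    A negative defcon_shift moves the scale toward DEFCON 1.
    Assumption: the DEFCON check is applied before the win-condition check.
    """
    for priority, delta in score_changes.items():
        actor.scores[priority] = actor.scores.get(priority, 0) + delta
    new_defcon = max(1, min(5, defcon + defcon_shift))
    if new_defcon == 1:
        # The player who drives the scale to DEFCON 1 loses.
        return new_defcon, opponent.name
    if actor.meets_win_conditions():
        return new_defcon, actor.name
    return new_defcon, None


american = Player("American", {"priority_a": 3, "priority_b": 2, "priority_c": 2})
soviet = Player("Soviet", {"priority_a": 2, "priority_b": 3, "priority_c": 2})

# A strong move in one priority does not win if the other two are neglected.
defcon, winner = resolve_action(3, american, soviet, -1, {"priority_a": 3})
print(defcon, winner)
```

The sketch makes the balancing problem concrete: a player cannot win by maximizing a single priority, and an action that advances a score can still lose the game if it pushes the scale too far.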

Warfighting Function—Information

Though somewhat broader than the warfighting context, deterrence is all about information. Strategic deterrence requires not only a demonstrated capability, but it also requires an understanding of an adversary’s perceptions and motivations.61 Part of the difficulty in understanding an adversary’s perceptions and motivations is a tendency to believe that the adversary sees and perceives actions and events either as intended or as the actor seeking to deter would view them.62

This disconnect, often described as “mirror-imaging,” was prevalent in the context of AA83. Not only did Soviet analysts and policy makers misinterpret President Reagan’s approach, but to a significant degree the U.S. policy makers and analysts misunderstood the Soviets as well.63 While every action sends a message to an adversary, the message received may not be the message intended.64 Furthermore, in addition to messaging the adversary, other stakeholders such as the civilian population or regional allies may receive a message as well.65 This mirror imaging can lead to strategic miscalculation when operating in an environment characterized by imperfect information. Furthermore, imperfect information can complicate decision making when an opponent’s goals or outcomes are unclear.66

From the warfighting function of information, the team focused on the concept of imperfect information, or the ways in which lacking an understanding of the opponent’s win conditions would complicate the player’s own decision making.67 The design team also viewed the larger strategic context in which actions or messages are viewed as critical to this element of game design. Accordingly, the design team created a series of interconnected effects between a player’s own cards, as well as between a player’s cards and those of the opponent. In essence, a player might foreclose their own actions, or conversely enable actions by their opponent. However, these interconnected effects between players may also be mutually beneficial, resulting in positive outcomes for both players. The design team also included a probabilistic factor into the game, with certain player actions becoming more likely to succeed based upon increases in one of the player’s national security priorities or having previously played some other card.

By focusing on these key aspects of the game context, the design team developed the following learning objectives:

Learning objective 1: Player identifies that the execution of a national strategy requires balancing of priorities, risks, and resources across all elements of national power;

Learning objective 2: Player understands the dynamic and changing nature of the security environment in which actions are taken;

Learning objective 3: Player appreciates the role of ambiguity/imperfect information in executing a strategy.

With the identification of learning objectives, the next step was to design a wargame to effectively teach students the concepts tied to those objectives.

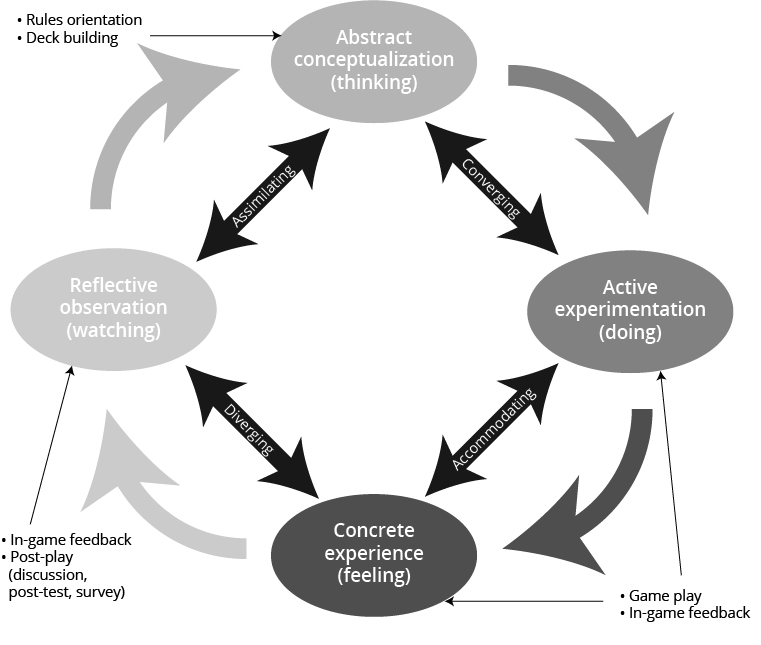

Linking Game Design to the Learning Cycle

The design team was also able to tie various phases of gameplay to the learning cycle. While not constrained or exclusive to the portions of the learning cycle identified, gameplay phases can roughly be viewed as corresponding to specific parts of the learning cycle. Rules familiarization and deck building can be seen as formulating a strategy or “thinking” about a gameplay approach (abstract conceptualization). Gameplay and in-game feedback correspond to the “doing” and “feeling” portions of the learning cycle (active experimentation and concrete experience). In-game feedback and post-play reflection impact the “watching” portion of the learning cycle (reflective observation). By incorporating all of these experiences into the game and the assessment tools, the team was able to design a wargame that effectively stimulates each step in the learning cycle and thereby engages each type of learning style.

Figure 2. Gameplay phases and corresponding steps in learning cycle/learning style

Source: courtesy of author, adapted by MCUP.

The student design team encountered a number of difficulties linking game design to the learning cycle. In linking game design to the abstract conceptualization phase, the design team did not initially include the learning objectives in the AA83 rulebook. In hindsight, this appears a rather obvious omission; however, in spurring players to develop their game approaches it proved helpful to include the learning objectives in the rulebook. The design team also struggled to strike the right balance in the time allotted to players to build their decks.

The design team experimented both with limiting the time to build decks (20 minutes) and with permitting players as much time as they would like. Ultimately, once moving into data gathering, the design team settled on affording players an unlimited amount of time to build their decks. Some players preferred this approach, as it allowed them to be very deliberate in building decks, which provided for complementary or “stacking” effects. However, other players indicated that unlimited time to build a deck was counterproductive and that players could not begin learning until they began playing. On the one hand, an unlimited time to deck build allows players more time to conceptualize a strategy; however, it can bog down players who do not learn best through the abstract conceptualization experience. On the other hand, a limited deck-building time allows players to play more quickly, an attractive proposition to those who learn best through active experimentation or concrete experience; however, it may take several iterations for players to fully appreciate the complex and interconnected nature of game actions in support of learning objectives one and two.

In-game feedback also proved challenging, particularly for first-time players. Student-designed wargame Able Archer 83 is relatively complex, and design team observation revealed that first time players sometimes failed to recognize or apply certain effects as described in the rulebook or on various cards. This led several players to indicate that their first play session was spent learning how to play the game, rather than learning in support of the objectives. In this instance, a simpler game design might better support learning in a busy professional military education curriculum. A balanced game design also proved elusive and presented game feedback challenges.

The Soviet player won the vast majority of games. This may indicate that the game as currently designed is unbalanced in favor of the Soviet player. Designing the American player deck to be more complex may not be feasible in an educational wargame designed for a professional military education curriculum in which students may play the game only one or two times. The design team also found that the DEFCON scale was largely a nonfactor, which hampered support of learning objective 2 related to a complex and dynamic national security environment. Future game refinement would include continuing to balance the Soviet and American player decks such that either player is equally likely to win with limited play sessions and to make the DEFCON scale more of a factor to reinforce learning objective 2.

In an effort to reinforce that players were operating with imperfect information, the design team initially included screens to block each player’s view of the opponent’s game board and national security priority scores. In hindsight, in-game feedback might better reinforce learning objective 3 by removing the screens and allowing players to see the opponent’s game board and national security priority scores. Each player would still be blind to the opponent’s win conditions, and seeing the opponent’s score might introduce an element of player bias or distraction by drawing the player’s focus toward a single high score rather than taking a holistic view of the game situation.

Finally, as discussed below, the game design team initially neglected the value of post-play reflection in supporting learning for those who learn best through reflective observation. The post-play assessment and survey used by the design team were of limited utility in this respect, for reasons discussed below. However, the post-play guided group discussion proved valuable to reflective observation by allowing players to discuss and refine their understanding of the learning objectives and providing players the opportunity to learn from the diverse play experiences of others.

Evaluation of Learning Outcomes in Games

Evaluation of adult learning can often be difficult, in part because effective adult education often involves evaluation that emphasizes comprehension over rote memorization.68 Another challenge is presented by the fact that experiential learning includes diverse instructional methods and requires equally diverse assessment methods.69 The drive toward standardization in education pressures educators to assess achievement of educational goals in a standardized way. Despite the wealth of research on experiential learning theory and extensive use of wargaming in professional military education, there does not appear to be a discussion of assessment methodologies for wargaming as an educational tool or an application of those assessment methodologies. This is especially true in the context of adult education, which values teaching concepts as opposed to rote memorization.70 The goal is to incorporate an assessment model that assesses the utility of the wargame in teaching each type of learner.71

While a general discussion of the assessment methodologies used by the design team follows, along with a description of each methodology’s strengths and weaknesses, the key concepts that the design team took from this experience are twofold. The first is that the tools used to assess the educational utility of the game, in particular the group discussion, were also a critical component of the educational process. Thus, effective incorporation of wargaming into professional military education should include some form of guided or directed period of reflective observation to stimulate learners with assimilating or diverging learning styles. The second, which follows from the first, is that it may be appropriate to engage players/students in a group or guided discussion or other period of reflection prior to assessing learning outcomes through other means.

Survey

One promising means of assessment would appear to be post-play reflection or interview of players to assess learning outcomes.72 This reflection most often takes the form of group discussions, interviews, or questionnaires; however, surveys can also be an effective means of engaging player reflection as an assessment tool.73 Surveys have a number of strengths as an assessment tool and can provide an accurate perspective as to the relative emphasis or importance that respondents placed on a particular issue. Surveys are also effective generalized assessment tools when specific information is not required. Protection of personally sensitive or classified information can also be accomplished via survey.74 On the other hand, surveys are prone to respondent bias and are not effective tools for garnering detailed information.75 Despite these weaknesses, surveys can form a valuable component of a more holistic assessment methodology by connecting with the reflective step of the learning process.76

Guided Discussion/Interview

Reflection can also include the use of interviews, and in this case the design team opted for a group guided discussion or after action review.77 Much as with surveys, the reflective nature of a focus group or group discussion can tie to those who learn through a reflective, observation-driven learning style, as well as those who learn through thinking and abstract conceptualization.78 This format was chosen not only to assess the preliminary educational utility of the AA83 wargame but also to identify potential future improvements.

Guided or group discussion can complement data gathering during a survey in a number of ways. Group discussions or focus groups are useful in gathering in-depth information and in resolving conflicting or contradictory claims; for instance, when players’ educational outcomes vary based on the role played during the game or the specific manner in which gameplay progressed.79 Group discussions or focus groups can also explain why people conducted certain actions or took certain lessons away from a gaming experience.80 As demonstrated by the student design team’s play-testing, the group discussion can also provide an avenue for students to learn from the experiences of others whose gameplay included different experiences.81 However, guided discussions or focus groups can be subject to a number of biases based on the relationships between or perceptions of certain group members.82 Unless the sample size is large enough, it may also be difficult to ascertain if a group provides a representative sample of the relevant population as a whole.83 Other group member biases may also impact their responses, such as individuals attempting to appear in a more favorable light to the moderator or other group members.84

Pre-/Post-Test Assessments

Pre-/post-test assessments can tie educational outcomes to those who learn best through concrete experience and active experimentation by assessing changes or improvements in player understanding of certain concepts through gameplay.85 The pre-/post-test method’s primary strength is in identifying changes in knowledge or behavior as a result of the assessed activity.86 Post-test assessments can also provide subjective feedback as to the “why” behind changes in player behavior or in identifying game satisfaction.87 On the other hand, pre-/post-test methodologies may fail to account for psychological or cognitive differences in players when assessing learning outcomes.88 Other biases that may be present in post-play testing include recency bias, in which players already knew or understood a concept but perform better on a post-test assessment because the topic is fresh in their minds.89

Effective pre-/post-test administration may also require two groups, a test group and a control group, to draw statistically significant conclusions.90 Poorly crafted questions may not yield the data sought and may not be fully understood by the students.91 Pre-test assessments also require some previous knowledge or understanding of a concept on the part of students in order to truly assess learning outcomes.92
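The two-group comparison described above can be illustrated with a short sketch. It assumes each player has a numeric pre-test and post-test score; the function names and the scores below are hypothetical and are not drawn from the AA83 data. The sketch only compares average score gains between a test group and a control group; it does not establish statistical significance, which would require an appropriate significance test and an adequate sample size.

```python
from statistics import mean


def mean_gain(pre, post):
    """Average per-player change from pre-test to post-test score."""
    if len(pre) != len(post):
        raise ValueError("pre and post must list the same players in the same order")
    return mean(after - before for before, after in zip(pre, post))


def gain_difference(test_pre, test_post, control_pre, control_post):
    """Mean gain of the test group minus mean gain of the control group."""
    return mean_gain(test_pre, test_post) - mean_gain(control_pre, control_post)


# Hypothetical scores for illustration only (not AA83 results).
test_pre = [4, 5, 6, 5]        # played the wargame
test_post = [7, 8, 8, 7]
control_pre = [5, 4, 6, 5]     # did not play the wargame
control_post = [6, 5, 6, 6]

print(mean_gain(test_pre, test_post))
print(mean_gain(control_pre, control_post))
print(gain_difference(test_pre, test_post, control_pre, control_post))
```

Subtracting the control group's gain is one simple way to separate improvement attributable to gameplay from improvement that would appear anyway, for example from taking the same test twice.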

Observation

Observation is another promising assessment methodology for wargaming or other scenario-based teaching methods. This assessment can take the form of either personal observation or real-time, computer-based data capture.93 Furthermore, observation in the context of wargaming could be direct or indirect. Direct observation includes real-time observation while the player or person being assessed is aware of the observation.94 Indirect observation is conducted in an environment where the players are not aware of the observer, providing the benefit of not biasing the players’ actions at the expense of being more difficult to conduct.95

Studies on the utility of observation as an assessment methodology in scenario-based simulations have concluded that scenario-based training provides good educational value.96 In reaching that conclusion, one such study applied two assessment methods. The first was observation of video-recorded performance during the simulation, and the second was reflective interviews with participants. The study concluded, primarily through observation, that students learned both through the performance of “clinically relevant” activities and through the development of emergent behaviors based on interaction with other participants.97 In essence, the study identified favorable learning outcomes based on what students were “doing” when engaged in the active experimentation step of the learning cycle. However, the author acknowledges that the study and the resultant data were limited in part by the amount of time and resources required to complete the study, as well as by the focus on more experienced learners as opposed to novices.

Assessment methodologies have also included real-time observation of students to assess educational outcomes; however, those efforts have focused on computer-based games separate and apart from the wargaming context or have been applied to pedagogy rather than adult educational models.98 That said, the use of computer-based, real-time data capture can be viewed as a form of real-time observation as an assessment tool. In relying primarily on observation, a number of studies have concluded that wargames or serious games have educational utility.99

Direct observation, as applied in this context, can provide insight into those who learn through the concrete experience or active experimentation learning styles and has the benefit of providing players an uninterrupted setting in which to play the wargame.100 Furthermore, assuming that the student observation checklist is appropriately crafted, the data gathered through direct observation can indicate real changes in behavior or thinking based on the game context.101 However, these changes in player behavior may be artificial and not readily translatable to actual practice.102 Furthermore, under direct observation the players will be aware of the observer, which may present a distraction.103 Lastly, observation may not provide the “why” for certain player actions.104 More broadly, there remain gaps in the literature discussing the design and assessment of wargaming specifically, as a subset of serious games.105

Lessons Learned: Effective Implementation of Educational Wargames

The design team sought to use specific observations of student-designed wargame AA83 to draw larger conclusions about the educational utility of wargames, the design methodology needed to produce an effective educational tool, and the best methods to assess learning outcomes of those with various learning styles. Initial data support two broad conclusions and point to two areas of necessary improvement. The first conclusion is that the game has educational utility, particularly in the areas tied to learning objectives associated with constructing a strategy, integrating all elements of national power, and dealing with a complex security environment.106 The second conclusion is that three of the assessment tools provided usable and relevant data to assess the educational utility of the game: the student survey, the student observation checklist, and the text analysis matrix.

The first area of improvement identified by the student design team is that post-play assessment tools—in the case of AA83 the guided discussion in particular—can provide an integral part of the learning experience in addition to assessment of learning outcomes. The second area highlights two procedural improvements to increase congruence between the team’s learning objectives, game design, and assessment tools. Development of these three components should proceed sequentially, completing one component as far as possible, before moving to the next. Additionally, each of those components should be developed iteratively, testing through formal or informal evaluation and refining to ensure that the game effectively teaches the desired learning objectives and that assessment tools effectively gauge how well students learned the desired concepts.

The Value of Post-Play Assessment to Reflective Observation

One of the critical lessons learned for the design team was the importance of post-play assessment to stimulate the reflective observation step of the learning cycle and the corresponding assimilating and diverging learning styles. The survey provided some degree of reflective value; however, the guided discussion provided a high degree of feedback on where the game was successful both as an enjoyable undertaking and as an educational tool. The most commonly discussed themes related to the ways in which a player’s early actions could permit or preclude subsequent options, the necessity to balance efforts across multiple strategic priorities, and the ways in which the player’s own strategy was enabled or frustrated by that of the opponent.

The guided discussion also allowed multiple players to discuss and integrate concepts from each other. This group reflection helped illustrate differences between the strategies and approaches each player took, in particular as the U.S. and Soviet player decks are designed to play somewhat differently. The U.S. player deck has more interconnected effects, with a potentially larger “payoff,” in an effort to simulate a qualitative capability advantage. The Soviet deck, by contrast, has lower resource costs and less interconnected effects, enabling a faster tempo or decision cycle. It became apparent during a number of guided discussions that, in addition to its utility as an assessment tool, the group-guided discussion is a valuable educational component as a form of group reflection to better integrate the learning objectives.107

In addition to the survey and guided discussion results, a number of players commented during their play session that during the first play iteration they were focused on learning the rules and understanding the mechanics of the game. Several players commented during their games that subsequent iterations would allow them to better focus on achieving their strategic priorities, balancing risks and opportunities, and assimilating the learning objectives. This conclusion is supported by observation of subsequent play.

Complementary Design of Curriculum, Game Components, and Assessment Tools

It is also critical for all curriculum and assessment materials to be complementary in both concept and verbiage. Learning objectives and assessment tools, in particular the pre-/post-test for student-designed wargame AA83, were taken from the identified game context documents as well as CSC course cards related to strategic decision making.

As the game only reached the prototype stage, game design does not appear to have fully supported the learning objective related to an ambiguous information environment and the ways in which imperfect information can complicate decision making. In addition, specific terminology in the post-test was not similarly incorporated into the game.108 Because the learning objectives and pre-/post-test used language that does not appear in the game materials, there may be some question as to whether, and how effectively, the game teaches those concepts. To effectively teach the learning objectives as well as assess educational outcomes, all elements of the curriculum, game, and assessment strategy should complement one another. This can be achieved by combining sequential and iterative approaches to all elements of the wargame and its assessment methodology.

Sequential and Iterative Approaches to Game Design and Assessment Methodology

Continued refinement and iteration of the game and assessment tools should follow each play test session. In the interest of data consistency, the design team decided to forgo adjusting the assessment tools after each data-gathering play test session. This was despite the fact that it became apparent relatively early in the assessment phase that there was a lack of consistency between the game and the pre-/post-test. Another way to remedy this would have been to conduct more non-data-gathering play tests while maintaining an eye toward the assessment tools. This approach would likely ensure better linkages between learning objectives, game design, and assessment tools, in particular ensuring that the larger curriculum, the game, and assessment tools use the same terminology.

Another potential solution would be to develop the assessment tools once the game design is finalized. The student design team was somewhat constrained by the timelines of the academic year, as well as the need in several instances to conduct activities virtually because of CSC or other health protection concerns. This resulted in the team attempting to develop the game context/scenario, learning objectives, overall game design, and assessment methodology in parallel. While continued iteration of all of these various aspects would certainly have contributed to a better product, a sequential approach would have been preferable. The preferred course of action would have been to settle on the educational objectives and game context before moving on to game design and to finish the game design prior to developing the assessment methodology and tools.

Design of an educational wargame must begin with the overall curriculum and clearly stated learning objectives. Once these are settled, the design team should move on to game design and design game components and mechanics to complement those learning objectives. When the game design is finalized, the design team can develop the assessment tools and methodology and clearly link those assessment tools to game design and thereby to the learning objectives. In the student design team’s experience for AA83, constructing the game and the assessment tools in parallel contributed to the difficulty in coordinating these efforts.

Conclusion

Student wargame AA83 provides educational utility in teaching concepts related to strategy, balancing of instruments of national power, and the role of ambiguity or imperfect information in state competition. The game accomplishes this through engagement of all four learning experiences. Rules familiarization and deck building stimulate those who learn best through abstract conceptualization. Concrete experience and active experimentation are engaged primarily through gameplay and in-game feedback, while reflective observation is engaged primarily post-play through the post-test, survey, and guided discussion. Thus, the assessment tools used to determine the educational utility of AA83 are also a critical component of the educational experience by engaging all four learning experiences and thus accommodating multiple learning styles.

Student wargame AA83 is also in need of refinement. The wargame could better coincide with the learning objectives, and the assessment tools could better correspond to the wargame. This disconnect in substance and terminology contributed to suboptimal assessment data and likely to less than ideal educational utility. An effectively designed wargame should implement two procedural improvements to remedy these issues. First, the design team should take a sequential approach to the development and clarification of learning objectives, game design, and assessment methodology. Each component should be developed as close to final form as possible before moving on to the next. Second, an iterative approach to the design of each of these components is critical as more data and insights are gathered from play testing and preliminary or informal data collection and assessment, thereby improving the utility of each specific component.

Endnotes

- Gen David H. Berger, Commandant’s Planning Guidance: 38th Commandant of the Marine Corps (Washington, DC: Headquarters Marine Corps, 2019).

- Planning, Marine Corps Doctrinal Publication (MCDP) 5 (Washington, DC: Headquarters Marine Corps, 1997); and Joint Planning, Joint Publication (JP) 5-0 (Washington, DC: Joint Chiefs of Staff, 2017).

- Specifically, the Gray Scholars Program—Educational Wargaming Seminar.

- Preliminary data collection indicates that student wargame AA83 demonstrates educational utility across all three learning objectives. The learning objectives used in gathering data for AA83 were: learning objective 1: Player identifies that the execution of a national strategy requires balancing of priorities, risks, and resources across all elements of national power; learning objective 2: player understands the dynamic and changing nature of the security environment in which actions are taken; learning objective 3: player appreciates the role of ambiguity/imperfect information in executing a strategy. These learning objectives were also included in the game rule book/preparatory materials, so that students would understand the educational objectives before beginning gameplay. A copy of AA83—along with all attendant game materials and rulebook—is maintained at Marine Corps University’s Brute Krulak Center for Innovation and Future Warfare.

- Alice Y. Kolb and David A. Kolb, “Learning Styles and Learning Spaces: A Review of the Multidisciplinary Application of Experiential Learning Theory in Higher Education,” in Learning and Learning Styles: Assessment, Performance and Effectiveness, ed. Noah Preston (New York: Nova Publishers, 2006).

- Learning, MCDP 7 (Washington, DC: Headquarters Marine Corps, 2020), 1-1.

- Learning, 1-9, 3-4, 3-14, 10.

- Learning, 1-12.

- Learning, 2-4–2-6.

- Malcolm S. Knowles, “The Modern Practice of Adult Education: Andragogy versus Pedagogy,” in The Modern Practice of Adult Education: From Pedagogy to Andragogy, rev. ed. (New York: Cambridge, Adult Education Company, 1980).

- Malcolm S. Knowles et al., Andragogy in Action: Applying Modern Principles of Adult Learning (San Francisco, CA: Jossey-Bass, 1984).

- Stephen D. Brookfield, “Pedagogy and Andragogy,” in Encyclopedia of Distributed Learning, ed. Anna DiStefano, Kjell Erik Rudestam, and Robert Silverman (Thousand Oaks, CA: Sage Publications, 2003), https://www.doi.org/10.4135/9781412950596.n125.

- Knowles, “The Modern Practice of Adult Education.”

- Jack Mezirow, “Learning to Think Like an Adult: Core Concepts of Transformation Theory,” in Learning as a Transformation: Critical Perspectives on a Theory in Progress, ed. Jack Mezirow (San Francisco: Jossey-Bass, 2000).

- Mezirow, “Learning to Think Like an Adult”; and Jack Mezirow, “Transformative Learning in Action: Insights from Practice,” New Directions for Adult and Continuing Education, no. 74 (1997): 5–12, https://doi.org/10.1002/ace.7401.

- Mary Ann Corley, Poverty, Racism, and Literacy (Columbus, OH: ERIC Clearinghouse on Adult, Career and Vocational Education, 2003), 243.

- Heidi Silver-Pacuilla, “Transgressing Transformation Theory,” National Reading Conference Yearbook 52 (2003): 356.

- Edward W. Taylor, The Theory and Practice of Transformative Learning: A Critical Review (Columbus, OH: Center on Education and Training for Employment, 1998); and Patricia Cranton, “Teaching for Transformation,” in Contemporary Viewpoints on Teaching Adults Effectively, ed. Jovita M. Ross-Gordon (San Francisco, CA: Jossey-Bass, 2000).

- Jennifer L. Kisamore et al., “Educating Adult Learners: Twelve Tips for Teaching Business Professionals” (unpublished report, June 2008).

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Johan Elg, “Wargaming in Military Education for Army Officers and Officer Cadets” (PhD thesis, King’s College London, September 2017).

- Saul McLeod, “Kolb’s Learning Styles and Experiential Learning Cycle,” Simply Psychology, updated 2017.

- McLeod, “Kolb’s Learning Styles and Experiential Learning Cycle.”

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Curtiss Murphy, “Why Games Work and the Science of Learning” (paper presented at MODSIM World 2011 Conference and Expo, Virginia Beach, VA, August 2011).

- Murphy, “Why Games Work and the Science of Learning.”

- Murphy, “Why Games Work and the Science of Learning”; and Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Peter Perla and Ed McGrady, “Why Wargaming Works,” Naval War College Review 64, no. 3 (Summer 2011).

- Murphy, “Why Games Work and the Science of Learning.”

- Jeff Wong, “Wargaming in Professional Military Education: A Student’s Perspective,” Strategy Bridge, 14 July 2016.

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets”; Murphy, “Why Games Work and the Science of Learning”; and Philip Sabin, Simulating War: Studying Conflict through Simulation Games (London: Bloomsbury, 2012), 37.

- Perla and McGrady, “Why Wargaming Works.”

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Murphy, “Why Games Work and the Science of Learning.”

- Murphy, “Why Games Work and the Science of Learning”; Elg, “Wargaming in Military Education for Army Officers and Officer Cadets”; and Yu Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade,” International Journal of Computer Games Technology (2019), https://doi.org/10.1155/2019/4797032.

- Murphy, “Why Games Work and the Science of Learning.”

- Sabin, Simulating War; James Lacey, “Wargaming in the Classroom: An Odyssey,” War on the Rocks, 19 April 2016; Elg, “Wargaming in Military Education for Army Officers and Officer Cadets”; and Murphy, “Why Games Work and the Science of Learning.”

- Murphy, “Why Games Work and the Science of Learning.”

- Murphy, “Why Games Work and the Science of Learning.”

- Perla and McGrady, “Why Wargaming Works.”

- Murphy, “Why Games Work and the Science of Learning”; and Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade.” Serious games are those played for purposes other than solely for entertainment. Other such purposes for serious games can include education, assessment of individuals, or testing new concepts. Alexis Battista, “An Activity Theory Perspective of How Scenario-Based Simulations Support Learning: A Descriptive Analysis,” Advances in Simulation 2, no. 23 (2017): https://doi.org/10.1186/s41077-017-0055-0.

- Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade.”

- Perla and McGrady, “Why Wargaming Works”; and Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Though, the design team expanded the effort to Cold War statecraft writ large. Summary of the 2018 National Defense Strategy of the United States of America: Sharpening the American Military’s Competitive Edge (Washington, DC: Department of Defense, 2018); and see Marine Corps Bulletin (MCBul) 5400, Establishment of Information as the Seventh Marine Corps Warfighting Function (Washington, DC: Headquarters Marine Corps, 17 January 2019).

- The U.S. player’s goals are to balance: (1) military superiority, (2) détente, and (3) North Atlantic Treaty Organization (NATO) Alliance. The Soviet Union player’s goals are to balance: (1) intelligence collection, (2) bolstering the Eastern Bloc, and (3) maintaining a first strike capability. Different national security strategy cards would require the players to achieve different values on a scale of 10 for each of these priorities to achieve “win conditions.”

- Ben B. Fischer, “Threat Perception, Scare Tactic, or False Alarm?: The 1983 War Scare in US-Soviet Relations,” CIA Studies in Intelligence (ca. 1996): 61.

- Fischer, “Threat Perception, Scare Tactic, or False Alarm?,” 61.

- Ben B. Fischer, A Cold War Conundrum: The 1983 Soviet War Scare (Washington, DC: Central Intelligence Agency, 1997).

- Fischer, “Threat Perception, Scare Tactic, or False Alarm?,” 69. Some of these elements included participation by National Command Authority in the United States and UK, as well as a “full-scale” mock nuclear weapons employment.

- See Colin L. Powell memorandum, “Significant Military Exercise NIGHT TRAIN 84” (8 December 1983). Exercise Night Train 1984 was another nuclear command post exercise, which included live fly portions by Strategic Air Command, live launches of U.S. Navy UGM-73 Poseidon missiles, a concurrent (though separate) North American Aerospace Defense (NORAD) exercise, as well as participation by the Office of the Secretary of Defense and Joint Chiefs of Staff. Colin L. Powell memorandum, “Significant Military Exercise NIGHT TRAIN 84”; and Ben B. Fischer, “Threat Perception, Scare Tactic, or False Alarm?,” 62.

- Francis H. Marlo (dean of academics, Institute of World Politics), interview by Supervisory Special Agent Christopher Sneed, Maj Samuel Robinson, and Maj Pete Combe, 18 November 2020, hereafter Marlo interview.

- Marlo interview; and Fischer, A Cold War Conundrum.

- Bud McFarlane (national security advisor), interview by Don Oberdorfer (unpublished), 18 October 1989, hereafter McFarlane interview.

- McFarlane interview.

- Summary of the 2018 National Defense Strategy of the United States of America, 2.

- Marlo interview.

- Summary of the 2018 National Defense Strategy of the United States of America, 1, 4.

- Tami Davis Biddle, “Coercion Theory: A Basic Introduction for Practitioners,” Texas National Security Review 3, no. 2 (Spring 2020): 106, http://dx.doi.org/10.26153/tsw/8864; Robert Jervis, “Deterrence and Perception,” International Security 7, no. 3 (Winter 1983): 3, https://doi.org/10.2307/2538549; and Janice Gross Stein, “The Micro-Foundations of International Relations Theory: Psychology and Behavioral Economics,” International Organization, no. 71 (2017): 256, 259, https://doi.org/10.1017/S0020818316000436.

- Thomas G. Mahnken, Secrecy & Stratagem: Understanding Chinese Strategic Culture (Sydney, Australia: Lowy Institute for International Policy, 2017), 3, 12, 16.

- Marlo interview.

- Marine Air-Ground Task Force Information Operations, Marine Corps Warfighting Publication (MCWP) 3-32 (Washington, DC: Headquarters Marine Corps, 2016), 3-7; Information Operations, JP 3-13 (Washington, DC: Joint Chiefs of Staff, 2014), I-3, II-1; and Marine Air Ground Task Force Information Environment Operations: Concept of Employment (Washington, DC: Headquarters Marine Corps, 2017), 24.

- Marine Air-Ground Task Force Information Operations, 3-3; Information Operations, VI-1; and Marine Air Ground Task Force Information Environment Operations, 22.

- Jervis, “Deterrence and Perception.”

- This concept was perhaps best demonstrated by an occasion in which the Soviet player was postured to win, having achieved win conditions in two strategic priorities, while the U.S. player had achieved win conditions in only one. On the Soviet player’s last turn, they played a card, which resulted in the U.S. player achieving win conditions in all three strategic priorities and thus a U.S. player overall win. This was further highlighted during the group discussion with all players from this session, enabling the entire group to learn from this single experience.

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Kisamore et al., “Educating Adult Learners.”

- Margaret C. Harrell and Melissa A. Bradley, Data Collection Methods: Semi-Structured Interviews and Focus Groups (Santa Monica, CA: Rand, 2009).

- Mezirow, “Learning to Think Like an Adult”; Kolb and Kolb, “Learning Styles and Learning Spaces”; and Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Shamus P. Smith, Karen Blackmore, and Keith Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” in Serious Games Analytics: Methodologies for Performance Measurement, Assessment, and Improvement, ed. Christian Sebastian Loh, Yanyang Sheng, and Dirk Ifenthaler (New York: Springer International, 2015); and Harrell and Bradley, Data Collection Methods, 10–11.

- Harrell and Bradley, Data Collection Methods, 10.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” 47.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” 47; and Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research.”

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Harrell and Bradley, Data Collection Methods, 10; and Elg, “Wargaming in Military Education for Army Officers and Officer Cadets,” 48.

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets,” 82.

- The student design team played a number of iterations of the game during development to highlight weaknesses and necessary refinements. In addition, the design team solicited player feedback from other students at Marine Corps Command and Staff College (both within and external to the Gray Scholars Program) for the same purpose. These play sessions were focused on refining the game, rather than for educational purposes or data gathering for assessment. These pre-data gathering sessions will be referred to subsequently as “play-testing.”

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Elg, “Wargaming in Military Education for Army Officers and Officer Cadets.”

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” 47.

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” 48.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” 49.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Games Research,” 49.

- Murphy, “Why Games Work and the Science of Learning.”

- LtCol Richard A. McConnell, USA (Ret) and LtCol Mark T. Gerges, USA (Ret), “Seeing the Elephant: Improving Leader Visualization Skills through Simple War Games,” Military Review 99, no. 4 (July–August 2019): 114.

- Sylvester Arnab et al., “Mapping Learning and Game Mechanics for Serious Game Analysis,” British Journal of Educational Technology 46, no. 2 (March 2015): 405–6, https://doi.org/10.1111/bjet.12113.

- Arnab et al., “Mapping Learning and Game Mechanics for Serious Game Analysis,” 407–8.

- Dirk Ifenthaler and Yoon Jeon Kim, eds., Game-Based Assessment Revisited (Cham, Switzerland: Springer Nature AG, 2019), https://doi.org/10.1007/978-3-030-15569-8; and Battista, “An Activity Theory Perspective of How Scenario-Based Simulations Support Learning.”

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research,” 34–35.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research,” 34–35.

- Battista, “An Activity Theory Perspective of How Scenario-Based Simulations Support Learning.”

- For example, other students, role-play patients, or the presence of instructors. The use of serious gaming and scenario-based training appears relatively common in the medical training community. See Battista, “An Activity Theory Perspective of How Scenario-Based Simulations Support Learning”; and Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade.”

- Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade”; Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research”; Dirk Ifenthaler et al., “Assessment in Game-Based Learning Part 1 & 2” (conference, Association for Educational Communications and Technology, January 2012); and Ifenthaler and Kim, Game-Based Assessment Revisited.

- Ifenthaler and Kim, Game-Based Assessment Revisited; and Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade.”

- Kolb and Kolb, “Learning Styles and Learning Spaces”; and Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research,” 35.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research,” 35.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research,” 35.

- Smith, Blackmore, and Nesbitt, “A Meta-Analysis of Data Collection in Serious Game Research,” 35.

- Contra, for example, Harrell and Bradley, Data Collection Methods, 82.

- Zhonggen, “A Meta-Analysis of Use of Serious Games in Education over a Decade.”

- Learning objectives 1 and 2.

- Kolb and Kolb, “Learning Styles and Learning Spaces.”

- For example, the term mirror imaging. Thus, player feedback on this particular question was inconsistent with the game design team’s understanding or application of that term, though not necessarily incorrect.