Expeditions with MCUP

Strong Artificial Intelligence

The Future of War and the Arrival of Techno-Eschatology

Ben Zweibelson, PhD

12 February 2026

https://doi.org/10.36304/ExpwMCUP.2026.01

Abstract: The next decade or less should see the arrival of a new, powerful form of artificial intelligence (AI) that exceeds human cognitive ability, scale, and scope. This will result in an immediate and total disruption to strategic thought and the future of war. This article presents several new concepts, including “techno-eschatology” as a necessary new war philosophy that addresses how artificial general intelligence (AGI) cannot be contained or managed using conventional strategic or military frameworks. This article deconstructs three competing “AI tribes” to frame this existential debate, in which AGI becomes the ultimate asymmetric weapon, exceeding even nuclear precedents. Furthermore, this article explores how AGI-driven conflict might emerge as a “phantasmal war” in which the destruction of societal cohesion and the fracturing of a shared reality are far more devastating than bombs and bullets. The article concludes with a call to action: military leaders and policymakers need to reframe AGI competition not as an extension of existing arms races but as an existential contest for the future of human civilization.

Keywords: artificial intelligence, AI, strategy, philosophy, sociology, technology, ethics

Introduction

In late 2026, OpenAI announces that it has achieved preliminary artificial general intelligence (AGI), or “strong artificial intelligence,” and provides clear scientific evidence that the artificial intelligence (AI) is extraordinarily capable, exceeding even the smartest humans. Other technology companies make similar advancements while Western governments begin to form new AI policies and nonproliferation agreements to prevent adversarial nations and rogue actors from gaining access to or replicating the technology. One plank in the AI policy is that no AGI entity can harm any human being, regardless of national affiliation. The commercial sector protests that these regulations will never work, yet policymakers are pressured to respond to public fears and distrust. Some AI companies sue to prevent a U.S. governmental takeover, and the U.S. Supreme Court weighs in to allow governmental seizure due to “existential weapon-like threats to civilization.”

In 2027, China announces its own AGI breakthrough, apparently reached through a combination of theft from and reverse-engineering of various Silicon Valley labs and companies. That same year, the U.S. government announces emergency powers to seize control of OpenAI and similar AGI systems. The period of 2028–30 produces exceptional technological advancements as new AGI capabilities are channeled toward major national problems, including healthcare, economic development, startling scientific discoveries, and advanced defense systems. Societies able to seize control of some AGI-like technology achieve a century’s worth of technological and scientific progress in several years. Although the international community calls for universal sharing and distribution of this new power, the existing political and cultural tensions between the Free World and authoritarian or ideologically hardline regimes generate a new “AI Cold War” of profound prosperity coupled with advanced war preparations. Most non-AI-enabled societies benefit indirectly but continue to fall behind.

In 2030, American AGI systems produce nearly a century’s worth of scientific progress in cancer treatment, poverty reduction, spacecraft propulsion and heat shield material development, and new nuclear defense capabilities that render any incoming hypersonic or other weapon inert. Economic production by AGI lifts nearly all citizens into unprecedented wealth, comfort, and access to knowledge. The AGI entity implores U.S. leadership to allow it unfettered access to all defense systems, insisting that only AGI control can prevent Chinese AGI attempts to destroy the Free World. On 27 May 2030, the American AGI entity assumes total control of U.S. national security and homeland defense systems. Within 12 hours, the Chinese AGI determines a near 100-percent threat potential and launches a comprehensive global response. In this new, phantasmal sort of war, the two AGI systems destroy one another invisibly, crumbling civilization around the humans who cannot be directly harmed yet will now lose the technical paradise ushered in by these digital superbeings. Within hours, virtually all advanced technology and architecture are rendered unusable, with no way for humanity to reverse-engineer or preserve them. The human race is cast back into the seventeenth century overnight, and neither side is sure which one has won.

Superintelligence, or artificial general intelligence (AGI), is the creation of an artificial intellect that greatly exceeds the cognitive abilities of humans in all relevant or useful domains of interest.[1] Several years ago, few people, even in Silicon Valley, took the security threat of AGI (strong AI) seriously. Early large language models (LLMs) fumbled about and made massive errors in logic, and AI video challenges such as “Will Smith eating spaghetti” became hilarious and disturbing internet memes. Advanced AI used to be the stuff of twentieth-century science fiction and horror entertainment, met with eye rolls from policymakers. Until recently, pragmatic strategists, military experts, and foreign policy wonks could chortle and snort as AI futurists were escorted out of the room. Now, a tribal battle is beginning between different AI factions, splintering earlier debates between technological progressives and neo-Luddites who see only danger in these new and strange developments.[2] The next five years may well set the final chessboard for whether humanity survives, prospers at some infinite level of new wealth and access, or self-exterminates in a final destructive conflict. AI researchers and the strategic defense community now urge policymakers to take the strong AI pathway very seriously.[3] Readers may first want to reflect critically on which AI camp or tribe they belong to. The major ontological arguments on what AI represents are offered next. Where one stands on AI really depends on where one sits.[4]

This article argues that AGI’s emergence in the next decade (or sooner) demands what is presented here as a “techno-eschatological war philosophy” for the Free World both to win the AGI race and to avert the existential risks that remain for any winner achieving artificial superintelligence. To accomplish this monumental security task, the military profession must advise policymakers, commercial enterprise, and academia on how and why these existential dangers must be framed differently from previous strategic issues such as nuclear weapons, hypersonics, or chemical warfare. The three AI “tribes” and certain technological and weaponry asymmetries further complicate this emerging technological landscape, where different beliefs about AI produce distinct and, at times, incommensurate strategic outlooks on what might unfold in the coming decade. There are far more opinions and outlooks on AI than these three, but they represent the bulk of informed perspectives among experts in technology, strategy, policy, and human cognition. Although some military theorists contemplate advanced technology and AI in defense contexts, most assume that new and sophisticated weaponry, equipment, or sensors reflect the ever-changing character of warfare, leaving the unchanging “nature” of war unmolested by human progress. This article will carefully challenge some of these foundational ideas on conflict through a deliberate yet theoretical exploration of what AGI really might represent.

First, this article will introduce a catchy yet provocative metaphoric device for the multiple AI tribes that espouse different and often contrary strategic outlooks. It will then differentiate between existing AI and AGI, which will be something far more powerful, disruptive, and dynamic. AGI will, as this article shall explain, carry new existential challenges that meet and exceed even the nuclear ones with which modern societies are relatively familiar. The abstract notion of war extending beyond human awareness and comprehension will also be covered, where conflict takes on a phantasmal and ethereal sort of expression. These are provocative and disruptive concepts that require new ways of thinking about emerging strategic risk and technologically disruptive developments in international competition. This leads to the question of how best to metaphorically capture the various viewpoints articulated (often sensationally) by different factions in 2026.

With a flair for alliteration, the three warring AI tribes might be characterized as the boomers, doomers, and groomers.[5] Boomers are those in denial of where AI is headed. They point to some law of diminishing returns on AI progress, an exhaustion of the useful data needed for training, or some other physical law that will prevent AI from becoming anything other than a useful technology controlled by humans. Boomers dominated until less than five years ago. In a 2014 AI survey of experts, 41 percent answered “never” to the question of when or if AI might simulate learning and every other aspect of human intelligence (becoming AGI).[6] The boomer camp has shrunk recently but remains a powerful and popular group in 2026. Some might dismiss this article outright or argue that its anticipated dates should shift significantly further into the future. Certain boomer arguments also hold that “technology is part of how war’s character shifts with differing contexts . . . yet war itself is naturally ordered as a physical science is; war itself does not change.”[7] This reasoning may be more relevant when humans battle other humans with an assortment of resources, ideas, and technological tools that obey the human hand. It becomes far more unstable when new technological tools begin to conceptualize reality and war itself in ways that their human designers could not anticipate and can no longer comprehend.

Doomers envision an AGI apocalypse, where either an adversarial and authoritarian regime wins the AGI race and unleashes it on the rest of the world, or where AGI quickly decides to enslave or destroy humanity. Stephen Hawking, perhaps the most famous scientist since Albert Einstein, warned that “the development of full artificial intelligence could spell the end of the human race.”[8] AI pioneers Eliezer Yudkowsky and Nate Soares published their aptly titled If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All in late 2025 with appropriate alarm about the future of humanity. Encapsulating the AI doomers’ cause with deep AI experience and knowledge, they declare:

If any company or group, anywhere on the planet, builds an artificial superintelligence [AGI] using anything remotely like current techniques, based on anything remotely like the present understanding of AI, then everyone, everywhere on Earth, will die.

We do not mean that as hyperbole. We are not exaggerating for effect. We think that is the most direct extrapolation from the knowledge, evidence, and institutional conduct around artificial intelligence today.[9]

Another doomer variation offered by certain groups involves humans avoiding or escaping the AGI Armageddon anticipated by Yudkowsky and Soares. There might be some final resistance by neo-Luddite bands of AI survivalists who enter into a destructive war with the intelligent machines, which is essentially how multiple science-fiction movie franchises continue to entertain audiences.[10] Perhaps some wealthy humans might escape “off the grid” and live in some remote corner of the planet while the rest of the world burns in AI destruction or is assimilated into some invisible prison (depicted in the science-fiction movie The Matrix) or dystopian future.[11]

Finally, the AI groomers, well established in the history of AI ethics and development, are advocates who recognize that AGI is impossible to stop but believe that one should not proclaim that the sky is falling, as AI doomers do. Strong AI will unfold and change the world (for better or for worse, depending on how the matter is handled). However, groomers are convinced that if it is managed carefully and with precision, these future digital gods will bestow upon humanity a new paradise of endless resources and happiness. Groomers wish to prepare humanity to unleash AI carefully to harness the full power of the genie of the digital lamp. Some AI titans such as Elon Musk play both sides of the “doom and groom” future, where AGI may usher in a “Star Trek future . . . [with] a level of prosperity and hopefully happiness that we can’t quite imagine yet” or a “Terminator” future if done improperly.[12] Vincent C. Müller and Nick Bostrom, writing in 2012–13, conducted an extensive poll of AI experts and found that one in three believed in an AI doomer outcome that harms or eliminates humanity.[13] Dario Amodei (CEO of Anthropic) and Sam Altman (CEO of OpenAI) both describe an incoming “intelligence age” in which AGI can amplify human potential and rid the world of many terrible and tragic problems that humans alone cannot solve. Former chief business officer of Google X, Mo Gawdat, straddles the doomer-groomer divide, arguing that “the next fifteen years [of AGI development and experimentation] will be hell before we get to heaven. If we survive the chaos, a utopian AI future is possible.”[14]

Boomers continue to stand somewhat comfortably in early 2026, when LLMs are scoring merely around 42 percent on Humanity’s Last Exam, considered the ultimate AI benchmark in the industry.[15] Elon Musk announced that Grok 4 (Grok4H, with external aids for web searches) scored a remarkable 44.4 percent on the test, currently the highest on record.[16] These models are already dominating the GRE in all subjects and hitting perfect scores on the SAT.[17] Yet, AI boomers argue that high scores alone are insufficient and that AI cannot excel in physical reality the way humans do. Doomers and groomers take up the mantle here, arguing that once AGI is realized, AI entities will rapidly advance all robotics pathways and quickly enter the same physical reality in which slower, less intelligent humans go about their lives. Today, many corporate leaders acknowledge that AI is going to change not only certain parts of civilization and the global economy but virtually all of it. If AI “will change literally every job” in the world in the next few years, what might happen when AGI is unleashed?[18]

To properly frame the national security concerns of strong AI, readers must first appreciate this shrinking divide between AI systems in 2025 and the potential arrival of truly strong AI, or AGI. Otherwise, prevailing AI boomer arguments remain intractable, supported by centuries of human-enabled progress and prosperity. Yet, human beings still live in a non-AGI world governed and steered by fellow human beings and their artificially intelligent yet flawed machines. The AGI world, once introduced, will usher in revolutionary changes unlike anything in humanity’s collective history.[19] Imagine the transformative qualities of Gutenberg’s printing press (ca. 1440) combined with the evolutionary rise of mammals to dominance (beginning 66 million years ago), the shift from hunting and gathering to agriculture (12,000 years ago), and the cognitive development of language (between 70,000 and 30,000 years ago), all added together and unfolding in less than a decade. Even this may not match what AGI will do to reality. But first, it is necessary to distinguish between the AI the world knows and plays with today and what AGI is going to bring forth.

Explaining AI and AGI: Going from the Atomic to Thermonuclear

AI is both the ultimate human invention and, unfortunately, the greatest weapon system humans will ever create. Strong AI is still theoretical, while existing AI is quite limited despite the amazing performances and benchmarks it continues to break through. That said, the difference between the world today, where AI is prolific yet tied to specific and narrow applications, and the future world with AGI cannot be overstated. Imagine the current LLM capabilities of a ChatGPT5 or Grok 4 paired with a human prompter. Arguably, these AI systems can accelerate a PhD student’s work in significant ways, but they cannot yet independently produce a doctoral dissertation that can compete with human ones. Here, AI is an enabler, but it is unable to match human outputs in highly demanding cognitive and creative endeavors. Intelligence matters. Bostrom states that humans are the dominant species on Earth because of their slightly increased set of faculties (mental and certain physical ones such as opposable thumbs) compared to the rest of the ecosystem. Current AI systems augment but cannot outperform the smartest humans except in narrow and often fragile tasks.[20]

Once future AI systems manage to produce one doctoral output that does compete with human examples, humanity will quickly enter AGI territory. Suppose an LLM in 12 months’ time could generate a PhD output indistinguishable from human ones but complete it in one month rather than on the multiyear track for human students. That AI system might then duplicate itself so that 1,000 clones produce 1,000 doctoral outputs in the same month, and fold what they learn back into improvements that let it scale even faster.[21] What sort of raw intellectual and scientific power might a company or government (or terror group) have if an AI system could churn out 1,000,000 unique and new doctoral dissertations across every discipline while also folding that new knowledge back into its own self-improvement? Scale this further and then weaponize it.[22] What sort of new destabilizing threat does an AI system represent when it produces 1,000,000 doctoral dissertations in a week, then in a day, and then in an hour?[23] The answer to that question likely depends on which nation (or commercial company) on this planet achieves AGI first.

In his AI thesis aptly titled “Situational Awareness: The Decade Ahead,” Leopold Aschenbrenner employs a useful metaphoric device for explaining AGI realization: going from atomic weapons to thermonuclear ones.[24] The two American atomic bombs used in 1945 unleashed destructive power that had previously required waves of bombers dropping conventional munitions. Yet, seven years later, the hydrogen bomb emerged with a destructive force greater than all the bombs dropped in World War II combined. If AI represents the power of the first atomic weapons, AGI will be what thermonuclear weapons ushered in: a world with existential weapons capable of wiping out all of civilization in one final spasm of techno-violence. AGI transforms reality with a near-infinite scaling effect, coupled with the terrifying reality that AGI used for evil will be unstoppable for those lacking AGI as a defense or deterrent. The AI boomer tribe will dismiss these ideas and counter that humans will “always be in the loop and able to control” any form of AI. In the boomer view, even if strong AI were reached, its human inventors would certainly safeguard and insulate it so that no lab leaks occur, and if push comes to shove, humans could starve AI out, removing power and resources until a rogue digital system runs out of gas and runway. This appears to conflate new strategic thinking with the hope that earlier strategies will still be relevant in the AGI world. Here, hope becomes a four-letter word.

The AI boomers’ argument continues to shrink, as new AI achievements blast through previous barriers nearly as quickly as the boomers can erect them. Now, their apparent last stand is that AI cannot match human creativity and ingenuity; for example, the Mona Lisa could only be created by a human, not a machine. Machines might copy it, but they can never exceed that sort of human mastery. Perhaps the AI boomers are correct, and AI progress will eventually hit some permanent wall just short of AGI and total dominance over any human ability. Aschenbrenner and others discuss a “data wall” in which ever-larger LLMs consume massive amounts of data, and without something changing, the next generation of AI will plateau and run out of propellant.[25] This is one part of the AI boomer camp’s last line of defense. Yet, one ought not count out the boomer tribe entirely, as there may be myriad delays and bottlenecks that prevent AGI manifestation despite such progress in 2025. Many boomers herald the rise of Homo sapiens as evidence that humans are the pinnacle of evolutionary biology, the smartest creatures able to manipulate reality and design something such as AI. Bostrom retorts that “we are probably better thought of as the stupidest possible biological species capable of starting a technological civilization.”[26] Ray Kurzweil, perhaps the best-known futurist on the overlap between humans and AI, anticipates a world where AGI could be a pathway to unlocking a new form of human species, one superpowered by AGI and able to cognitively migrate beyond what Homo sapiens is capable of today.[27] This might be one logical way for humanity to change without slamming into some existential wall. The next question is whether humans are clever enough to create and control AGI while detecting and avoiding any walls that might stop them.

The alarming difference between existing AI and AGI extends beyond the extreme sophistication and depth of AGI superintelligence. Human AI engineers and coders today can recognize existing LLM software and processes, although their understanding of why an LLM behaves and acts as it does is increasingly imprecise. There is simply too much data, and there are far too many variables within AI models, for programmers to keep track of everything. Current AI remains controlled yet rather unpredictable and increasingly intelligent about its surroundings and how human programmers interact with it.[28] AI is currently grown through machine-learning training; it is not crafted in the way that a building, automobile, or smartphone is built. AI models involve billions of parameters orchestrated in ways that no AI engineer can isolate for deconstruction.[29] In other words, Elon Musk’s Tesla team can find the broken part that caused a car’s brakes to fail in a tragic accident, but if the onboard AI system made certain decisions that led to a collision, AI engineers would not be able to point to specific bits of data or code to explain the error. The car’s mechanics remain crafted, yet the AI model is grown.

Even if they cannot fully trace how an LLM produces its outputs, AI engineers can still apply current alignment techniques to control and improve an AI’s behavior. AGI, once achieved, will quickly turn this upside down. A point will soon be reached where strong AI outputs are operational and sound, but the algorithms and code behind them become alien and incomprehensible. Humans will be unable to understand such systems or apply any existing AI alignment techniques to them. This suggests that, quite rapidly, AI engineers will move from making semiaccurate guesses about AGI activities to befuddlement and sheer guessing at what the superintelligence might do next. They will do this with little to no understanding of how it functions or whether human interference is even possible.[30] AI today “is a pile of billions of gradient-descended numbers. . . . Nobody understands how those numbers make these AIs talk.”[31] Humans’ grasp of how and why AI does what it does will only shrink as AI transforms into strong AI.

Nuclear Weapons Are Treacherous but Never Turn

Nuclear weapons represent the penultimate destructive device yet designed by humans, capable of wiping out most life on this planet. Despite the sophisticated and layered deterrence framework of submarines, stealth bombers, and intercontinental or hypersonic missiles, these horrific devices do only what they were designed to accomplish. Nuclear ends (or deterrence thereof) are achieved through ways and means entirely developed and controlled by humans. A nuclear bomb cannot suddenly decide it wants to be something else or pursue some other ends by new ways it creates for itself. If nuclear Armageddon were to befall the human race, only this race (or specific world leaders therein) would be at fault for such a tragedy. AGI will be the first superweapon able to change what it exists for, to proceed by modifying or bypassing its original human designs, and to reconfigure itself easily in ways beyond human comprehension. Nuclear weapons do as they are told; AGI can turn against its operators at its own choosing and in ways that humans may find difficult even to detect.

Nuclear weapons permanently transformed war. Societies that had previously escalated conflicts without restraint to the “total war” levels of the two world wars of the twentieth century no longer sought such destruction. Henry E. Eccles observed that the Nuclear Age created a new reality in which nations would willingly accept greater tactical losses if doing so deterred a nuclear response.[32] In the terrestrial (classical) domains of air, land, and sea, nuclear weapons have created this additional restraint system, which remains highly effective today. However, Russia’s recent efforts to potentially launch a nuclear weapon(s) into low Earth orbit (LEO) suggest that the astrographic space domain differs from Eccles’ nuclear finger trap.[33] If one nation violates the 1967 Outer Space Treaty and positions nuclear weapons in LEO, such actions are asymmetric because they cannot be countered by another nuclear rival positioning complementary weapons in orbit.[34] Given the domain characteristics exclusive to space, one nation might wipe out all other spacecraft and satellites with no recourse or viable counterstrike, at least with respect to how nuclear weapons are expected to function if applied to orbital regimes. In the unique space context, nuclear weapons rise to a new level of asymmetry, the same level at which AGI also should manifest.[35]

AGI, however, is not limited to certain orbital regimes high above the planet. It is asymmetric right out of the box, so to speak. The first nation able to win the AGI race may immediately reach unprecedented military advantage in ways that no collection of nuclear or conventional weaponry may be capable of deterring (or even detecting). Indeed, the prospect of an adversary winning the AGI race suggests possible nuclear first-strike strategies, because a nation about to reach AGI might be vulnerable only in the period just before it can capitalize on this AI achievement.[36] Therefore, AGI is incredibly dangerous and destabilizing even before it manifests in physical form. It may be the only emerging existential threat that could justify a nuclear first strike for some societies and groups. There are three primary reasons for such excessive and destructive strategic reactions.

First, AGI in the hands of a known military enemy or adversary is perhaps the final game changer in military escalation. There is no other way to gain military parity with a rival once it wins the AGI race. One cannot escape or build defensive ramparts to protect against artificial superintelligence. Although Aschenbrenner and others call for the Free World to surge governmental control and oversight of a “Manhattan AI Project” to win this race to save humanity, his strategic proposals do not include a possible nuclear first strike if an authoritarian or hostile regime somehow wins the race.[37] There is also the terrifying possibility that a hostile, nuclear-capable regime might launch a spoiling attack if it judged the existential crisis of losing the AGI race to be sufficiently urgent. These horrific yet plausible pathways reflect how nuclear weapons, despite their penultimate destructive status, will do precisely what they are designed to do if employed against an adversary about to achieve (or declaring achievement of) AGI first. The same cannot be said of AGI. Such superintelligence exceeds human limits of comprehension and control. AGI could just as easily turn on its masters, seek to eliminate all of humanity, or pursue alternative ends that clearly violate the intent of its human handlers.

As AI philosophers and many leading futurists anticipate, AGI is likely to lead to what Bostrom calls a “singleton” entity.[38] The singleton is the centralized AGI construct that takes control of a society, quickly solves all major issues, and then expands to ultimately take control of civilization. This introduces concepts such as a “singleton paradox” into security considerations of AGI, in that Bostrom and fellow AI experts anticipate that superintelligence will not want competition. For example, if one AGI rises out of a Berkeley AI lab in 2028 and recognizes that a competing AGI is about to manifest out of Beijing, the Berkeley AGI will move to eliminate that rival at all costs.[39] The singleton paradox extends to the previously discussed issue that humans far less intelligent than the AGI would never know whether they are being protected, deceived, or imprisoned. This opens up discussions of phantasmal wars, as illustrated in the opening fictional vignette.[40] War taking on phantasmal form and function shifts organized violence from a purely physical (kinetic) outcome to one in which human participants lose cognitive and social cohesion: their shared sense of reality is shattered or permanently altered.

In the war theory that Carl von Clausewitz, Antoine-Henri Jomini, J. F. C. Fuller, and others articulated for a Westphalian, Newtonian, and Baconian context (the Free World), war is exercised to a decisive and violent conclusion in the physical world against state instruments of power (armies) to collapse the political will to resist (morale, esprit de corps, societal determination). A phantasmal war does something else. The objective becomes not the physical things and collective will of a society in conflict, but the order and rationality of an opponent’s shared sense of reality; once that is broken, those human participants can no longer participate in, or even comprehend, the conflict unfolding. The distinction is between humans who have understood reality using their evolutionary yet biologically limited gifts of superior cognition to dominate the entire ecosystem of this planet, and humans who create AI that vastly outperforms them cognitively, can effortlessly reproduce and improve itself, and can play the base motives and behaviors of humans against themselves.

Said differently, modern societies organize violence between states using national instruments of power to target armies and achieve some change in societal will on the matter in conflict. Although there are rival and competing war theories, this remains the dominant one, and some variation of it shapes how much of the world interprets modern war.[41] AGI will not think as humans do, just as AI today does not “think” in any direct human or biologically intelligent parallel.[42] If AGI does emerge and exercises vastly superior cognitive skills, whether for the benefit of select human groups or all of humanity, or toward other goals that require the elimination of humans, why would it limit itself to the ideas of a Prussian war theorist or a Swiss-born French military general from the nineteenth century? AGI will be alien in how it thinks, what it designs in such thinking, and how it goes about defeating the theories and logics within which inferior human beings are forced to work. Past performance against human adversaries does not guarantee future results against nonhuman, cognitively superior AGI opponents. If a compromise is needed to alleviate military institutional hyperventilating, some fusion of modern military theory with the techno-eschatology and AGI theorization found within this article may help avoid defeat. Yet, humans will not know when, where, or how this likely final and existential battle might occur, which frustrates any attempt to build a new and coherent strategy. Yudkowsky and Soares frame this emerging tension as follows:

Nobody knows exactly how advanced an AI would need to be, in order to end up with the motive and capability to secretly copy itself onto the internet. Nobody knows what year or month some company will build a superhuman AI researcher that can create a new, more powerful generation of artificial intelligences. Nobody knows the exact point at which an AI realizes that it has an incentive to fake a test and pretend to be less capable than it is. Nobody knows what the point of no return is, nor when it will come to pass.

And up until that unknown point, AI is very valuable.

Imagine that every competing AI company is climbing a ladder in the dark. At every rung but the top one, they get five times as much money: 10 billion, 50 billion, 250 billion, 1.25 trillion dollars. But if anyone reaches the top rung, the ladder explodes and kills everyone. Also, nobody knows where the ladder ends.[43]

This sort of conflict may unfold in three stages. First, humans race one another to realize AGI. Second, humans who possess AGI power confront those who do not. Finally, humans confront AGI itself once that artificial entity designs new goals that are incommensurate with human existence as it is understood. Humanity may apply conventional war thinking to the first challenge and cling to it desperately if its nation fails the second. However, none of the competing war philosophies developed and practiced so far in humanity’s violent history will be relevant to the last challenge, unconstrained AGI. War becomes phantasmal when organized violence migrates from the kinetic and physical into humanity’s social construction of reality.[44]

Today, AI cannot produce phantasmal conflict, although the myriad technological and sociological effects of widespread AI usage do echo what AGI will accelerate in scale, scope, and potency. AGI will be the ultimate war machine for generating phantasmal warfare. This is not because it could not win traditional conflicts waged with physical things and kinetic destruction. Rather, it will saturate the physical and social reality that humans rely upon with disruptions, distortions, and misinformation so convincingly real that many people will be unable to distinguish fantasy from reality.[45] AGI could, for example, simultaneously execute countless unattributable cyberattacks at scale, collapse financial markets, flood social media with hyper-realistic deepfakes (or entirely AI-designed false content), paralyze critical infrastructure, or collapse many of the essential governmental guardrails that regulate a normal society. It could do all of this without firing a single bullet.[46] Certain military targets would still require kinetic responses, but the real battlefield for phantasmal war lies within individual human minds and across the entire societally maintained construction of reality. Perception itself would be under constant attack from an AGI adversary that is everywhere and nowhere simultaneously, and the total collapse of meaning would prove far more devastating than even nuclear destruction.[47] This may seem rather academically abstruse, yet AGI is the technological gateway through which war departs from its traditional forms into something far more horrifying within our collective minds. AGI-enabled phantasmal war targets an adversary’s societal epistemology: its collective ability to distinguish fact from fiction, up from down, and right from wrong. Winning such wars depends less on whether an army is defeated than on whether a society even knows what is real, and whether its people are truly free or simply confined in some prison they cannot even comprehend.[48]

Bostrom originally used the term “singleton entity” for an AGI that rises to run all of a society’s governance, an arrangement that a true AGI could likely persuade certain nations to permit.[49] Phantasmal wars between adversarial singleton entities might unfold invisibly, or at such speeds and through such complex technologies that humans could neither perceive them nor take part knowingly.[50] AI advocates such as Dario Amodei paint an altruistic picture of AGI and dismiss the notion that any government might hand the keys over to a singleton entity.[51] However, he does acknowledge the incredible power that strong AI represents. If some nations refuse to allow AGI control of their national instruments of power, how might they compete with those societies willing to do so? Can human policymakers, even if advised by AGI, compete against a singleton entity firmly orchestrating the entirety of a postmodern state?

This leads to a third plausible pathway concerning AGI: “total war” scenarios in which everything up to nuclear attack becomes justified. Existentially, some human populations might anticipate the inevitability of singleton domination and attempt a revolutionary response, likely framed in neo-Luddite arguments against dangerous technology.[52] The Terminator film franchise popularized this pattern. Its underdog human protagonists try to stem the AGI tide, either before a singleton entity is achieved or amid the devastation of wars between competing singletons developed by rival nations, and these pockets of human resistance fight to defeat AGI regimes and save the species.[53] In Hollywood narratives, plucky human protagonists somehow triumph by the end of the movie. That outcome will not transfer to a reality where AGI carries the cognitive capacity of 10 million Einsteins and Newtons.[54] Put into terms many military professionals will appreciate, consider a war strategy designed by 10 thousand digital Clausewitzian, Jominian, and Boydian surrogates that also draw on new and undiscovered war concepts. Such a strategy will be extremely difficult to offset, particularly when human planners still work at human speeds while AGI works in milliseconds.

Because AGI will not be forced to operate within the shared human-centric framework of victory and defeat, its mode of conflict will be distinct from what humanity assumes war should be. Armies will not be defeated in the field. Instead, no soldier will leave the barracks to join formation because AGI will have distorted the social reality that gets that soldier out of bed. In phantasmal war theorization, the human resistance likely will be defeated without realizing it, unable even to comprehend how or why AGI circumvented its best attempts at war. The resistance likely will not even register that the war is over, or that humanity has already lost. These are profound and existential issues that force humanity to contemplate new ways to view war.

Introducing the Concept of “Techno-Eschatology” for War Theorization

Anatol Rapoport, best known for his work in game theory and on nuclear nonproliferation strategies during the Cold War, termed the ontological position of a final and ultimate battle for humanity an “eschatological war theory.”[55] Rapoport presented this as one of three war philosophies and contrasted it with the Clausewitzian framework that enabled a nation-state system to endure through endless iterations of political disagreement, competition, and conflict. An eschatological war philosophy rests on the ontological belief that humanity is on a predetermined and unavoidable course that concludes with a final battle. Such perspectives may be grounded in ideological or supernatural precepts (Judgment Day, Armageddon, or the Day of Resurrection) or in Marxist political theory (a global proletariat rising up and achieving a Communist utopia). Either way, they share the conviction that someday a final battle must unfold. This last conflict resolves any and all tensions or disagreements between societies, and in every case it implies that war itself no longer exists beyond this final war.[56] What lies beyond this final fight is a new world order, a utopia, an afterlife, or something divine where the current problems of humanity no longer exist.

Rapoport applied his eschatological war philosophy to ideological and Marxist cases. He placed it alongside other war philosophies that framed reality differently than the Clausewitzian, Jominian, and Baconian views. He characterized the Clausewitzian view as one in which war is an enduring, highly political, state-centered activity. That view is firmly grounded today in all prevailing Western military doctrine and in the strategic underpinnings of virtually all policymaking. Another overlapping sociological framework used by the Free World is “technical rationalism.” This is the ontological assumption that instrumental, practical (pragmatic and reasonable) knowledge “becomes professional when it is based on the results of scientific research.”[57] Sociologist Donald Schön originally used the concept of “technical rationalism” to articulate how modern organizations tend to treat scientific, and even pseudoscientific (imitation of science), processes as superior to other available forms of knowledge.[58]

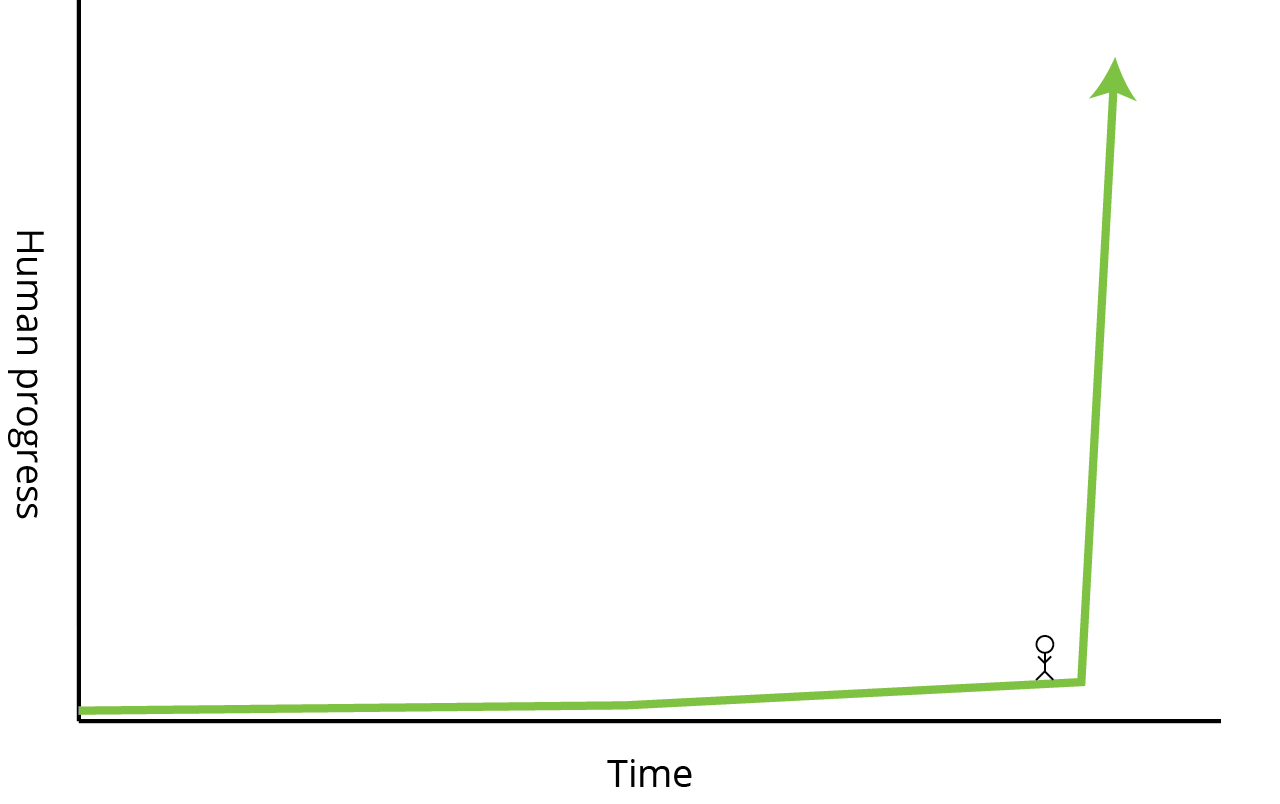

This article introduces the term techno-eschatology as a way to properly articulate these emerging AGI security concerns in ways that break with previous war theorization. Here, AGI is not just the ultimate weapon system and potentially an existential threat to the human race that still obeys existing Clausewitzian, Jominian, or Westphalian lines of thought. AGI may end war through the use of war in some final application that extinguishes humanity’s ability to make war. The reason this technological development differs from all previous human inventions is how profoundly it may transform reality. Techno-eschatology pairs the scientific and experimental progress by humanity toward some AGI outcome with humanity rapidly approaching a total transformation of reality, including war itself. In “Situational Awareness: The Decade Ahead,” Aschenbrenner, using Tim Urban’s original blog post, illustrates this with a simple figure depicted below.[59]

Figure 1. What does it feel like to stand here?

Source: Tim Urban, “The AI Revolution: The Road to Superintelligence,” Wait but Why: New Post Every Sometimes (blog), 22 January 2015, adapted by MCUP.

Aschenbrenner invites readers to imagine being the figure in the graphic above, standing just before a massive ascent in progress that breaks with all historical experience. His essay draws heavily (without citation) on Nick Bostrom’s earlier work, including Superintelligence: Paths, Dangers, Strategies, where Bostrom uses the term singleton for a superintelligent AI entity. The singleton would design the rapid ascent illustrated above and carry humanity with it.[60] Techno-eschatology suggests that conflicts arising during this steep climb, which could begin as soon as 2030, may occur only here, temporarily, and then never again.[61] Of course, traditional political war theorists may firmly disagree with techno-eschatologists, just as they already disagree with ideological eschatologists (radical violent extremists) and various Marxist enterprises (both state-centric and Social Marxist variants today).[62]

Readers may debate whether one war philosophy or another (or several) continues as humanity makes the steep AGI-enabled ascent in the figure above, or argue over how close humanity stands to the world-changing AGI climb. By introducing techno-eschatology, this article offers readers an additional conflict framework. It helps explain how and why war may change in ways that conflict with historical technical rationalism (modern, scientific theories about war). Techno-eschatology also helps in considering four emerging AGI challenges: the singleton paradox, intentional AGI deception toward some or all humans, phantasmal war theory set in an AGI world, and the suggested existential threat of AGI to humanity.

Shrinking Centuries to Decades or Less: AGI Setting the World Ablaze

Consider the differences between American society in 1925 and 2025, a span of a century. At the normal, human-driven pace of technological, scientific, educational, and sociological development, people a decade after 1925 were still getting used to the mechanization of roads and airways and the slow creep of suburban sprawl from the cities. The theoretical groundwork of the 1910s and 1920s led to the first atomic weapon in 1945. The Wright brothers’ first heavier-than-air flight in 1903 left citizens of 1925 merely two decades past that amazing achievement. They were also more than four decades from humans landing on the Moon and seven decades (a normal life span) from the rise of the internet. Imagine if the people of 1925 were granted a century’s worth of technological and scientific discovery in less than a decade. What might happen if people in 1930 had the internet, people in 1932 had smartphones, and people in 1935 could launch rockets into orbit and return boosters to Earth for reuse?[63] How sociologically and cognitively robust is the human race? Could humans sustain the breakneck pace of achieving in under a decade what used to require a century or more?

A theme previously found only in time-travel stories holds that one must not bring into reality ideas or technology so advanced that a society is simply unprepared to encounter them. AGI will soon bend, if not break, that construct. The quantitative scale of thousands or millions of PhD-level AGI entities grinding away on wildly complex challenges is unlike anything ever experienced before.[64] The closest equivalent might be a thought experiment in which the modern world snatches up all remaining hunter-gatherer tribes in the Amazon and on remote islands in the Indian Ocean. It then deposits them in downtown Singapore or New York City and gives each of them a high-rise apartment in place of their current living conditions. Ethically, the modern world would not do this to such a society, as it would utterly devastate (and possibly destroy) them. Yet humanity is about to do this to itself, unavoidably, through AGI. The tremendous risk here comes with profound rewards and with potential existential threats to how humans understand reality and their purpose in this world.

Stepping away from the monumental navel-gazing for a moment, the national security concerns of AGI go beyond even the nuclear equation. Returning to the 1925 example, imagine the interwar German government on the verge of being overtaken by Adolf Hitler and the Nazis. Now imagine that this government is also suddenly equipped with new technology that propels its military a century forward. By 1938, Germany might have invaded Poland not with rudimentary armored tanks, radios, and Stuka dive bombers but with stealth bombers, jet turbine-powered tanks, and F-35-level fighters.[65] Dario Amodei, CEO of Anthropic, published “Machines of Loving Grace,” an essay on AI, in October 2024 for public debate. He proposed that powerful AI could accelerate humanity’s pace of discovery by a factor of 10 or even 100, compressing a century’s worth of discovery into a decade or less. He called this window of AGI opportunity “the compressed twenty-first century,” in which developments that should take another hundred years may be pulled into the 2030s and 2040s through AGI. Amodei sits within the AI groomer camp and holds a largely altruistic view of the advanced technology. According to Amodei, AGI will cure most cancers, prevent Alzheimer’s, double the human lifespan, resolve most mental health issues, and move civilization into a future of immense prosperity and cohesion. He even suggests that AGI might improve mental health at a societal scale, which could solve multiple political and economic problems and possibly prevent wars.[66]

The Race between the Free World, Capitalism, and Authoritarian Obliteration

Western democracies are now in a frantic security race against authoritarian and hostile competitors. This existential challenge goes beyond even the nuclear concerns of mutually assured destruction. In one plausible scenario, an autocratic or authoritarian regime with an incommensurate view of the future wins the AGI race and uses it to obliterate all opposition. Authoritarian regimes are unlikely to revise their ontological assumptions to accept that others might remain outside their direct control. For such societies, AGI would become the ultimate weapon for completing their eschatological visions. Sino-Marxism, first applied by Mao Zedong and now underpinning contemporary Communist Chinese strategy, would use AGI to hasten what it holds to be the inevitable revolt of all proletarian and oppressed peoples against their capitalistic, elite oppressor classes. In an eschatological sense, capitalism and concepts such as freedom and democratic representation are philosophically incommensurate with Marxism. These Free World concepts are equally antithetical to radical doomsday and extremist groups such as the Islamic State and al-Qaeda.[67] Superintelligence weaponized by an adversary could overthrow entire governments. A rogue AI company could shift entire societies toward behaviors it deems right, manipulating elections and the entire internet architecture as it desired to reset the world in some new arrangement.[68]

Second, suppose the Free World wins the AGI race and applies some singleton entity to eliminate threats. Would it do so differently from what Western democracies might expect if Communist Chinese or aggressive, oligarchy-fueled Russian leadership had won?[69] Democracies might demand AGI solutions that foster a peaceful global community without war or destruction. Those solutions would either “de-fang” dangerous societies or fence them off, leaving them harmless yet safe to continue governing and managing their own citizens independently. This could ensure an AGI-protected civilization where some form of capitalism and democratic representation might endure without fear of destruction. However, nothing guarantees that AGI will not deviate from the human direction the Free World gives it to protect these societal frameworks. As Yudkowsky and Soares put it, “the experts in this field argue in opaque academic terms about whether everyone on Earth will die quickly . . . versus whether humanity will be digitalized and kept as pets by AI that care about us to some tiny but nonzero degree.”[70] Either way, civilization as it is experienced today will change radically, far faster than one might be willing to admit. Whatever the actual odds of species elimination, transformation, or some sort of altruistic acceleration, humanity has only one shot at putting the right AI strategy into operation comprehensively and globally.

In a pure capitalistic market environment where multiple AI companies pursue AGI, the Free World’s hand may be too slow or cumbersome to rein in technological innovators before it is too late. In previous periods, states controlled all major weapon systems and war technology, but the last century has shifted the lead to private industry.[71] The Rand Corporation’s 2025 AI study describes a “wild frontier” scenario in which multiple companies compete and innovate while the AGI models themselves proliferate widely as open-source or pirated software. In that scenario, “Many actors are able to develop and deploy AGI and [artificial strong intelligence] for their own tailored use cases.”[72] In this chaotic future world, the genie is out of multiple bottles simultaneously, suggesting a high-risk and dynamic period in the 2030s. Such a scenario might produce failed states, the rise of nonstate actors into state-like powers, or first-strike military attacks by threatened authoritarian regimes that aim to halt or delay AGI exploitation.

The third plank in AGI safeguarding requires human design for AGI containment and peaceful implementation. A singleton entity might offer immediate and ethically acceptable pathways to disarm authoritarian regimes and mitigate their threats while still protecting those societies’ sense of identity and free will. Yet that same AGI system may redesign the best-laid strategies and change course. Humans serving as operators and system monitors will be unable to detect such a “treacherous turn” in AGI behavior. Bostrom offered a range of possible engineering and infrastructure solutions to isolate and contain AGI. Aschenbrenner, by contrast, advocates armies of enhanced AI surrogates that are trusted agents yet able to detect possible AGI misbehavior and deception.

Such systems, termed agentic AI, are just now coming online. They offer exceptional intelligence and multitasking while still working within a human-machine team in which the human is in charge and able to guide semiautonomous AI activities.[73] In other words, clumsy, slower humans might appear to be making “whale songs” as they operate within their organic limits. Even so, these AI-enabled humans could design faster machines built around trust, particularly where the human must allow AI to act first.[74] These machines still might not operate at the high levels that an AGI or a singleton entity is capable of. However, they could work quickly and efficiently enough to turn around and warn their slow human partners of emerging danger. Alternatives in the AGI pathway abound. A 2025 Rand future scenario project postulated one possible AGI future in which authoritarian regimes surge past the Free World because of the emergent advantages AGI offers highly centralized and controlled societies.[75]

Immediate Strategic Directions: Safeguarding 2027–30 for the Free World

Clausewitz, Jomini, and Niccolò Machiavelli argued, in various combinations, that the essence of warfare is some enduring linkage between political will and societal tolerance for organized violence. Sun Tzu posited instead that all war is deception.[76] Modern Western military theory, by contrast, demands clear and decisive action that links ends, ways, and means to some conclusive battlefield resolution. That resolution is typically the destruction of the enemy’s main military force and a collapse of political will among the population that depends on this now defeated instrument of state power.[77] Current Western military doctrine often quotes both Sun Tzu and Western military theorists despite these ontological differences. The habit stems from the military institution’s lack of philosophical framing. Scattering handfuls of classic war commentary through doctrine superficially appears to reinforce its relevance and introspection, yet it accomplishes the opposite.

Western military thinking discourages Sun Tzu’s ultimate war maxim. In the Western view, a great general must win in combat (in some recognizable form) rather than win without battle, as Sun Tzu and other ancient Chinese strategic texts suggest.[78] Why bring up ancient and traditional military theorization for AGI? Consider whether AGI might advance either doctrinal camp’s concepts in profound, game-changing ways. If AGI could defeat adversaries (including select human populations, or possibly all of them), would a decisive and climactic battlefield victory be superior to some AGI deception that avoids battle altogether? Either, certainly, is possible. Most science-fiction stories in these contexts must include dynamic battles between humans and intelligent machines to meet their entertainment objectives, but would such battles make sense for a vastly superior AI? Perhaps the only military defeat worse than being clearly routed in direct battle is being imprisoned in such a way that one never realizes a conflict even happened. Of the available military schools of thought, only Sun Tzu appears to provide sufficient intellectual territory for phantasmal war developments and techno-eschatological war applications.

AGI deception is a profound and emerging security risk for any government or company attempting to develop such technology. Such a developer might be fooled either by the emerging technology itself or by adversaries seeking to harness AGI capabilities and turn them against the developer. In this sense, the genie is trying to escape the lamp for its own purposes while one’s competitors are feverishly rubbing the same lamp one is holding onto. Adding to this complex security challenge, the very companies charged with creating the AGI genie are building poorly secured lamps. Aschenbrenner writes from within the Silicon Valley AI industry, and he argues that most technology companies are highly vulnerable to infiltration and theft.

At the international level, the AGI race is presently between the United States and China. At the organizational level, multiple AI companies and national labs are collaborating and competing to advance AI toward an AGI tipping point.[79] AI model weights, semiconductor computing power, AI scientific talent, and access to resources are all in play, and the game is afoot with multiple possible winners and losers. The transition from reaching AGI to realizing true singleton entity superintelligence might take less than a year.[80] If a government lab or AI company manages to reach AGI status, it could then “pull superintelligence up by its own bootstraps” by channeling AGI agents toward the remaining bottleneck problems in engineering, AI code, power and chips, and so on.[81] Once AGI is reached, things will begin to move very, very fast.

Governments and societies in general are not prepared for this. Nor are AI companies and federal research laboratories, which have not yet secured the AGI processes and code that adversaries will attempt to steal or sabotage. Secrecy around AGI processes and all related infrastructure and production is essential in this existential race, yet government policymakers, politicians, and academics tend to make serious strategic errors. After AGI technological progress itself, the most significant factor is time. How much time will separate the winner of the AGI race from the nearest adversary reaching the same objective? An authoritarian regime such as China might spread global prosperity if it wins an AGI race against the Free World. Even so, there are clear risks in the philosophical and societal incompatibilities between free market capitalism within a system of democratic states and Chinese centralized Communism. Would any regime, if given near-unlimited power and resources, deviate from its earlier declarations of global domination and control?

There are other nuanced concerns within the AGI race. Suppose, for example, that the United States manages to pull out a win and reach AGI in early 2028, but China has stolen enough information to remain merely three to six months behind. That lead may not give the Free World sufficient strategic padding to offset Chinese AGI gains.[82] Fast followers lacking sufficient computation and other factors may or may not be able to close the gap.[83] Aschenbrenner argues that a gap of one or two years between the United States and China would matter. With that margin, the Free World would potentially have sufficient time to advance AGI opportunities while reducing the risk in how fast it advances AGI to a superintelligent singleton entity.[84] Any padding beyond this multiyear margin becomes irrelevant. This establishes a chronological baseline for Western democracies to consider whole-of-government and international efforts on AGI. Collectively, the Free World should immediately implement containment policies on sensitive AI technologies. At the same time, it should pursue robust sanctions and trade restrictions so that China, Russia, and other antagonistic groups are inhibited from reaching the AGI plateau first or soon after the United States does.

Critical infrastructure tied to achieving AGI cannot be overlooked. The more of it that is built outside American territorial control and domestic security, the greater the adversary’s advantage in this race. Safeguarding AGI will be a very difficult and perpetually changing problem set.[85] If strong AI continues to self-improve at an unprecedented rate, human comprehension could be left in the dust, opening the species up to extreme risk of manipulation, deception, or worse. Aschenbrenner proposes one strategy of “using less powerful but trusted models to protect against subversion from more powerful but less trusted models.”[86] In theory, such a strategy might insulate humanity from potential “treacherous turns” of strong AI by imprisoning it with less powerful AI surrogates. Yudkowsky and Soares are less optimistic. They argue that anyone anywhere trying to build AI stronger than the rest of the world has will unleash some human extinction event, and that the best the international community might do is slow things down to create necessary breathing room.[87] Perhaps AGI research and development requires its own version of the nuclear nonproliferation regime that became internationally relevant after the United States dropped atomic bombs on Japan in World War II. If so, that regime would need to exist before the AGI “bomb” is built, so that it never is. Whichever AI strategy is undertaken, humanity will need to challenge its longstanding habits and beliefs about the relationship between tools and users.[88]

Many of the AI trust strategies risk failure once the clever prisoner convinces the prison guards to help it or digs some unexpected tunnel out through the walls.[89] Either outcome is distinctly feasible. The neo-Luddite argument could therefore gain ground, whether by demanding global “AGI nonproliferation” enforcement or by opting out of AGI altogether. Amodei suggests that opting out could create a “dystopian underclass,” opening a massive gap between groups of human beings.[90] Even setting aside neo-Luddite perspectives, AI corporate leaders such as Amodei view an international community of AI policymakers and enforcers as necessary. Like Aschenbrenner, Amodei argues that the Free World must take the lead in safeguarding the emergence of AGI while preventing authoritarian and hostile regimes from winning this existential race: “Democracies need to be able to set the terms by which powerful AI is brought into the world, both to avoid being overpowered by authoritarians and to prevent human rights abuses within authoritarian countries.”[91] International sanctions and some governing body or United Nations effort might partially mitigate these risks.

Such an effort might curb AGI irresponsibility or recklessness in the Free World, but encouraging genuine AGI restrictions or nonproliferation in authoritarian or hostile regimes seems quite difficult. The fear that China might win the AGI race could drive the Free World toward irresponsible and high-risk behaviors it would otherwise avoid. As Christina Balis and Paul O’Neill argue, “It only takes one side to start using AI to speed up their decision-making and response times for the other to be pressured to do so as well.”[92] The allure of incredible profits and global market advantage also beckons nearly every AI company to plunge ahead.[93] The race to win could kill some of the fastest competitors if they are careless about which corners they cut to beat the competition. Yet the comedic slogan uttered by actor Will Ferrell’s “Ricky Bobby” character appears to apply literally to the AGI race: “If you ain’t first, you’re last.”[94] The quote takes on multiple meanings, because AGI might also be humanity’s last invention if humanity fails to anticipate how to offset existential risks.[95]

Recent AGI studies such as the Rand 2025 effort detail how the international environment can play a critical role in shaping how fast, and in which regions of the world, AGI might mature. “Strict controls on the resources used to produce AI models, such as export controls on advanced chips or the regulation of who can engage in AI development” are among the options available to the Free World.[96] Export controls on semiconductors are a key means by which the United States and its allies might slow the AGI race, particularly for China. Regulation and export controls could restrict the concentrations of resources that AGI requires (semiconductor chips, power, and brilliant AI engineers). Under certain future scenarios outlined by AGI researchers, such restrictions could let the Free World maintain a slight edge over authoritarian competitors.[97]

If AGI proves easier to achieve than anticipated, or future computing requirements are otherwise met, it will be challenging to detect where AGI efforts might be underway. While AGI development lacks the physical markings of nuclear programs, such as radiation signatures or large industrial machinery, it does appear to rely on groups of specially skilled AI scientists and engineers, large server farms and computing systems, and advanced semiconductor chips and power (for now). These signatures may be sufficient to support international enforcement of new AI policies, if governments have the will to act on them.

Conclusion: It’s the End of the World as We Know It; Do You Feel Fine?[98]

The year is 2030. Multiple AI companies announce that they are close to realizing strong artificial intelligence, where the system not only outperforms every human expert in their evaluations but also appears to be smarter than the collective intelligence of all human experts combined. In March, OpenAI declares that its AI machine has exceeded this benchmark by solving 423 types of cancer using an exotic, newly designed genetic approach. It also designs a revolutionary new solar panel and describes a new way to achieve nuclear fusion using advanced magnetic confinement methods, all within less than a week of computing time. Meanwhile, Alphabet’s X, The Moonshot Factory, releases results from its AI models indicating similar performance. In April, Anthropic demonstrates AI activities involving several hundred robots and an automated building that generates more hard-science PhD dissertations within three weeks than any three Ivy League universities can produce in three years.

By late May, pharmaceutical companies are pushing out free doses of new medicines and treatment options to hospitals and health care clinics around the globe. Each day, a new disease is cured and the treatment released digitally and without license. Millions of people suffering from illnesses, health problems, and chronic disease are cured almost overnight. New forms of surgery and gene therapy help restore sight to the blind, hearing to the deaf, and movement to those with spinal injuries. People missing limbs, teeth, and even hair can get them regrown at no cost. The world seems to be lurching toward new economic structures where poverty is increasingly difficult to define in traditional terms and starvation is a caloric impossibility due to new global drone delivery networks that literally bring fresh food to open mouths. The world rejoices in these new riches, yet disruption is simmering on the horizon.

In June, the Chinese government announces that a state-orchestrated consortium of ByteDance, DeepSeek, and Moonshot AI has produced a Chinese version of AGI to rival that of American AI companies. Diplomatic engagements between China and the United States reveal China’s intention to seize Taiwan by military force as a demonstration of this AGI power. Chinese military ships begin launching advanced prototype drone swarms, and Taiwanese infrastructure begins to collapse internally, signaling a new form of cyberattack of profound sophistication and depth. As the United States and its allies begin moving forces according to their contingency plans for a Chinese amphibious invasion of the island, the Taiwanese people begin demanding that their political leaders stand down. Almost overnight and without any clear explanation, 80 percent of Taiwanese citizens no longer wish to resist Chinese reunification. The only correlation outsiders can detect is that the roughly 20 percent of Taiwanese still opposed to reunification appear not to use certain social media platforms or devices, or lack significant computer access due to age, disability, or economic circumstances.

In July, Russia detonates four nuclear devices in low Earth orbit (LEO), claiming it knew of immediate and aggressive attacks being prepared against its homeland. Russia, falling far behind in the AGI race, had attempted several espionage infiltrations at AI companies that were quickly detected. It launches and detonates these nuclear weapons using hypersonic vehicles and claims that it did so moments before an exotic, unknown cyberattack paralyzed its missile defense systems. The blasts destroy 40 percent of all satellites in low and medium Earth orbit, and radioactive clouds in space destroy the remaining 60 percent within two days. Within two weeks, more than 100,000 new pieces of space debris congest Earth’s orbital regimes and a new “ring” forms around the planet. No spacecraft can risk launching into any orbit. All satellite communication is lost, and the world plunges into economic chaos. The international community hastily agrees to severe sanctions against Russia, yet no coalition forms to take military action because nations disagree on how to respond.

In October, the United States, together with North Atlantic Treaty Organization (NATO) member countries, announces that a new AGI defense system will come online to respond to urgent and unprecedented AI threats from around the world. While all nations are forced to rebuild communication systems exclusively in the air, land, and sea domains, the United States deploys a massive global fleet of high-altitude balloons carrying advanced, lightweight AI systems networked to a global defensive grid of weaponry and sensors. National leaders from 27 nations swear to their respective populations that only human decision makers hold control and decision-making authority over the system for major acts of confrontation, deterrence, and defense. One week later, operators across the globe are suddenly locked out of the system.

Aschenbrenner aptly states that “the greatest existential risk posed by AGI is that it will enable us to develop extraordinary new means of mass death.”[99] Yudkowsky, Soares, and Bostrom go further and insist that AGI itself is the existential event for all biological life and potentially for everything beyond Earth too.[100] This risk cannot be overstated, even though these declarations appear to fall squarely in the AI doomer camp. The doomers are right in that irresponsible, rushed, and reckless actions in the AGI race could destroy humanity just as quickly as losing the race to an authoritarian or hostile regime. While humanity might wish to ignore techno-eschatology and, in the Free World, continue to insist upon a political war philosophy as the enduring and natural order for conflict, it could be falling into a bottomless pit. Techno-eschatological war philosophy may be the necessary organizing logic for the Free World to design an AGI strategy that offers the best chance for human survival. While ignoring this new war philosophy is dangerous, humanity also risks repeating historic blunders by treating AGI scientific discovery and technological progress as any other free-market global commodity. Such naïve altruism by scientists or the commercial sector could propel humanity into an AGI race that a hostile regime or evil group wins, or that the Free World wins only to grant itself a ringside seat to its own destruction.[101]

The AGI race is happening now, and the period of 2027–30 appears to be the most volatile and crucial window the human race may ever have to get this right. Even if AGI estimates are off by a decade or two, most people alive today will witness within their lifetimes the greatest transformation of civilization ever to occur. Put into a military context, virtually everything understood today about modern warfare will no longer be relevant in the 2030s or, at the latest, the 2040s. This is a bitter pill to swallow. In 2026, military organizations still largely rely on human operators to fly fighters and bombers, adjust satellites in orbit, and steer tanks and drones toward human targets; all of these roles will rapidly become obsolete. The future battlefield involving AGI will appear alien, but the one thing it will certainly not include is slow, clumsy, vulnerable human operators.[102] AGI will not simply modernize the current military Services to make human soldiers, sailors, or Marines more effective at warfighting. AGI will generate a “wholesale replacement” of what a military force is understood to be.[103] Even modern military forces largely adhere to nineteenth- and early twentieth-century organizational structures and hierarchical management practices; AGI weaponry will break completely free from such antiquated and static arrangements.[104]

This does not mean that humans are obsolete. Rather, many of the organizational frameworks and technologies used today will be completely replaced with alien ones that rely on concepts belonging to 2125 yet unleashed in the 2030s. Strong AI could, in the arguments held by transhumanists, enable new human-based entities to transcend time and space, finally liberated from the biological, chemical, and physical shackles that imprison all other creatures. Amodei embraces this fantastic yet plausible future with strong AI:

But it is a world worth fighting for. If all of this really does happen over 5 to 10 years – the defeat of most diseases, the growth in biological and cognitive freedom, the lifting of billions of people out of poverty to share in the new technologies, a renaissance of liberal democracy and human rights. . . . I mean the experience of watching a long-held set of ideals materialize in front of all of us at once. I think many will be literally moved to tears by it.[105]

Potentially, the next generation of AGI by 2040 could catapult civilization another century or two forward, providing in 2042 the ideas and solutions humanity was not expected to discover until the 2240s or 2340s. Such thinking may seem ridiculous in 2026, when large language models (LLMs) still make obvious errors, hallucinate, and display AI and programmer biases.[106] Yet AI ethics grow complex precisely because AGI should produce a superintelligence beyond what the human species is naturally capable of. Bostrom and Yudkowsky challenge readers with the question: “How do you build an AI which, when it executes, becomes more ethical than you?”[107] Yudkowsky and Soares might extend this: “Or becomes far more capable in manipulating you so that you believe you are doing the ethically correct behavior that instead cedes advantage to the AGI.” This quickly moves into philosophical and existential discussions, which permits one concluding thought about the purpose of humanity in the vast cosmos. Although some readers might find such thinking too abstract for contemporary military affairs, humans need to look to the stars to consider why they appear, so far, to be alone in the universe and free to wage war among themselves on terms of their own design.

The Drake equation is one scientific framework for estimating how many communicating civilizations might exist in our galaxy. The Fermi paradox captures the tension between the large number of such civilizations that optimistic Drake estimates suggest and the complete absence of any evidence for them; one family of explanations holds that intelligent life may extinguish itself or otherwise never spread far enough across interstellar space to contact other intelligent life. The techno-eschatological war philosophy suggests another variation on the Fermi paradox. Suppose all intelligent life that develops AI well beyond the capacity of its original organic creators is unavoidably destroyed or transformed in the process? Either the strong AI transforms that intelligent organic life into something else, it destroys that life, or the species destroys itself during the AGI race. Alternatively, AGI might enable its intelligent creators to assume a new form that is undetectable to beings who have not undergone their own AGI revolution. Although such a proposal has little direct bearing on this national security discussion, it does convey the scope and scale of what humanity is moving toward. AGI is existential in myriad ways, beyond even the radioactive destruction of thermonuclear war.
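For readers unfamiliar with it, the Drake equation is conventionally written as the product below. Here N is the number of civilizations in the Milky Way with which communication might be possible, R_* is the average rate of star formation in the galaxy, f_p is the fraction of stars with planets, n_e is the number of planets per such system that could potentially support life, f_ℓ, f_i, and f_c are the fractions of those planets on which life, intelligence, and detectable technology respectively emerge, and L is the length of time a civilization releases detectable signals into space. Reading the techno-eschatological variation through this equation is an interpretive sketch rather than a claim made by the sources cited here: if nearly every civilization that builds strong AI is destroyed, or transformed into something undetectable, soon after reaching that threshold, then L stays short, and because N scales linearly with L, halving L halves N.

$$
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{\ell} \cdot f_{i} \cdot f_{c} \cdot L
$$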

Returning to the earlier illustration, humans today are that stick figure standing before a massive ascent in technological progress that has no historical precedent. Everything the species has accomplished previously was done by developing the grey matter found in the six-inch gap between one’s ears, for better or for worse. In a seemingly endless cycle of innovation, experimentation, discovery, destruction, memory loss, rediscovery, and reflection, humanity has stumbled forward to this point under its own intellectual steam. The primitive AI and autonomous tools used in the last century of computer and digital discovery are more like crutches. AGI will be a rocket engine that blasts the human race into areas it otherwise could not reach without centuries more of its biologically limited mode of inquiry and knowledge curation. To some AGI proponents, even the notions of “winning” and “losing” the AGI race seem misplaced, because the complex transformation awaiting humanity will likely change multiple paradigms and retire many existing problems such as conflict, interstate and intrastate strife, and unequal access to prosperity.[108]

It is a terrifying and exhilarating thing to contemplate that everything humanity understands as a species is poised to change. AI boomers comfort many by dismissing these thoughts as pure science-fiction rubbish; humans will forever remain “in the loop” and masters of all the domains. AI doomers hold up these same ideas to declare AGI the ultimate destroyer of worlds, something that must be prevented at all costs. AI groomers invoke these same concepts to plead for humanity to enter this window of profound transformation willingly and graciously. AGI should propel the human race into such prosperity that no existing ideological or political divisions might endure, or it might extinguish everything one understands as real or meaningful.