A New Approach to Assessments and Evaluations

Captain James R. R. Van Eerden

https://doi.org/10.21140/mcuj.2020110105

Abstract: Despite the billions of dollars invested in the security cooperation enterprise each year, the Marine Corps and the Department of Defense (DOD) have failed to implement standardized metrics and processes for evaluating security cooperation engagements at the tactical level. Without such data, it is nearly impossible for the security cooperation enterprise to accurately assess progress in achieving national security objectives, such as partner nation basing access and partner force capacity building. Without clear signposts of progress, cooperation engagements will continue to be hampered by redundant or irrelevant training that limits the return on investment for the DOD and strategic U.S. partners.

Keywords: security cooperation, Marine Corps, Department of Defense, cooperation agreements, national security objectives, return on investment, data, metrics

In the realm of investment banking and equity markets, the term alpha is used to describe financial performance relative to standard market returns during a given period of time.1 For investors, the process of “seeking alpha” requires discipline and careful attention to data and analytics patterns that can ultimately lead to a greater return on investment. Similarly, individuals responsible for managing defense spending should seek to implement standard methodologies and data-based decision-making processes, particularly in high investment areas like security cooperation. At times, the focus on new technological developments, such as artificial intelligence and 3D printing, has distracted from the human dimension of conflict and the “key role in building partner capacity” described in the Marine Corps Operating Concept.2 While it is vital to continue developing disruptive technology for future warfare, it is equally important to pursue innovation through improvement of existing technology and processes.

Based on numbers provided by the Office of the Secretary of Defense for fiscal year 2019, security cooperation activity accounts for at least $10 billion in spending, a conservative estimate that does not include classified programs or drug-interdiction programs authorized under Section 127e and Section 284c of Title 10 of the U.S.C.3 Each year, the Department of Defense (DOD) conducts 3,000–4,000 security cooperation engagements with more than 130 countries.4 Despite the large investment of money and time in the security cooperation enterprise, the DOD has failed to implement a standard methodology for evaluating security cooperation activity at the tactical level. In light of this, the Marine Corps should implement a standardized, quantifiable, tactical-level security cooperation assessment methodology to accurately measure the effectiveness of engagements with partner forces.

Security Cooperation Defined

Security Cooperation, Joint Publication (JP) 3-20, provides the following definition of security cooperation:

Security cooperation (SC) encompasses all Department of Defense (DOD) interactions, programs, and activities with foreign security forces (FSF) and their institutions to build relationships that help promote U.S. interests; enable partner nations (PNs) to provide the U.S. access to territory, infrastructure, information, and resources; and/or to build and apply their capacity and capabilities consistent with U.S. defense objectives.5

The Fiscal Year (FY) 2019 President’s Budget: Security Cooperation Consolidated Budget Display outlines seven categories of security cooperation activity, including military-to-military engagements, support to operations, and humanitarian and assistance activities, among others.6 The security cooperation framework traditionally includes security assistance (SA), security force assistance (SFA), and some aspects of foreign internal defense (FID).7 In the context of this article, the term security cooperation refers primarily to military-to-military engagements, where the U.S. military engages in training partner forces under the auspices of Title 10 and Title 22 authorities.

The important role of security cooperation in the future operating environment cannot be overstated. The Summary of the 2018 National Defense Strategy of the United States of America asserts that enduring military success is contingent upon “building long-term security partnerships” and upholding “our allies’ own webs of security relationships.”8 In the current operating environment marked by great power competition, security cooperation will be a vital tool used to preempt high-end conflict and assure strategic access to basing, equipment, and intelligence resources. In short, security cooperation is an indispensable pillar of U.S. foreign policy, with the capability to influence all instruments of national power.

Overview of Doctrine and Policies

Though there is much current debate about cost-sharing measures between the United States and its allies, few national security experts would object to the importance of security cooperation. However, it is often difficult to articulate the metrics that define mission success. Perhaps the problem is best framed with a question: If indeed security cooperation is important, how does one measure the output from such activity to shape future planning and funding? This question is only partially answered by doctrine and directives at the joint and Service component levels.

Security Cooperation recommends that all combatant commanders use an assessment, monitoring, and evaluation framework. However, the publication mistakenly identifies strategic-level assessments and evaluations as the only deficiency in security cooperation planning: “Because SC activities are dispersed and generally support long-term objectives, the impacts can be difficult to immediately measure above the tactical and operational levels (i.e., operational assessments and service or functional component-level evaluations).”9 In January 2017, the Office of the Under Secretary of Defense for Policy published DOD Instruction 5132.14: Assessment, Monitoring, and Evaluation Policy for the Security Cooperation Enterprise, which further elaborates on the assessment, monitoring, and evaluation (AM&E) framework. The DOD instruction outlines the responsibilities of all relevant parties at the strategic level, including the Chairman of the Joint Chiefs of Staff, the geographic combatant commanders, and the functional combatant commanders. The instruction states that the “DoD will maintain a hybrid approach to management of AM&E efforts, whereby, in general, assessment and monitoring will be a decentralized effort based on the principles and guidelines established in this instruction and other directives, policies, and law.”10 In theory, this decentralized approach to assessments is preferable. The reality, however, is that Service components have failed to support the Office of the Secretary of Defense’s AM&E framework with focused data inputs.

While the Marine Corps has successfully implemented operational assessments through the use of security cooperation engagement plans and capabilities-based assessments, it lacks the necessary tactical assessments to contribute to the higher-level AM&E structure. In general, the Marine Corps supports the implementation of assessments for security cooperation engagements. Marine Corps Order 5710.6C, Marine Corps Security Cooperation, which governs the conduct of security cooperation activity, suggests that integrated assessment teams are vital to an effective long-term strategy. The order also states that the purpose of assessments is to “provide maximum effectiveness.”11 In addition to the Marine Corps order on security cooperation, Marine Corps Operations, Marine Corps Doctrinal Publication 1-0, affirms the value of assessments. According to Marine Corps Operations, assessments not only provide a “basis for adaptation,” but they also serve as a “catalyst for decision-making.”12 Based on this information, it would seem that the Marine Corps and the joint force have properly identified the need for security cooperation assessments, which prompts the question: What, if anything, needs to be changed about the current approach to security cooperation engagements?

Research Hypothesis

As a former theater security cooperation (TSC) coordinator for Special-Purpose Marine Air-Ground Task Force Crisis Response-Africa 17.1 (SPMAGTF-CR-AF 17.1), the author was not aware of any method to accurately measure the performance and effectiveness of security cooperation missions. A review of the after action reports submitted by previous teams revealed a noticeable scarcity of specific training data; higher headquarters and the Marine Corps Security Cooperation Group had not promulgated a standardized, quantifiable, tactical-level assessment methodology. Although the author’s personal experiences indicated that security cooperation assessments lacked analytical rigor, additional independent research was used to validate the hypothesis that the Marine Corps lacked a standardized, quantifiable process for evaluating security cooperation missions at the tactical level.

Research Process

The research consisted of two parts: first, the author thoroughly reviewed the seminal doctrinal publications, directives, and policies relevant to the field of security cooperation to determine if an assessment methodology existed. This process entailed a complete review of 16 authoritative documents and articles. Second, the author completed a data-mining project to evaluate after action reports submitted to the Marine Corps Center for Lessons Learned (MCCLL). The data mining spanned six years of SPMAGTF-CR-AF data between 2010 and 2016, excluding 2011. The author’s research included both unit after action reports and MCCLL reports for security cooperation missions. The source content for the second part of the research project consisted of 19 after action reports, totaling 280 pages of material.

Summary of Findings

The first part of the research project involving the 16 authoritative source documents yielded no additional information about quantitative assessment methodologies. The second part of the research project yielded more instructive results:

- 32 percent of the documents did not provide a single reference to the words “assessment” or “evaluation”

- 68 percent of the documents mentioned the word “assessment” or “evaluation” at least once

- 38 percent of the documents that used the words “assessment” or “evaluation” used them in the context of developing a training schedule or assisting partner nation forces

- 20 percent of the documents explicitly mentioned using training and readiness standards as a baseline for evaluating partner nation forces

- None of the after action reports incorporated quantifiable data or a standard process for evaluating partner force performance and capability
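The keyword tally behind the first two findings above can be approximated with a short script. The following is a minimal sketch only; the article does not describe the author's actual tooling. The function name, the regular expression (which also matches plural forms, an assumption), and the sample report texts are all hypothetical.

```python
import re

# Assumption: a report "mentions" a keyword if the word (singular or plural)
# appears anywhere in its text, regardless of case.
KEYWORDS = re.compile(r"\b(assessments?|evaluations?)\b", re.IGNORECASE)


def keyword_share(documents):
    """Return the percentage of documents that mention either keyword."""
    if not documents:
        raise ValueError("at least one document is required")
    mentioning = sum(1 for text in documents if KEYWORDS.search(text))
    return round(100 * mentioning / len(documents))


# Hypothetical report excerpts, invented purely for illustration.
sample_reports = [
    "The team conducted an initial assessment of the platoon.",
    "Lodging was inadequate for the duration of the mission.",
    "A final evaluation was held on the last training day.",
    "Transportation to the range was delayed twice.",
]

share = keyword_share(sample_reports)
print(f"{share} percent of reports mention a keyword")
```

A tally like this only shows whether a word appears; judging the context in which it is used, as in the third and fourth findings, still requires reading each report.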

The most salient conclusion from this data is that security cooperation leaders recognize the importance of assessments and evaluations in achieving successful outcomes with partner forces. However, the research also implies that security cooperation leaders have not fully incorporated quantifiable standards into the evaluation process, as evidenced by the lack of data and inconsistent assessment methodologies.

Research Conclusions

Despite the myriad references to assessments and evaluations in doctrine, policies, and mission after action reports, the analysis confirmed that the Marine Corps had not published or even developed a standardized, quantifiable, tactical-level assessment methodology for security cooperation engagements. Currently, the only feedback received by operational planners consists of subjective observations from team leaders and team chiefs in the form of after action reports. Marine Forces Europe and Africa (MFEA) headquarters provides limited guidance for developing the after action reports, and quantitative data is not required. Most of the reports are replete with anecdotal information, in which teams bemoan their lodging conditions or food options rather than provide specific, actionable data to inform future engagements and planning. This void in the feedback loop means that operational and strategic planners are left without the details necessary to complete their respective higher-level assessments.

A Proposed Solution: Hybrid Training and Readiness Assessment Methodology

Galileo Galilei noted that one should always seek to “measure what is measurable, and make measurable what is not so.”13 Based on the previously mentioned research findings, the security cooperation enterprise has not succeeded in making security cooperation activities measurable at the tactical level. In light of this, the Marine Corps should adopt a hybrid training and readiness assessment methodology for future security cooperation engagements. The joint force should replicate this methodology to synchronize assessment efforts across the DOD.

The Hybrid Training and Readiness Assessment Methodology (hereafter referred to as the “methodology”) was developed and implemented by the author during a SPMAGTF-CR-AF deployment in 2017 and was used during subsequent SPMAGTF-CR-AF deployments. The methodology was lauded by the SPMAGTF-CR-AF commander and reviewed by the Commandant of the Marine Corps in 2017. Senior staff members from the Center for Army Lessons Learned have requested to highlight the methodology as a recommended model for future partner engagements across the Department of the Army.

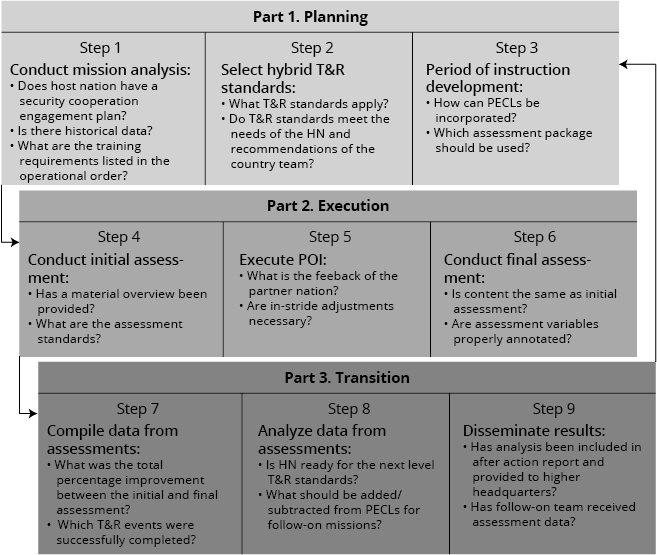

The methodology is a three-part process consisting of nine individual steps (figure 1). The three parts are planning, execution, and transition, which reflect the various stages of a security cooperation mission. Part one, planning, begins with the security cooperation team leaders and team chiefs completing steps one through three, which consist of mission analysis, training and readiness (T&R) standard selection, and period of instruction development. Part two, execution, encompasses steps four through six, which require an initial assessment, a period of instruction, and a final assessment. Part three, transition, includes steps seven through nine, which require TSC teams to compile data from their assessments, analyze the data, and then disseminate conclusions from the data. The outputs from part three feed back into part one as new inputs prior to reinitiating the mission analysis process. Each step of the methodology consists of several questions that should be answered before proceeding to the next step. This nine-step iterative process can be adjusted and tailored to meet the unique demands of each mission.

Figure 1. Hybrid training and readiness assessment methodology (nine-step process)

Source: Courtesy of the author, adapted by MCUP.

During part one, the team leaders and team chiefs depend on the embassy country team and MFEA regional planners to relay the specific training requests of the host nation. Operational- and tactical-level staff must work together to compare Marine Corps T&R standards with the partner nation training requirements. By using Marine Corps T&R standards as a baseline and adjusting the standards to meet the partner nation objectives, the security cooperation team employs a hybrid T&R approach, which is used over time to gauge the progress of the partner nation. During part two, security cooperation team leaders will select one of three different assessment packages to perform the initial and final assessments. The type of assessment chosen by the team depends on the type of mission.

The first assessment option is a written test, which is preferable for short missions conducted in a classroom setting. This approach is not always ideal, because language barriers can inhibit clear test translation; additionally, some partner nation trainees are averse to formal testing. A second assessment option is a practical application, which is ideally suited for longer missions requiring extensive field skills and infantry tactics training. This assessment model should be designed along the lines of the combat endurance test at the Marine Corps Infantry Officers Course, with separate skills stations and rigorous physical fitness tests. The benefit of this approach is that it avoids the appearance of formal testing while providing greater flexibility for trainers to evaluate the performance of partner nation military personnel. The disadvantages of this approach are twofold: first, the practical application assessment introduces more subjectivity into the evaluation process; and second, it requires additional trainers and larger training facilities, neither of which may be readily available.

A third assessment option is the combined approach, which incorporates elements of a written test with a practical application. This approach is ideally suited for multifaceted security cooperation missions that require a combination of academic training and field skills. This third assessment option encourages trainers to generate both quantitative and qualitative mission data while catering to a wider variety of learning styles.

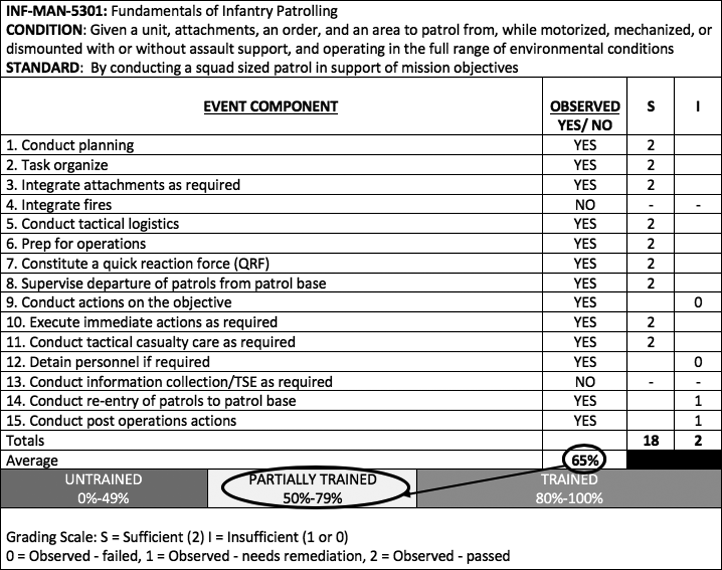

Conventional Training and Readiness Evaluation Process

The conventional approach to evaluating partner nation forces is centered on the Marine Corps Training and Readiness Standards and Performance Evaluation Checklists (PECLs). PECLs include conditions, standards, and event components, which are evaluated by trained instructors. During the author’s deployment, the most common PECL used was a standard infantry patrolling checklist that included 15 event components (figure 2). During the course of an evaluation, the trainer first marks “yes” or “no” next to the “observed” column on the checklist. As each event component is completed, the evaluator will determine if performance is “Sufficient” (S) or “Insufficient” (I). After determining the average score for each of the event components, the trainer assigns a grade of “untrained,” “partially trained,” or “trained.” Most Marines are familiar with this evaluation process and are accustomed to using PECLs as a baseline for monitoring improvement. The problem with implementing a conventional approach to evaluations is that it ignores the nuances of host nation training requirements. The conditions, standards, and event components of traditional PECLs should be adjusted to reflect the requests of the host nation.

Figure 2. Sample conventional performance evaluation checklist (PECL)

Source: NAVMC 3500.44A, Infantry Training and Readiness Manual (Washington, DC: Headquarters Marine Corps, 26 July 2012).
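The conventional scoring sequence described above (mark each event component as observed, rate it sufficient or insufficient, then assign an overall grade) can be sketched in a few lines. This is an illustrative sketch only: the article does not state the cutoffs that separate “untrained,” “partially trained,” and “trained,” so the thresholds `trained_at` and `partial_at` below are hypothetical, as is the function name.

```python
def grade_pecl(components, trained_at=0.8, partial_at=0.5):
    """Grade a checklist from (observed, rating) pairs.

    observed is True or False; rating is "S" (sufficient) or "I" (insufficient).
    The two thresholds are hypothetical and are not taken from the article.
    """
    # Only components the evaluator actually observed count toward the score.
    ratings = [rating for observed, rating in components if observed]
    if not ratings:
        raise ValueError("no observed event components to grade")
    if any(rating not in ("S", "I") for rating in ratings):
        raise ValueError("ratings must be 'S' or 'I'")

    fraction_sufficient = sum(r == "S" for r in ratings) / len(ratings)
    if fraction_sufficient >= trained_at:
        grade = "trained"
    elif fraction_sufficient >= partial_at:
        grade = "partially trained"
    else:
        grade = "untrained"
    return fraction_sufficient, grade


# Hypothetical 15-component patrolling checklist: 9 sufficient, 6 insufficient.
checklist = [(True, "S")] * 9 + [(True, "I")] * 6
fraction, grade = grade_pecl(checklist)
print(f"{fraction:.0%} sufficient: {grade}")
```

Keeping the thresholds as parameters lets a unit substitute whatever cutoffs its governing T&R manual prescribes.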

A Hybrid T&R Evaluation Process

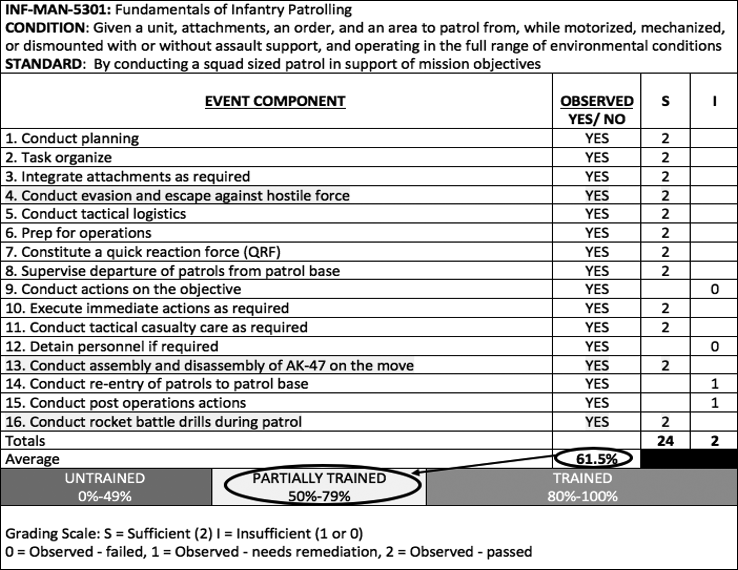

Figure 3 represents an example of a hybrid PECL, which replaces event components 4 and 13 and adds event component 16 (hybrid adjustments are highlighted gray). The hybrid PECL provides a standardized template that is adjusted to meet the demands of the partner force.

Figure 3. Sample “hybrid” performance evaluation checklist (PECL)

Source: NAVMC 3500.44A.
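The adjustment shown in figure 3, in which two baseline event components are replaced and one is added, can be expressed as a small transformation of the baseline checklist. The sketch below is a hypothetical illustration: the function name and all component descriptions are placeholders, since the actual checklist text appears only in the figures.

```python
def make_hybrid_pecl(baseline, replacements, additions):
    """Build a hybrid checklist from a baseline.

    baseline: dict mapping event component number to description.
    replacements: dict mapping existing component numbers to new descriptions.
    additions: list of descriptions appended after the last baseline component.
    Returns the hybrid checklist and the set of adjusted component numbers.
    """
    hybrid = dict(baseline)  # copy so the baseline standard stays untouched
    for number, description in replacements.items():
        if number not in hybrid:
            raise KeyError(f"component {number} is not in the baseline")
        hybrid[number] = description

    adjusted = set(replacements)
    next_number = max(hybrid) + 1
    for description in additions:
        hybrid[next_number] = description
        adjusted.add(next_number)
        next_number += 1
    return hybrid, adjusted


# Placeholder text: the real component wording is in figures 2 and 3.
baseline = {n: f"Baseline event component {n}" for n in range(1, 16)}
hybrid, adjusted = make_hybrid_pecl(
    baseline,
    replacements={4: "Partner-specific component 4", 13: "Partner-specific component 13"},
    additions=["Partner-specific component 16"],
)
print(sorted(adjusted))
```

Tracking the adjusted component numbers separately mirrors the gray highlighting in figure 3 and makes it easy to see how far a hybrid checklist has drifted from the baseline standard.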

First Lieutenant Robert Curtis used the methodology during a deployment with SPMAGTF-CR-AF 18.1. As the logistics combat element TSC coordinator, he experienced firsthand the utility of employing hybrid PECLs. According to First Lieutenant Curtis, “Using regular T&R standards is difficult because the partner nations are not equipped or organized like the Marine Corps; therefore, our standards do not always apply to them. Using hybrid T&Rs allows the teams to produce more focused and relevant assessments for the partner nation.”14

Process for Generating Assessment Data

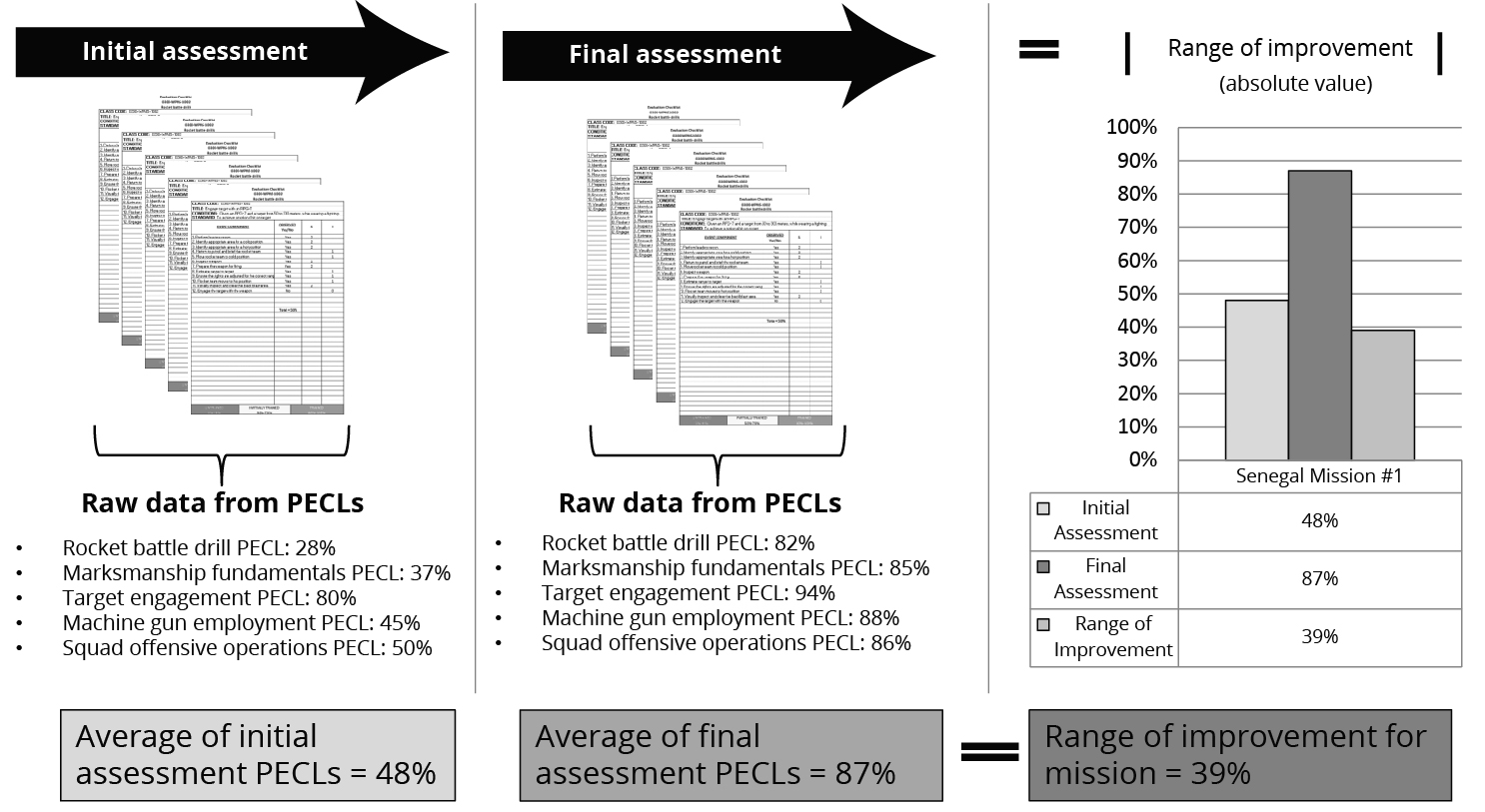

After completing the nine-step methodology, the security cooperation team will be able to produce quantifiable data that will shape future engagements. To produce this data, team leaders complete a simple calculation (figure 4). First, the team leader compiles the results from the initial assessment, whether a written test, a practical application test, or a combined test, and computes the average score across all students evaluated. In the case of the assessment data provided in figure 4, the initial average score is 48 percent. The team leader then compiles the results of the final assessment, ensuring that the same test is used for both the initial and final assessments, and computes the average of the scores using either the mean or the median, applying the same measure to both assessments. In the hypothetical illustration in figure 4, the final assessment average is 87 percent. The team leader then subtracts the initial average from the final average to derive the range of improvement for the mission. In the example provided in figure 4, the total range of improvement is 39 percentage points (87 percent minus 48 percent).
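The calculation above can be sketched as a short function. This is a minimal illustration, not the author's tool: the function name is hypothetical, and the individual student scores are invented so that their averages match the 48 percent and 87 percent figures cited in the text.

```python
from statistics import mean, median


def range_of_improvement(initial_scores, final_scores, method="mean"):
    """Return (initial average, final average, improvement in percentage points)."""
    if not initial_scores or not final_scores:
        raise ValueError("both assessments need at least one score")
    averages = {"mean": mean, "median": median}
    if method not in averages:
        raise ValueError("method must be 'mean' or 'median'")
    average = averages[method]  # same measure applied to both assessments

    initial_average = average(initial_scores)
    final_average = average(final_scores)
    return initial_average, final_average, final_average - initial_average


# Hypothetical student scores (percent) on the same test, before and after training.
initial = [40, 45, 50, 52, 53]
final = [80, 85, 88, 90, 92]

before, after, gain = range_of_improvement(initial, final)
print(f"initial {before}%, final {after}%, improvement {gain} percentage points")
```

Expressing the result in percentage points rather than as a percent change keeps the figure comparable across missions that start from different baselines.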

The data presented in figure 4 represents a single security cooperation mission. Regional planners who are responsible for multiple missions should collate the assessment data in a bar chart that depicts the range of improvement across all missions during a given period, as was done with the data captured during the SPMAGTF-CR-AF 17.1 TSC missions (figure 5).
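A regional planner could collate several missions into a simple chart along the following lines. This sketch renders a plain-text bar chart so that it needs no plotting library; the function name, mission labels, and scores are all hypothetical and do not reproduce the data in figure 5.

```python
def improvement_chart(missions, width=40):
    """Return one text bar per mission showing improvement in percentage points.

    missions: dict mapping a mission label to (initial average, final average).
    width: number of characters that represents a 100-point improvement.
    """
    lines = []
    for name, (initial_average, final_average) in missions.items():
        gain = final_average - initial_average
        # Negative gains get an empty bar; the signed number still shows them.
        bar = "#" * round(max(gain, 0) / 100 * width)
        lines.append(f"{name:<12} {bar} {gain:+.0f}")
    return lines


# Hypothetical missions; averages are percentages from initial and final assessments.
missions = {
    "Mission A": (48, 87),
    "Mission B": (60, 75),
    "Mission C": (35, 70),
}

for line in improvement_chart(missions):
    print(line)
```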

Figure 4. Formula for generating assessment data

Source: Courtesy of the author, adapted by MCUP.

Figure 5. Data captured during the SPMAGTF-CR-AF 17.1 TSC missions

Source: Courtesy of the author, adapted by MCUP.

Arguments in Support of the Hybrid Training and Readiness Assessment Methodology

Perhaps the most compelling reason to enact the methodology is to promote fiscal accountability and responsibility within the Marine Corps and the DOD. Among the Services, the Marine Corps is known for its propensity to conserve scarce resources. In the early nineteenth century, Commandant Archibald Henderson popularized the long-held Marine Corps mantra of “fighting on the cheap.”15 Henderson successfully lobbied for Marine Corps involvement in the Seminole Wars (1817–18, 1835–42, 1855–58) and the Mexican War (1846–48) largely because he was able to convince the president that the Marine Corps could accomplish the mission with fewer resources than the Army.16 A similar mentality persists in the modern Marine Corps. During a deployment in 2017, Marines with SPMAGTF-CR-AF saved approximately $700,000 in a $3,000,000 operational budget by implementing the aforementioned assessment methodology. Other Marine Corps units that employed the methodology during SPMAGTF-CR-AF rotations also garnered considerable cost savings. The data produced during these deployments equipped senior leadership with the information necessary to eliminate extraneous programs and increase overall efficiency. By broadly integrating the Hybrid T&R Assessment Methodology into all partner nation engagements, the Marine Corps will further establish its reputation as a force that is ruthlessly efficient and frugal.

In addition to promoting fiscal responsibility, the methodology will also enhance planning across the tactical, operational, and strategic levels of war. In a recent Rand Corporation study, researchers identified several challenges facing the DOD as it continues to implement the AM&E framework. One particularly daunting challenge mentioned in the report is the process of comparing tactical security cooperation activities with U.S. policy objectives and determining if the activities have fulfilled the objectives.17 The authors of the study suggest that “a standardized [assessment, monitoring, and evaluation] AM&E regimen applied across activities helps policymakers and implementers make more informed decisions that maximize immediate outcomes and help ensure programmatic sustainability and impact in the longer term.”18 The report also mentions that assessments can provide important insight for planners: “If fully implemented, partner country capability/interoperability assessments have the potential to provide useful information to security cooperation planners and programmers who lack domain expertise or Service perspectives on what is needed from partner militaries.”19 Military planners are often criticized for their failure to harmonize the strategic, operational, and tactical levels of war. The methodology makes progress in achieving a more integrated approach to TSC planning.

Counterarguments

Despite the many benefits of adopting the methodology, there are also some drawbacks. One potential problem with the methodology is that it can create a culture of chasing the data, where security cooperation teams and partner forces are motivated by test performance at the expense of genuine teaching and learning. Although this challenge is worth consideration, it is not enough to overcome the need for assessments. When properly trained, security cooperation instructors recognize that assessments are only one aspect of effective education. A healthy educational culture is established by team leadership, and trainers must be willing to adjust the format and frequency of the assessments to prevent unhealthy obsession about data. One method for reducing the focus on assessments during partner force engagements is to ensure that the assessment results are anonymous. Reflecting on his time as a security cooperation team leader in Gabon and Ghana in 2017, First Lieutenant Brendan Gallahue summarized his approach to testing: “At the end of the initial assessment, we debriefed the group on how they performed and explained the average score for the collective unit, without posting each individual’s scores.”20 Security cooperation teams can mitigate an unhealthy assessment culture by promoting group-wide improvement and retaining close control of assessment results.

Another counterargument is that the Marine Corps lacks the capacity to fully train security advisors on more complex hybrid T&R standards. Proponents of this point of view claim that the predeployment workup cycle is already limited for the SPMAGTFs and Marine Expeditionary Units (MEUs), and therefore units will not have the bandwidth to conduct ancillary security cooperation training. While some hybrid standards may require additional training, most of the hybrid PECLs include material that is familiar to conventional Marine Corps units. If more complex hybrid standards cannot be taught organically by individuals from a deploying unit, the unit can request individual augments to fill low-density skill sets. During previous security cooperation engagements, Marine units have successfully requested support from 2d Reconnaissance Battalion and 2d Combat Engineer Battalion to provide specialized skills training.

First Lieutenant Gallahue confirmed the feasibility of training security cooperation advisors and noted that his team was able to seamlessly integrate the methodology into their training plan. After his mission, First Lieutenant Gallahue observed that “using the Hybrid T&R Assessment Methodology actually made the mission execution a lot simpler than we anticipated. My team successfully built a training program around an initial assessment, where we established a baseline and culminated with a final exercise to measure the progress of our partners and gauge the effectiveness of the training.”21 The methodology is likely to cause some friction initially, but it will ultimately simplify the efforts of security cooperation trainers.

Summary

Anecdotal, experiential, and empirical evidence all suggest that the Marine Corps and DOD support the need for a tactical-level assessment methodology. Despite repeated mandates from Congress to account for the billions of dollars’ worth of security cooperation expenditures, only marginal progress has been achieved. At this point, the return on investment for the security cooperation enterprise is unclear, at best. The Hybrid Training and Readiness Assessment Methodology is a tool that can radically shift the investment proposition of the enterprise from one marked by tepid returns to a position of maximum return. The methodology fills a critical role in connecting tactical, operational, and strategic planning while also promoting fiscal responsibility and accountability. Sherlock Holmes’ famous aphorism summarizes the problem and the potential solution for what ails security cooperation efforts: “It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts.”22 Indeed, by leveraging facts and data, the security cooperation enterprise will transition from seeking alpha to at last achieving alpha.

Endnotes

1. “Alpha,” Financial Dictionary, accessed 15 January 2019.

2. Marine Corps Operating Concept: How an Expeditionary Force Operates in the 21st Century (Washington, DC: Headquarters Marine Corps, 2016), 26.

3. Fiscal Year (FY) 2019 President’s Budget: Security Cooperation Consolidated Budget Display, February 2018 (Washington, DC: Office of the Secretary of Defense, 2018), 3–4.

4. Jefferson P. Marquis et al., Developing an Assessment, Monitoring, and Evaluation Framework for U.S. Department of Defense Security Cooperation (Santa Monica, CA: Rand, 2016), 1, https://doi.org/10.7249/RR1611.

5. Security Cooperation, JP 3-20 (Washington, DC: Joint Chiefs of Staff, 2017), V.

6. Fiscal Year (FY) 2019 President’s Budget, 4–5.

7. Taylor P. White, “Security Cooperation: How It All Fits,” Joint Force Quarterly, no. 72 (2014): 107.

8. Summary of the 2018 National Defense Strategy of the United States of America: Sharpening the American Military’s Competitive Edge (Washington, DC: Department of Defense, 2018), 9.

9. Security Cooperation, JP 3-20 (Washington, DC: Joint Chiefs of Staff, 2017), V-1.

10. DOD Instruction 5132.14: Assessment, Monitoring, and Evaluation Policy for the Security Cooperation Enterprise (Washington, DC: Department of Defense, 2017), 12.

11. Marine Corps Order 5710.6C, Marine Corps Security Cooperation (Washington, DC: Headquarters Marine Corps, 24 June 2014), 1–3.

12. Marine Corps Operations, MCDP 1-0 (Washington, DC: Headquarters Marine Corps, 2017), 3-24.

13. “Galileo Galilei Quotations,” Mathematical Association of America, accessed 15 January 2019.

14. 1stLt Robert Curtis, email message to author, 20 January 2019.

15. LtGen Victor H. Krulak, USMC (Ret), First to Fight: An Inside View of the U.S. Marine Corps (Annapolis, MD: Naval Institute Press, 1999), 141.

16. Krulak, First to Fight, 141.

17. Marquis et al., Developing an Assessment, Monitoring, and Evaluation Framework for U.S. Department of Defense Security Cooperation, 10.

18. Marquis et al., Developing an Assessment, Monitoring, and Evaluation Framework for U.S. Department of Defense Security Cooperation, 4.

19. Marquis et al., Developing an Assessment, Monitoring, and Evaluation Framework for U.S. Department of Defense Security Cooperation, 37.

20. 1stLt Brendan Gallahue, email message to author, 19 January 2019, hereafter Gallahue email.

21. Gallahue email.

22. Arthur Conan Doyle, The Adventures of Sherlock Holmes (London: George Newnes, 1892).