David E. McCullin, DM

10 November 2021

https://doi.org/10.36304/ExpwMCUP.2021.07

Abstract: This article is the second in a four-part series that discusses the integration of the evidence-based framework and military judgment and decision-making (MJDM). The series is written as a conceptualization and implementation of the presidential memorandum on restoring faith in government dated 27 January 2021. The focus of the series is on integrating the evidence-based framework into defense planning and decision-making as an operational art. The series frames this integration in terms of basing decisions on the best evidence in four categories: subject matter expertise, stakeholder input, organizational data, and scholarship. It also recognizes that scholarship is the single component within the evidence-based framework not currently integrated into military planning.

Keywords: evidence-based framework, evidence-based management, EBMgt, evidence-based practice, military planning, military judgment and decision-making, MJDM, systematic review

In a presidential memorandum on restoring faith in government dated 27 January 2021, U.S. president Joseph R. Biden Jr. stated, “It is the policy of my Administration to make evidence-based decisions guided by the best available science and data.”1 The memorandum charged the Office of Science and Technology Policy with the responsibility of ensuring “the highest level of integrity in all aspects of executive branch involvement with scientific and technological processes.”2 The strategy it sets out is for U.S. federal agencies to integrate the evidence-based framework into planning and policy decision-making. The memorandum also reaffirms the Foundations for Evidence-Based Policymaking Act of 2018.3

Definitions

The following definitions are provided here to offer context and clarity to the terms used in this article. They represent a compilation of evidence and experiences.

1. Critically appraised topic (CAT): provides a quick and succinct assessment of what is known (and not known) in the scientific literature about an intervention or practical issue by using a systematic methodology to search and critically appraise primary studies.4

2. Research question: a question developed from a pending decision to focus a research effort that is designed to create evidence.5

3. Evidence-based management (EBMgt): a decision-making framework that draws evidence from experience, stakeholder input, organizational data, and scholarship.6

4. Evidence-based practice framework: the employment of a methodology of asking, acquiring, appraising, aggregating, applying, and assessing. This practice is often executed in a systematic review.7

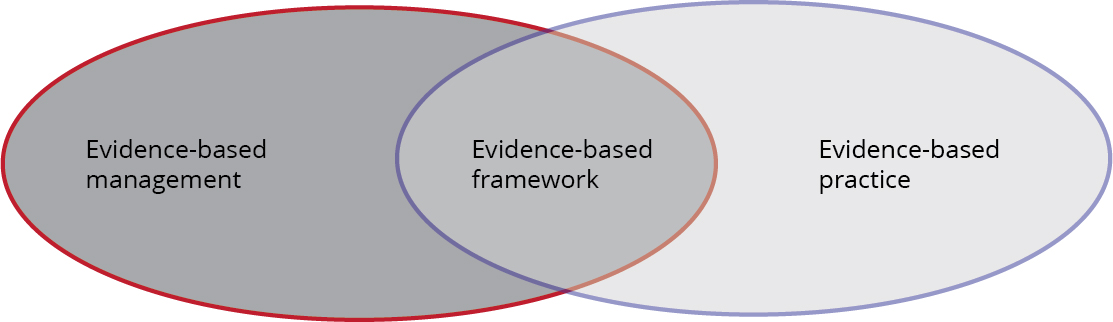

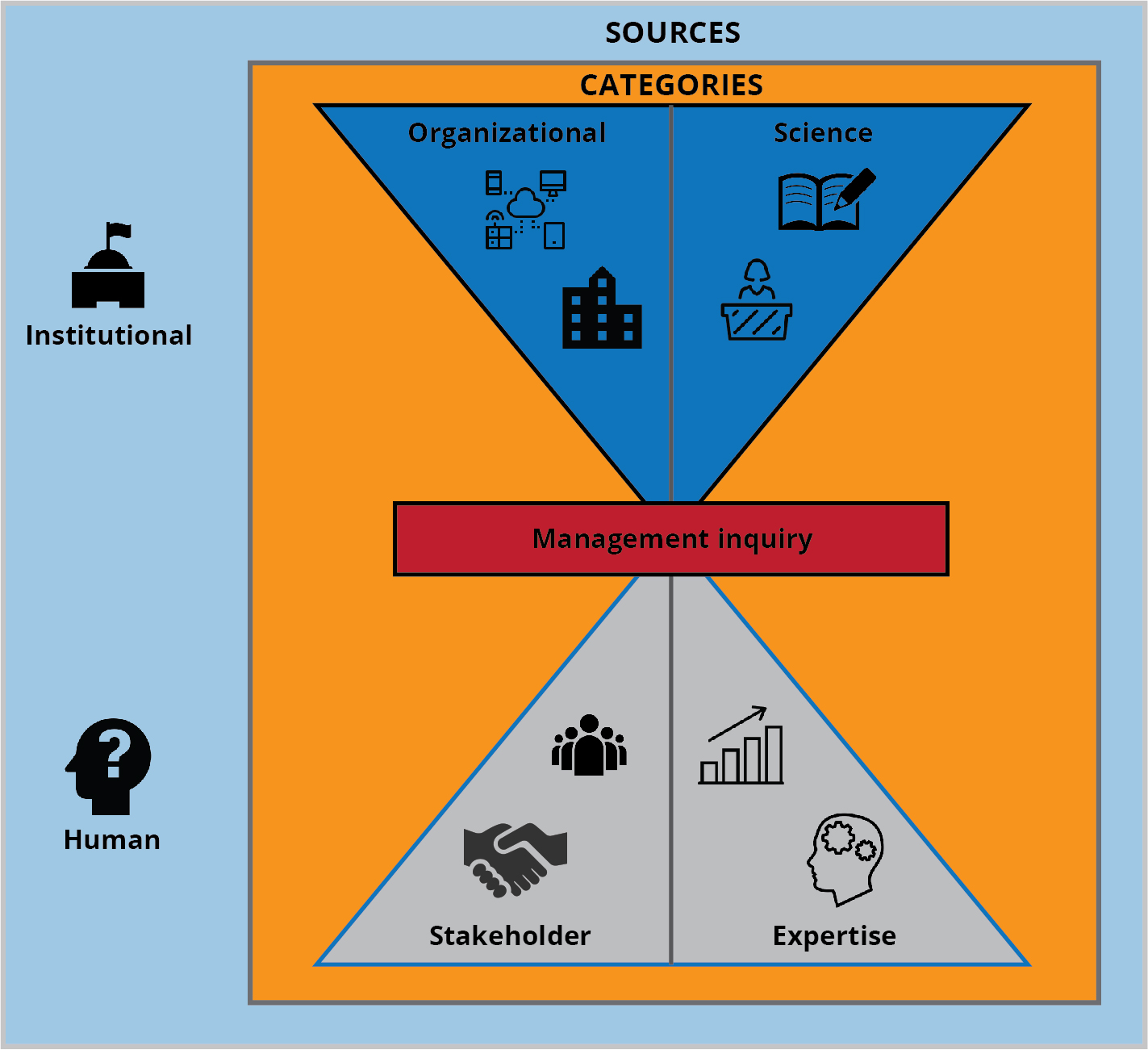

5. Evidence-based framework: The integration of EBMgt and evidence-based practice (figure 1).

6. Research framework logic: a portfolio of logic frameworks that defines specific parameters to guide the development of a research question and validate data set content. A common thread within the portfolio is the use of variables that define who the study impacts, the instrument(s) used, and what is expected or what the study will produce (table 1).8

7. Stakeholders: individuals or organizations directly impacted by a judgment or decision.9

8. Military judgment and decision-making (MJDM): a spectrum of decision-making processes related to the arts and sciences of national defense. Within this spectrum, quantitative and qualitative processes are used to make decisions based on multiple courses of action.

9. Theoretical framework: links theory and practice. The author of a study selects one or more theories of social science research to help explain how a study is linked to a practical approach identified in a research question.10

10. Systematic review: a method of social science research that follows the scientific method. Systematic reviews explore relationships between variables to address hypothetical research questions.11

11. Conceptual framework: an explanation of how the constructs of a study are strategically used in addressing the research question. A conceptual framework is often accompanied by a sketch showing how the constructs of a study are related.12

12. Organizational complex adaptive systems (OCAS): systems made up of elements such as material, labor, and equipment that are guided by specified actions. OCASs occur when organizations create habitual networks of elements to accomplish a societal goal. They face societal pressures to constantly evolve. They are vulnerable to constraints that impede system functioning, which causes the elements to adapt the system.13

Figure 1. Evidence-based framework

Courtesy of the author, adapted by MCUP.

Table 1. Research logic frameworks

| Framework | Stands for | Source | Discipline/type of question |
|---|---|---|---|
| BeHEMoTh | Behavior of interest; Health context; Exclusions; Models or theories | Andrew Booth and Christopher Carroll, “Systematic Searching for Theory to Inform Systematic Reviews: Is It Feasible? Is It Desirable?,” Health Information and Libraries Journal 32, no. 3 (June 2015): 220–35, https://doi.org/10.1111/hir.12108. | Questions about theories |
| CHIP | Context; How; Issues; Population | Rachel Shaw, “Conducting Literature Reviews,” in Doing Qualitative Research in Psychology: A Practical Guide, ed. Michael A. Forester (London: Sage Publications, 2010), 39–52. | Psychology, qualitative |
| CIMO | Context; Intervention; Mechanisms; Outcomes | David Denyer and David Tranfield, “Producing a Systematic Review,” in The SAGE Handbook of Organizational Research Methods, eds. David A. Buchanan and Alan Bryman (Thousand Oaks, CA: Sage Publications, 2009), 671–89. | Management, business, administration |
| CLIP | Client group; Location of provided service; Improvement/information/innovation; Professionals | Valerie Wildridge and Lucy Bell, “How CLIP became ECLIPSE: A Mnemonic to Assist in Searching for Health Policy/Management Information,” Health Information and Libraries Journal 19, no. 2 (2002): 113–15, https://doi.org/10.1046/j.1471-1842.2002.00378.x. | Librarianship, management, policy |
| COPES | Client-oriented; Practical; Evidence; Search | Leonard E. Gibbs, Evidence-Based Practice for the Helping Professions: A Practical Guide with Integrated Multimedia (Pacific Grove, CA: Brooks/Cole-Thomson Learning, 2003). | Social work, health care, nursing |
| ECLIPSE | Expectation; Client; Location; Impact; Professionals; Service | Valerie Wildridge and Lucy Bell, “How CLIP became ECLIPSE: A Mnemonic to Assist in Searching for Health Policy/Management Information,” Health Information and Libraries Journal 19, no. 2 (2002): 113–15, https://doi.org/10.1046/j.1471-1842.2002.00378.x. | Management, services, policy, social care |
| PEO | Population; Exposure; Outcome | Khalid S. Khan et al., Systematic Reviews to Support Evidence-Based Medicine: How to Review and Apply Findings of Healthcare Research (London: Royal Society of Medicine Press, 2003). | Qualitative |
| PECODR | Patient/population/problem; Exposure; Comparison; Outcome; Duration; Results | Martin Dawes et al., “The Identification of Clinically Important Elements within Medical Journal Abstracts: Patient_Population_Problem, Exposure_Intervention, Comparison, Outcome, Duration and Results (PECODR),” Journal of Innovation in Health Informatics 15, no. 1 (2007): 9–16, https://doi.org/10.14236/jhi.v15i1.640. | Medicine |
| PerSPECTiF | Perspective; Setting; Phenomenon of interest/problem; Environment; Comparison (optional); Time/timing; Findings | Andrew Booth et al., “Formulating Questions to Explore Complex Interventions within Qualitative Evidence Synthesis,” BMJ Global Health 4, suppl. 1 (2019), https://doi.org/10.1136/bmjgh-2018-001107. | Qualitative research |
| PESICO | Person; Environments; Stakeholders; Intervention; Comparison; Outcome | Ralf W. Schlosser and Therese M. O’Neil-Pirozzi, “Problem Formulation in Evidence-Based Practice and Systematic Reviews,” Contemporary Issues in Communication Sciences and Disorders 33 (Spring 2006): 5–10. | Augmentative and alternative communication |
| PICO | Patient; Intervention; Comparison; Outcome | W. Scott Richardson et al., “The Well-Built Clinical Question: A Key to Evidence-Based Decisions,” ACP Journal Club 123, no. 3 (November/December 1995), A12–A13. | Clinical medicine |
| PICO+ | Patient; Intervention; Comparison; Outcome; + Context, patient values, and preferences | Scott Bennett and John W. Bennett, “The Process of Evidence‐Based Practice in Occupational Therapy: Informing Clinical Decisions,” Australian Occupational Therapy Journal 47, no. 4 (2000): 171–80, https://doi.org/10.1046/j.1440-1630.2000.00237.x. | Occupational therapy |
| PICOC | Patient; Intervention; Comparison; Outcome; Context | Mark Petticrew and Helen Roberts, Systematic Reviews in the Social Sciences: A Practical Guide (Oxford, UK: Blackwell Publishing, 2006). | Social sciences |
| PICOS | Patient; Intervention; Comparison; Outcome; Study type | David Moher et al., “Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement,” PLoS Medicine 6, no. 7 (2009), https://doi.org/10.1371/journal.pmed.1000097. | Medicine |
| PICOT | Patient; Intervention; Comparison; Outcome; Time | W. Scott Richardson et al., “The Well-Built Clinical Question: A Key to Evidence-Based Decisions,” ACP Journal Club 123, no. 3 (November/December 1995), A12–A13. | Education, health care |
| PICO (specific to diagnostic tests) | Patient/participants/population; Index tests; Comparator/reference tests; Outcome | Kyung Won Kim et al., “Systematic Review and Meta-Analysis of Studies Evaluating Diagnostic Test Accuracy: A Practical Review for Clinical Researchers - Part I: General Guidance and Tips,” Korean Journal of Radiology 16, no. 6 (November/December 2015): 1175–87, https://doi.org/10.3348/kjr.2015.16.6.1175. | Diagnostic questions |
| PIPOH | Population; Intervention; Professionals; Outcomes; Health care setting/context | The ADAPTE Process: Resource Toolkit for Guideline Adaptation, v. 2.0 (Berlin, Germany: Guideline International Network, 2010). | Screening |
| ProPheT | Problem; Phenomenon of interest; Time | Andrew Booth et al., Guidance on Choosing Qualitative Evidence Synthesis Methods for Use in Health Technology Assessments of Complex Interventions (Brussels, Belgium: European Commission; Integrate-HTA, 2016); and Andrew Booth, Anthea Sutton, and Diana Papaioannou, Systematic Approaches to a Successful Literature Review, 2d ed. (London: Sage Publications, 2016). | Social sciences, qualitative, library science |
| SPICE | Setting; Perspective; Interest; Comparison; Evaluation | Andrew Booth, “Clear and Present Questions: Formulating Questions for Evidence Based Practice,” Library Hi Tech 24, no. 3 (2006): 355–68, https://doi.org/10.1108/07378830610692127. | Library and information sciences |
| SPIDER | Sample; Phenomenon of interest; Design; Evaluation; Research type | Allison Cooke, Debbie Smith, and Andrew Booth, “Beyond PICO: The SPIDER Tool for Qualitative Evidence Synthesis,” Qualitative Health Research 22, no. 10 (2012): 1435–43, https://doi.org/10.1177/1049732312452938. | Health, qualitative research |
| WWH | Who; What; How | What was done (i.e., intervention, exposure, policy, or phenomenon)? How does the what affect the who? | |

Courtesy of the author, adapted by MCUP.
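To show how a research logic framework structures a question, the sketch below encodes the PICOC elements from table 1 and assembles them into a single question. The element names follow the table; the `PicocQuestion` class, the question template, and the example values are hypothetical illustrations and are not taken from this series.

```python
from dataclasses import dataclass, fields

@dataclass(frozen=True)
class PicocQuestion:
    """Hypothetical container for the PICOC elements listed in table 1."""
    population: str    # "Patient" in table 1: who the study impacts
    intervention: str  # what is introduced or examined
    comparison: str    # the alternative the intervention is compared with
    outcome: str       # what the study is expected to produce
    context: str       # the setting in which the question applies

    def missing_elements(self):
        """Return the names of any elements left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def to_question(self):
        """Assemble the elements into one answerable question."""
        missing = self.missing_elements()
        if missing:
            raise ValueError(f"incomplete question, missing: {missing}")
        return (f"In {self.context}, does {self.intervention}, compared with "
                f"{self.comparison}, affect {self.outcome} for {self.population}?")

# Hypothetical example values.
q = PicocQuestion(
    population="joint planning staffs",
    intervention="a rapid evidence assessment during course of action analysis",
    comparison="planning based on subject matter expertise alone",
    outcome="decision quality",
    context="operational-level planning",
)
print(q.to_question())
```

Refusing to build a question when an element is blank mirrors the role of the framework as a checklist: every variable must be defined before the search begins.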

Article Overview

This article is the second in a four-part series that explores the integration of the evidence-based framework into MJDM. The first article in this series presented research on the feasibility of an integration of MJDM and EBMgt, using a CAT to explore the potential for integration. The findings of the CAT demonstrated that such an integration was both feasible and practical.14 As a result of that study, three additional articles were planned to conceptualize how that integration might be implemented. The third and fourth articles in the series will explain the importance of building data sets and evaluating evidence, respectively. This series is being published during a time when a new U.S. presidential administration has issued a directive on restoring trust in government through scientific integrity and evidence-based policy making. This directive outlines new organizational decision-making policies and procedures for all government agencies.15 This series aims to shed light on how evidence-based tools could be used to implement the integration of MJDM and the evidence-based framework.

This article speaks to President Biden’s directive on restoring trust in government with specific application to integrating the evidence-based framework into military planning. The purpose of this article is to explain the scope of evidence-based decision-making and make specific recommendations on how it could be integrated into military planning. This article is divided into three major sections. The first explains the scope of evidence-based decision-making in terms of its origins, its evolution in management practice, and systematic reviews. The second details the scope of MJDM, as outlined in Joint Planning, Joint Publication (JP) 5-0.16 The third discusses how this integration could occur.

Section I: The Scope of Evidence-Based Decision-Making

This section explains the components of evidence-based decision-making. Each component is explained in detail to provide the context that will reveal how EBMgt functions as a system to create evidence for decision-making. The discussion begins with the emergence of EBMgt. This will be followed by discussions on the evidence-based framework, systematic review, and information taxonomy methodologies. The final component discussed is theoretical and conceptual framing. With an understanding of these components, the full context of evidence-based decision-making and the role of systematic review methodologies will be apparent.

The Emergence of Evidence-Based Management

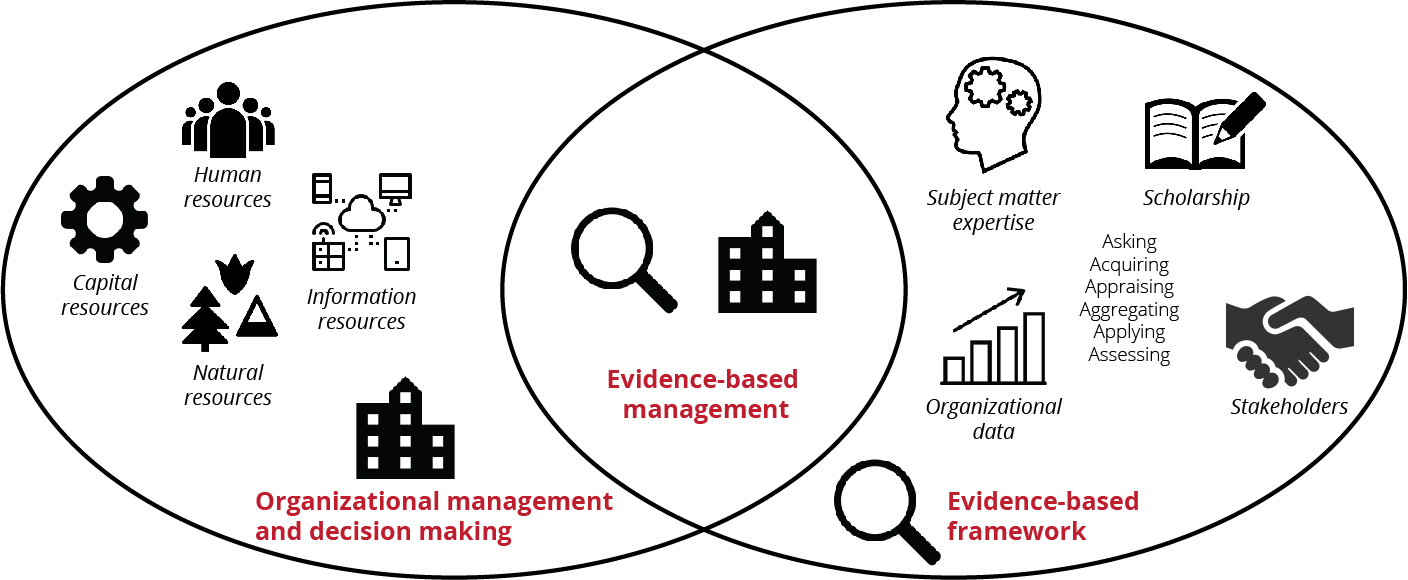

Evidence-based management (EBMgt) emerges from a need to improve the quality of organizational decision-making by making decisions through the judicious use of the best available information. In EBMgt, evidence is created from science, subject matter expertise, organizational data, and stakeholder input. EBMgt uses the evidence-based framework to create evidence for decision-making. The evidence-based framework overlaps with organizational management practices. Where the overlap occurs, EBMgt is initiated. Figure 2 illustrates this concept.

Figure 2. Scope of evidence-based decision-making

Courtesy of the author, adapted by MCUP.

EBMgt is an adaptation of evidence-based medicine, which uses data to inform decision-making among medical practitioners. Evidence-based medicine emerged from the need to apply relevant scientific medical studies to patient treatment decisions. This type of decision-making was enabled by the emergence of databases such as PubMed, PubMed Central, Expertia, Medica, Embase, Cochrane, and UpToDate, which archive vast stores of medical research drawn from hundreds of thousands of volumes of medical journals.

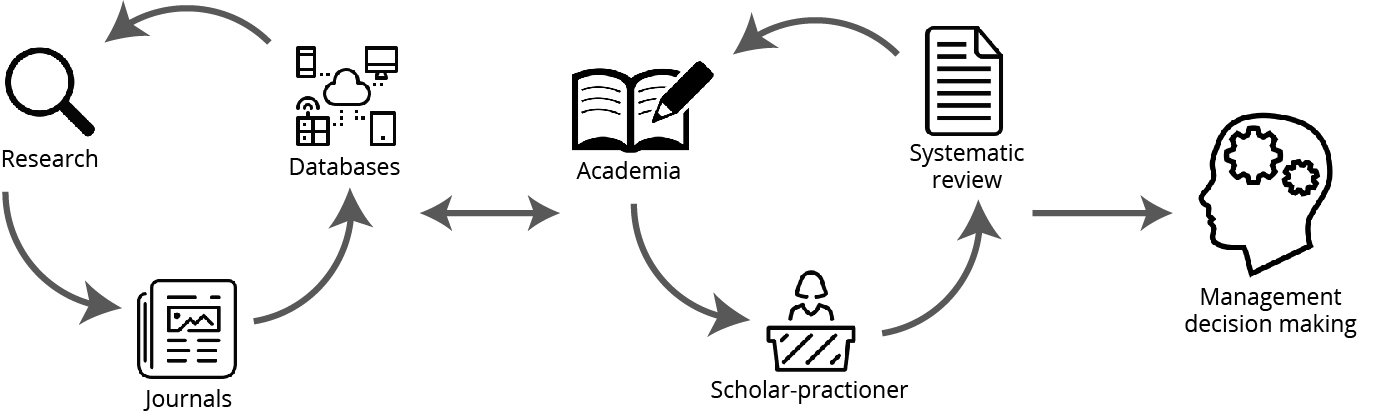

This practice of integrating medical evidence in decision-making was adapted for management decision-making with the emergence of vast databases of social science research. These databases warehoused hundreds of thousands of studies in academic journals, all of which vary greatly in terms of the level of recognizable scholarship they contain. The emergence of these databases created the challenge of translating research into usable formats for decision-making. Institutions of higher learning began addressing this problem by creating curricula to develop scholar-practitioners.

Through academic programs, the scholar-practitioner has emerged as the professional who employs the evidence-based framework. They use research from scholarly peer-reviewed journals archived in databases such as EBSCO, ProQuest, and Google Scholar. From these databases, the scholar-practitioner builds a data set to answer a specific management inquiry.

The primary tool of the scholar-practitioner for informing management inquiries is the systematic review. Figure 3 illustrates how the systematic review links academia and practice, as well as how the practitioner serves as the primary liaising agent with the skill set to create evidence using the systematic review.

Figure 3. Evolution and application of EBMgt

Courtesy of the author, adapted by MCUP.

This judicious use of information is the impetus for applying the systematic review. According to Rob B. Briner, David Denyer, and Denise M. Rousseau, evidence-based research enables decision-making using the evidence-based framework by combining the systematic gathering, evaluation, and integration of scholarly research with practical evidence.17 Andrew H. Van de Ven and Paul E. Johnson have posited that management research-practice gaps often occur as knowledge transfer problems. In other words, a gap in management practice persists because the knowledge needed to address it has not been transferred to the decision-maker. The systematic review is one mechanism for transferring that knowledge to a problem identified through the evidence-based framework.18

Evidence-Based Framework and Systematic Review

The findings of the first article in this series suggested the emergence of scholar-practitioners within the U.S. Department of Defense (DOD) and offered recommendations that focused on the professional development of these scholar-practitioners by teaching and promoting systematic review methodologies to integrate MJDM and the evidence-based framework.19

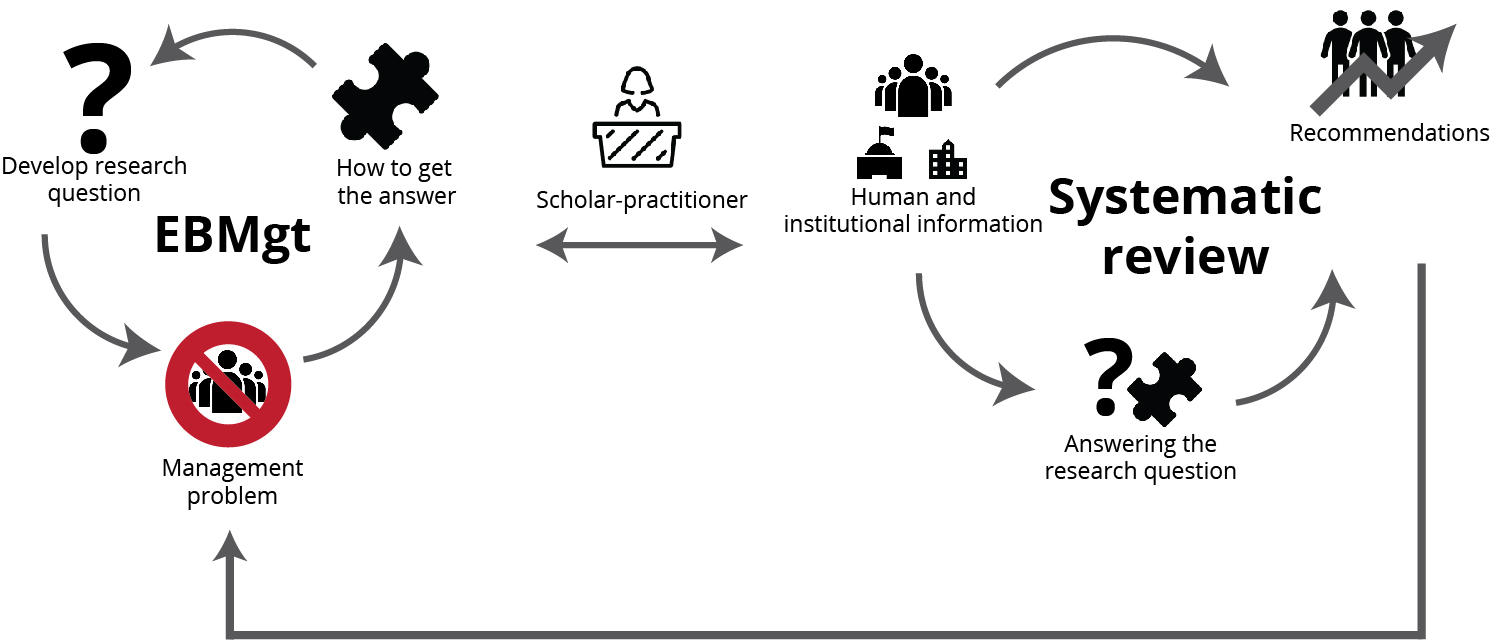

The chief dynamics linking the scholar-practitioner, the evidence-based framework, and the systematic review together in terms of decision-making are that the systematic review is the mechanism for employing the evidence-based framework and that the evidence-based framework employs systematic review. In management practice, the systematic review guides and documents research as part of the evidence-based framework. The evidence-based framework brings the best available evidence to decision-making, while the systematic review is the tool for creating that evidence. Figure 4 illustrates this dynamic. The scholar-practitioner executes these dynamics in a continuous process of identifying problems, framing questions, creating evidence, and making recommendations for decision-making.

Figure 4. Dynamics of EBMgt and systematic review

Courtesy of the author, adapted by MCUP.

Information Taxonomy

The judicious use of information involves creating the best available evidence. This evidence is created from four categories of information, two of which are institutional sources and two of which are human sources. The institutional sources are organizational data and science from original research. The human sources are subject matter expertise and stakeholder input. These informational sources and categories are both supplementary and complementary, as shown in figure 5.

Figure 5. Information taxonomy

Courtesy of the author, adapted by MCUP.

The sources are supplementary in that they indicate the types of information needed, suggest where information may be located, and advise on how to obtain it. The informational categories are complementary in that they emphasize the specific characteristics of informational sources. In order to locate and collect the information needed, the human and institutional sources must be carefully considered. To fully implement the evidence-based framework, all four informational categories must be represented in the final decision. According to Eric Barends, Denise M. Rousseau, and Rob B. Briner, a critical evaluation of the best available research evidence, as well as the perspectives of those people who might be affected by the decision, epitomizes the core concept of EBMgt.20
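The four-category taxonomy, and the requirement that all four categories be represented, can be sketched as a lookup table plus a coverage check. This is a minimal illustration under stated assumptions: the category labels follow the text, while the `(category, description)` pair format, the function names, and the example evidence items are hypothetical.

```python
from enum import Enum

class SourceType(Enum):
    INSTITUTIONAL = "institutional"
    HUMAN = "human"

# The four categories of information and the source type of each (figure 5).
TAXONOMY = {
    "organizational data": SourceType.INSTITUTIONAL,
    "scholarship": SourceType.INSTITUTIONAL,
    "subject matter expertise": SourceType.HUMAN,
    "stakeholder input": SourceType.HUMAN,
}

def missing_categories(evidence):
    """Return the categories with no supporting item in `evidence`.

    `evidence` is a list of hypothetical (category, description) pairs.
    """
    unknown = {cat for cat, _ in evidence if cat not in TAXONOMY}
    if unknown:
        raise ValueError(f"unrecognized categories: {sorted(unknown)}")
    return set(TAXONOMY) - {cat for cat, _ in evidence}

def group_by_source(evidence):
    """Group evidence descriptions by institutional or human source."""
    groups = {source: [] for source in SourceType}
    for cat, description in evidence:
        groups[TAXONOMY[cat]].append(description)
    return groups

# Hypothetical evidence gathered for a planning decision.
evidence = [
    ("organizational data", "unit readiness reports"),
    ("subject matter expertise", "logistics officer assessment"),
    ("stakeholder input", "survey of affected commanders"),
]
print(missing_categories(evidence))
```

In this example the check flags scholarship as the missing category, which mirrors the series' observation that scholarship is the component not currently integrated into military planning.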

Details of Evidence-Based Practice Framework

Evidence-based practice creates and collects information with a framework of asking, acquiring, appraising, aggregating, applying, and assessing.21

Asking

Asking translates a practical issue or problem into an answerable question. The asking process involves developing and applying a logic framework, which contains bullet point constructs that guide the development of the rationale for a study. The rationale is written into the background section common to all systematic review types, and the research question emerges alongside it.

Acquiring

A rigorous and transparent search process, based on the logic framework and research question, is an integral part of all systematic review methodologies. In this process, the antecedents of the search help develop the screening criteria that determine which studies are included in or excluded from the final data set. The search process ultimately defines the data set.
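A minimal sketch of screening against predefined criteria, assuming each search result has been reduced to a few structured fields. The `Record` fields, the criteria, and the example records are hypothetical; the point illustrated is that the criteria are fixed before screening and every exclusion is logged with its reason, which supports the transparency the text calls for.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    title: str
    year: int
    peer_reviewed: bool
    language: str

# Hypothetical criteria, fixed before any search results are screened.
INCLUSION = {
    "published 2000 or later": lambda r: r.year >= 2000,
    "peer reviewed": lambda r: r.peer_reviewed,
}
EXCLUSION = {
    "not in English": lambda r: r.language != "en",
}

def screen(records):
    """Split records into included studies and a log of excluded ones.

    Each exclusion is logged with the first criterion that triggered it,
    so the screening decisions can be reported transparently.
    """
    included, excluded = [], []
    for r in records:
        reason = next((f"fails inclusion: {name}"
                       for name, test in INCLUSION.items() if not test(r)), None)
        if reason is None:
            reason = next((f"meets exclusion: {name}"
                           for name, test in EXCLUSION.items() if test(r)), None)
        if reason is None:
            included.append(r)
        else:
            excluded.append((r.title, reason))
    return included, excluded

records = [
    Record("Study A", 2015, True, "en"),
    Record("Study B", 1995, True, "en"),
    Record("Study C", 2018, False, "en"),
    Record("Study D", 2020, True, "fr"),
]
included, excluded = screen(records)
for title, reason in excluded:
    print(title, "->", reason)
```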

Appraising

In the appraising process, the data set is transparently displayed with the associated authors and publications and evaluated. This appraisal focuses on the relevance of each study in terms of the research question and the quality of the research. There are numerous methods to assess the quality and relevance of the data set. The key is to assess each study in a process that demonstrates rigor and transparency. During the appraisal, final judgments are made that may eliminate studies from the data set because of low quality or a weak nexus to the research question and logic framework.
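The appraisal judgment described above, removing studies for low quality or a weak nexus to the research question, can be sketched as two thresholds. The 1-to-5 rating scale, the default thresholds of 3, and the study names are assumptions for illustration only; in practice the ratings would come from whichever documented appraisal method the reviewer has chosen.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Appraisal:
    study: str
    quality: int    # hypothetical 1-5 rating of methodological quality
    relevance: int  # hypothetical 1-5 rating of fit to the research question

def appraise(appraisals, min_quality=3, min_relevance=3):
    """Retain studies meeting both thresholds; log removals with reasons."""
    retained, removed = [], []
    for a in appraisals:
        reasons = []
        if a.quality < min_quality:
            reasons.append("low quality")
        if a.relevance < min_relevance:
            reasons.append("weak nexus to research question")
        if reasons:
            removed.append((a.study, "; ".join(reasons)))
        else:
            retained.append(a.study)
    return retained, removed

retained, removed = appraise([
    Appraisal("Study A", quality=4, relevance=5),
    Appraisal("Study E", quality=2, relevance=4),
    Appraisal("Study F", quality=4, relevance=1),
    Appraisal("Study G", quality=1, relevance=2),
])
print("retained:", retained)
print("removed:", removed)
```

Recording every reason, rather than only the first, keeps the removal log complete for readers who want to audit the appraisal.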

Aggregating

Aggregating is a tedious process that involves creating codes by extracting relevant text passages from each study. The author judges which passages are extracted and how the text is interpreted into codes. Software applications that are specifically designed to facilitate the coding analysis and synthesis processes in systematic reviews, such as EPPI-Reviewer 4, Covidence, DistillerSR, Rayyan QCRI, Sysrev, CReMs, and the System for the Unified Management, Assessment, and Review of Information (SUMARI), are commonly used. When the coding process is complete, the researcher analyzes the results to identify trends and patterns that become the raw data to address the research question. The raw data is then refined in a process of synthesis and translated into themes from which recommendations can be made.
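Coding itself is a human judgment, but once passages have been coded, a first pass at identifying patterns can be as simple as counting how many distinct studies support each theme. The sketch below assumes hypothetical `(study, code)` pairs and a researcher-defined mapping from codes to themes; it does not reproduce how any of the tools named above work.

```python
from collections import Counter, defaultdict

def theme_support(extracts, code_to_theme):
    """Count the distinct studies that support each theme.

    `extracts` holds (study, code) pairs from the coding step;
    `code_to_theme` is the researcher's mapping from codes to themes.
    """
    studies_by_theme = defaultdict(set)
    for study, code in extracts:
        studies_by_theme[code_to_theme[code]].add(study)
    return Counter({theme: len(studies) for theme, studies in studies_by_theme.items()})

# Hypothetical coded passages and code-to-theme mapping.
extracts = [
    ("Study A", "training gap"),
    ("Study A", "data access"),
    ("Study B", "training gap"),
    ("Study C", "training gap"),
    ("Study C", "leadership support"),
    ("Study D", "data access"),
]
code_to_theme = {
    "training gap": "education and training",
    "data access": "information infrastructure",
    "leadership support": "organizational culture",
}
support = theme_support(extracts, code_to_theme)
print(support.most_common())
```

Counting studies rather than passages keeps one heavily quoted study from dominating a theme; weighting by study quality would be a reasonable alternative that this sketch does not attempt.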

Applying

Next, recommendations are made from the themes. These recommendations are supported by the findings of the study. For example, the first article in this series found that integration of EBMgt and MJDM is feasible. In that article, recommendations were centered on developing education and training to create scholar-practitioner skills related to the evidence-based framework.22 These skills are critical to a successful integration of EBMgt and MJDM.

Assessing

Assessing involves developing performance metrics to measure the success or failure of the recommendations adopted. Assessment should be an ongoing process that results in system adaptations, which are ultimately identified by the first step in the evidence-based framework: asking. Because assessing leads back to asking, new studies may be justified.
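The six steps form a loop rather than a line, because assessing feeds back into asking. The sketch below models that loop and a simple assessment check. The step names come from the text; the `assess` function, its assumption that higher metric values are better, and the example metrics are hypothetical.

```python
from enum import Enum

class Step(Enum):
    ASK = 1
    ACQUIRE = 2
    APPRAISE = 3
    AGGREGATE = 4
    APPLY = 5
    ASSESS = 6

def next_step(step):
    """Return the step after `step`; ASSESS wraps around to ASK."""
    steps = list(Step)
    return steps[(steps.index(step) + 1) % len(steps)]

def assess(results):
    """Return metrics whose actual value fell short of its target.

    `results` maps a metric name to (actual, target); this sketch assumes
    higher values are better. Each shortfall is a candidate for a new
    question, which restarts the cycle at ASK.
    """
    return [name for name, (actual, target) in results.items() if actual < target]

# Hypothetical metrics for adopted recommendations.
shortfalls = assess({
    "planners trained in systematic review": (12, 10),
    "recommendations adopted": (2, 4),
})
if shortfalls:
    print("Return to", next_step(Step.ASSESS).name, "for:", shortfalls)
```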

Introduction to the Systematic Review

As explained by Guy Paré et al., a properly executed systematic review locates articles that represent powerful information sources for practitioners looking for state-of-the-art evidence to guide their decision-making and work practices.23 According to David Gough, the systematic review summarizes, critically reviews, analyzes, and synthesizes a group of related literature to identify gaps and create new knowledge.24 Systematic review methodologies are applied to searching for relevant literature, building a data set, appraising and coding the data, and analyzing and synthesizing the data to answer a specific research question. The portfolio of systematic review methodologies includes the critically appraised topic (CAT), rapid evidence assessment (REA), meta-analysis, literature review, and systematic review of literature. Each of these methods employs some variant of the following tasks:

- Clearly define the question.

- Determine the types of studies that need to be located.

- Carry out a comprehensive literature search.

- Screen the results of that search using predefined criteria.

- Critically appraise the included studies.

- Synthesize the studies and assess areas of homogeneity (a.k.a. coding for determination of “themes”).

- Compile results and publish.25
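Several of these tasks can be chained so that the review records how many studies survive each stage, similar in spirit to the flow diagram used in PRISMA reporting. In this sketch, records are hypothetical `(title, year, quality)` tuples, and the screening and appraisal predicates are supplied by the caller as stand-ins for the review's predefined criteria.

```python
def run_review(records, passes_screening, passes_appraisal):
    """Screen and appraise records, logging the count at each stage.

    `records` are hashable tuples; both predicates are caller-supplied
    stand-ins for the review's predefined criteria.
    """
    flow = {"records identified": len(records)}
    unique = list(dict.fromkeys(records))  # remove duplicates, keep order
    flow["after duplicates removed"] = len(unique)
    screened = [r for r in unique if passes_screening(r)]
    flow["excluded at screening"] = len(unique) - len(screened)
    included = [r for r in screened if passes_appraisal(r)]
    flow["excluded at appraisal"] = len(screened) - len(included)
    flow["included in synthesis"] = len(included)
    return included, flow

# Hypothetical search results: (title, year, quality rating 1-5).
results = [
    ("Study A", 2015, 4),
    ("Study B", 1995, 5),
    ("Study A", 2015, 4),  # duplicate returned by a second database
    ("Study C", 2019, 2),
    ("Study D", 2021, 4),
]
included, flow = run_review(
    results,
    passes_screening=lambda r: r[1] >= 2000,
    passes_appraisal=lambda r: r[2] >= 3,
)
for stage, count in flow.items():
    print(f"{stage}: {count}")
```

Publishing these counts alongside the criteria is what lets another reviewer reproduce the data set.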

The pursuit of rigor, transparency, validity, and reliability in EBMgt makes the systematic review the ideal tool for executing the evidence-based framework. There are numerous professional organizations as well as more than 90 degree-granting institutions of higher learning that offer education on systematic reviews as reported by the Center for Evidence Based Management.26 The following paragraphs describe the systematic review as defined by institutions and organizations that use them.

Temple University in Philadelphia, Pennsylvania, defines the systematic review as a review of the best available information translated into evidence to inform a clearly formulated question. The systematic review uses rigorous and transparent methods to identify, select, critically appraise, and analyze published research. This process creates the evidentiary data to answer a specific question.27

The Systematic Review Center for Laboratory Animal Experimentation (SYRCLE) in the Netherlands states that in the systematic review, information is identified, selected, appraised, and synthesized to enable evidence-based decisions.28

The University of Texas Health Science Center at Houston describes how a systematic review attempts to correlate all empirical evidence that fits prequalified eligibility criteria to answer a specific research question.29

The Uniformed Services University of the Health Sciences in Bethesda, Maryland, describes the systematic review as a summary that attempts to address a focal question using methods designed to reduce the likelihood of bias. In this view, the systematic review synthesizes the findings of original studies, clearly stating why the research is being done and which methods were used to find the primary studies.30

The Centre for Reviews and Dissemination at the University of York in the United Kingdom states that the systematic review uses objective and transparent methods to identify, evaluate, and summarize all relevant research findings to avoid bias. The approach and methods are set out in advance. When carried out successfully, a systematic review provides the most reliable evidence about the effects of tests, treatments, and other interventions.31

The Center for Evidence-Based Crime Policy at George Mason University in Fairfax, Virginia, states that the systematic review summarizes the best available evidence on a specific intervention or policy using transparent comprehensive search strategies, explicit criteria for including comparable studies, systematic coding and analysis, and quantitative methods for producing an overall indicator of effectiveness where appropriate.32

The Systematic Review Center at New York University refers to the systematic review as a review of a clearly formulated question that uses systematic and explicit methods to identify, select, and critically appraise relevant research, and to collect and analyze data from the studies that are included in the review.33

According to Georgetown University in Washington, DC, a systematic review is a research methodology designed to answer a focused research question. Authors conduct a methodical and comprehensive literature synthesis focused on a well-formulated research question. Its aim is to identify and synthesize all of the scholarly research on a particular topic, including both published and unpublished studies. Systematic reviews are conducted in an unbiased, reproducible way to provide evidence for practice and policy making and identify gaps in research. Every step of the review, including the search, must be documented for reproducibility.34

The Center for Evidence Based Management says that in contrast to a conventional literature review, a systematic review is transparent, verifiable, and reproducible, and, as a result, the likelihood of bias is considerably smaller. Many systematic reviews also include a meta-analysis, in which statistical analysis techniques are used to combine the results of individual studies to arrive at a more accurate estimate of effects. In some cases, systematic reviews are used not only to aggregate evidence relating to a specific topic but also to make clear what is not known, thereby directing new primary research into areas where there is a gap in the body of knowledge.35

These nine examples illustrate the common threads in the systematic review as framed by various institutions and organizations. These definitions also speak to the importance of systematic reviews in social science research. In the following paragraphs, each method in the portfolio of systematic reviews will be defined.

Types of Systematic Reviews

Rapid Evidence Assessment

An REA provides a balanced assessment of what is known (and not known) in the scientific literature about an intervention or problem. To be “rapid,” an REA makes concessions in relation to the breadth, depth, and comprehensiveness of the search. Both the REA and CAT incorporate and publish rigorous search methodologies and appraisals of quality. However, the REA is designed to focus on statistical results, and the quality appraisal is focused on methodological appropriateness.36

Critically Appraised Topic

The differences between the CAT and REA are extremely subtle, and no strict criteria determine when one tool is more advantageous than the other. Like the REA, a CAT is formatted for a quick and succinct assessment of literature. CAT questions focus on “how many,” “how often,” and “how to implement and functionality,” whereas the REA is more often used with statistical analysis or in qualitative studies.37

Meta-Analysis

A meta-analysis is a statistical analysis that combines the results of multiple scientific studies. Meta-analysis can be performed when there are multiple scientific studies addressing the same question, with each individual study reporting measurements that are expected to have some degree of error.38
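As an illustration of combining results, the sketch below uses the inverse-variance fixed-effect method, one common way to pool study estimates: each study i is weighted by 1 / SE_i^2, so studies with smaller measurement error count for more. This is an assumption for illustration; the text does not prescribe a method, and random-effects models are often preferred when studies differ substantially. The estimates and standard errors in the example are invented.

```python
import math

def fixed_effect_pool(estimates, std_errors):
    """Pool study estimates with inverse-variance (fixed-effect) weights.

    Returns the pooled estimate, its standard error, and an approximate
    95% confidence interval (pooled +/- 1.96 * SE).
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    if any(se <= 0 for se in std_errors):
        raise ValueError("standard errors must be positive")
    weights = [1.0 / se**2 for se in std_errors]        # w_i = 1 / SE_i^2
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)                   # SE of pooled estimate
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci

# Hypothetical effect sizes and standard errors from three studies.
pooled, se, ci = fixed_effect_pool([0.30, 0.50, 0.40], [0.10, 0.20, 0.10])
print(f"pooled = {pooled:.3f}, SE = {se:.3f}, 95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

The second study's larger standard error gives it one quarter the weight of each of the others, so the pooled estimate (about 0.367) falls below the unweighted mean of 0.400.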

Literature Review

A literature review provides summarized studies to reveal similar and diverse framings of opinion and theory related to a specific research question. A literature review also provides transparency to the author’s familiarization with seminal research related to a research question. The literature review offers rigor in that each study is systematically reviewed by the author. However, in a typical literature review, the search process is not published and there is no quality appraisal.39

Systematic Review of Literature

The systematic review of literature (SRL) is the seminal tool for integrating scholarly literature into the evidence-based framework. Although the focus is on scholarship, the SRL does not limit the search to specific databases or to a particular type of literature. In addition, the SRL allows the author to take the widest approach to critical appraisal, and the number of literature sources is not limited. The coding, analysis, and synthesis, as well as the critical appraisal, are both tedious and time-consuming. Although the SRL is less subject to the biases inherent in the CAT, REA, and literature review, it is not an ideal format for a rapid assessment.40

Theoretical and Conceptual Frameworks

A systematic review will often include both theoretical and conceptual frameworks. These frameworks are important because of the context they provide to a research effort. In some instances, a theoretical framework is treated as the mechanism or intervention that addresses a knowledge gap; in these cases, it will be identified within the logic framework constructs. The theoretical and conceptual frameworks offer two important perspectives on the research. The theoretical framework is linked to a well-established management theory that serves as the lens through which the author views the study. The conceptual framework explains what the research will seek to determine. It is typically accompanied by a diagram that depicts how the constructs of the research are networked to answer the research question.

As postulated by Norman G. Lederman and Judith S. Lederman, theoretical frameworks are critically important to quantitative, qualitative, or mixed methods research to “justify the importance and significance of the work.”41 In many journals, “the lack of a theoretical framework is the most frequently cited reason for [an] editorial decision not to publish a manuscript.”42 The theoretical framework provides a way for readers and researchers to gain an understanding of how the author sees the practical application of theory.

The Theoretical Framework

In the first article in this series, a CAT was conducted to demonstrate the feasibility of integrating EBMgt and MJDM.43 The theoretical lens used to reveal how the author viewed this integration was isomorphic properties. Isomorphic properties are defined as characteristics that are common to two or more separate sets. In addition, the sets face similar environmental conditions within their respective populations.44 In this view, EBMgt and MJDM share isomorphic properties. Regardless of the U.S. military Service component or process, all MJDM processes are used to inform decisions related to the disposition of resources. Therefore, all Service component MJDM methodologies are isomorphic. By the same logic, the study proposed that properties of the evidence-based framework are also used to inform decisions related to the disposition of resources and are thereby isomorphic with MJDM methodologies.

The Conceptual Framework

Conceptually, the isomorphic properties that link MJDM to EBMgt were used to demonstrate the feasibility of an integration of EBMgt and MJDM. Based on this concept, a single Service component planning methodology model can be used to explore the feasibility of such an integration for all Service components.45 The planning methodology used in the study presented in the first article in this series is the Commandant’s Planning Guidance: 38th Commandant of the Marine Corps.46 The integration concept is as follows: because the first article demonstrated that there is sufficient and relevant scholarship to inform U.S. Marine Corps planning, MJDM processes for all Service components will also be informed.

Section II: The Approach to Integrating MJDM and the Evidence-Based Framework

Because MJDM and the evidence-based framework are complex adaptive systems (CAS), the approach to this integration is to network the system elements of MJDM and the evidence-based framework into a single set. The system elements of the evidence-based framework include the scholar-practitioner, the commissioning organization, and the systematic review. MJDM is networked with decision makers, plans, orders, commanders and staff, and the Joint planning process. The 2021 presidential memorandum on restoring faith in government directs federal agencies to include science in both planning and decision-making.47 The DOD's planning and decision-making process is detailed in Joint Planning.48 Consistent with President Biden's directive, the integration proposed here preserves the methodologies of MJDM; the emerging set will result from extending the MJDM network to include the evidence-based framework.

Any organizational complex adaptive system (OCAS) will generate constraints that impede the organization from achieving its goals. Although constraints are impediments to achieving organizational goals, they also indicate where adaptation will occur within a complex system. In the first article in this series, the constraints impeding the integration of EBMgt and MJDM into a single set were the absence of doctrinal guidance and a belief that scholarship is not sufficiently available and relevant. The previous article demonstrated the availability and relevance of the scholarship required for such an integration but left the doctrinal constraint unaddressed.49 In the following paragraphs, this article presents guidance designed as an antecedent to the doctrine that will govern the final integration. Addressing doctrinal guidance will eliminate the remaining constraint impeding the integration of MJDM and the evidence-based framework into a single set.

Because this integration extends the elements of MJDM to incorporate the evidence-based framework, the approach will be to look for an integration in the existing Joint planning process doctrine specified in Joint Planning. The Joint planning process incorporates policies and procedures to facilitate responsive planning and foster a shared understanding through frequent dialogue between civilian and military leaders to provide viable military options to the U.S. president and secretary of defense. Continuous assessment and collaborative technology provide increased opportunities for consultation and updated guidance during the planning and execution processes.50

Operational Art

Operational art refers to the cognitive approach by commanders and staffs to develop strategies, campaigns, and operations to organize and employ military forces by integrating ends, ways, and means and evaluating risks. This approach is supported by the commanders and staffs’ skills, knowledge, experience, creativity, and judgment. Strategic art and operational art are mutually supporting. Strategic art provides policy context to objectives, while operational art demonstrates the feasibility and efficacy of a strategy. Operational planning translates strategy into executable activities, operations, and campaigns within resource and policy limitations to achieve objectives.51

Planning Products

According to Joint Planning, although campaign and contingency planning serve diametrically opposed purposes, there are nevertheless similarities between the two processes.52 The differences are reflected in the planning products each process produces. The key distinction is that campaign plans govern noncrisis situations that are generally not driven by external events, while contingency plans govern time-constrained scenarios influenced by external events. Campaign planning is ongoing and covers all current and near-term operations. In the event of a crisis, the combatant commander can initiate crisis planning. Crisis action teams translate elements of a contingency plan into direct action related to the crisis. In a persistent crisis, contingency plans become the dominant planning product.53

Planning Processes

Both campaign and contingency planning share four common levels of planning detail that build on one another in a specific sequence: the commander's estimate, the base plan (BPLAN), the concept plan (CONPLAN), and the operation plan (OPLAN). The BPLAN contains a commander's estimate detailing the concept of operations and is developed without the annexes found in the CONPLAN. The CONPLAN allows a combatant commander to communicate the concept of operations so that the staff can prepare estimates and transition it into an OPLAN.54 The OPLAN is a detailed plan identifying the resources required for a particular operation and how those resources will be allocated.

A CONPLAN is the antecedent of an OPLAN in that it contains a concept supported by the following annexes: task organization; intelligence; operations; logistics; command relationships; command, control, communications, and computer systems; special technical operations; interagency-interorganizational coordination; and distribution. Many CONPLANs are adapted to become fully developed OPLANs.55 Planning practitioners integrate strategic guidance, concept development, plan development, and plan assessment in both campaign and contingency planning.

Section III: Recommended Integration Areas for EBMgt

The Military Planning Scholar-Practitioner

The first article in this series demonstrated the feasibility of integrating EBMgt and MJDM. The recommendations from that article focused on developing scholar-practitioners knowledgeable in the application of the evidence-based framework and MJDM methodologies.56 Each U.S. military Service component trains and develops its own military planners in intermediate-level education (ILE) and war college programs. Military planners are assigned to combatant command staffs and other major command headquarters to execute the Joint planning process. Incorporating professional development and graduate-level courses on the evidence-based framework into ILE and war college programs, as recommended, will result in the emergence of the military planning scholar-practitioner. This scholar-practitioner will have the skill set to facilitate the integration of EBMgt and MJDM at multiple levels of command.

Integration Areas

This section describes the key areas where scholarship can be integrated into campaign and contingency planning: commander's critical information requirements (CCIRs), posture plans, flexible response options (FROs) and flexible deterrent options (FDOs), and staff estimates. All four areas are described in Joint Planning.57 Each was identified as a point where the systematic review can be incorporated to allow science to inform MJDM. Each area is explained in a separate subsection below.

Commander’s Critical Information Requirements

Commander's critical information requirements (CCIRs) are elements of discretionary information that commanders develop to inform timely decision-making.58 Commanders use CCIRs in diverse ways in both campaign and contingency planning. In contingency planning, CCIRs are dynamic and heavily situation-dependent. For example, CCIRs can focus on a combat operation, such as the location of enemy command and control centers, specific enemy units, or the securing of a specific objective. In campaign planning during normal operations, the CCIRs can be a standalone list of information requirements that are regularly reported to commanders at daily update briefings. The list may include casualties among off-duty personnel or media encounters with military personnel. In practice, CCIRs are commonly reported as they occur, and staff estimates are updated as appropriate.

In terms of integrating MJDM and the evidence-based framework, a CCIR can be used to identify potential research questions that inform staff estimates, posture plans, flexible response options, and flexible deterrent options. It is incumbent on the military planning scholar-practitioner to cross-reference potential research questions against the CCIRs to determine whether the science is capable of supplementing those information requirements. In some cases, these research questions need to be fully researched with a systematic review methodology and incorporated into the staff estimate. Where a CCIR is supplemented with a scientific study, it may become a standing CCIR for a different OPLAN. In those cases, the military planning scholar-practitioner will need to adapt the research questions and any subsequent research required.

Posture Plans

Posture plans are key elements of combatant command campaigns and strategies. They describe the forces, footprint, and agreements that a commander needs to successfully execute the campaign. Combatant commands prepare plans that outline their strategy and link national and theater objectives with the means to achieve them. These strategic objectives can include power projections in conjunction with economic factors as well as cultural considerations. In all of these instances, scholarship can help inform decision-making. The posture plan is the primary document used to advocate for changes to posture, to support resource decisions, and to identify departmental oversight responsibilities. As with the evidence-based framework, posture plans identify status and gaps, explore risks, and recommend required changes and proposed initiatives.59

Flexible Response and Deterrent Options

The basic purpose of FROs is to preempt and/or respond to attacks against the United States and/or U.S. interests worldwide. FROs are intended to facilitate early decision-making by developing a wide range of prospective actions carefully tailored to produce desired effects, congruent with national security policy objectives. A FRO communicates what means are available to the president and secretary of defense, with actions appropriate and adaptable to existing circumstances.60 FROs also provide the DOD with the planning framework needed to fast-track the requisite authorities and approvals to address dynamic and evolving threats.61

At the microeconomic level, for example, social science research informs decisions related to the ethical, legal, and social implications (ELSIs) that influence individual and organizational behaviors, whether those implications are civil or military. At the macroeconomic level, research informs understanding of how ELSIs influence nations, states, and economies. Research on the effects of exposure to violence, the limits of cognition under sleep deprivation, or the predictors of fight-or-flight responses provides inputs that inform individual behaviors. Research on predicting the bullwhip effect in supply chains, cultural impediments in group dynamics, or the impact of information technology on organizational efficiency provides inputs that inform organizational behaviors. Research on energy efficiency, production capacity, or political systems provides inputs that shape the strategic culture of nations and states. Insights gained from such inputs help combatant commanders and their staffs develop FROs with the aid of scientific research.

FDOs are preplanned, deterrence-oriented actions that are tailored to signal and influence an adversary's actions before or during a crisis.62 FDOs are developed for each instrument of national power (diplomatic, informational, military, and economic) but are most effective when combined across these instruments. FDOs facilitate early strategic decision-making, rapid de-escalation, and crisis resolution by laying out a wide range of interrelated response paths.

As mentioned above, studies in social science research speak to the ELSIs of microeconomic behaviors and shape strategic culture at the macroeconomic level. Whereas these insights are valuable in developing FROs, they offer even greater potential for developing FDOs where sweet (cultural) and sticky (economic) power projections are on the table with sharp (military) options.

Staff Estimates

A staff estimate is a continual "evaluation of how factors in a staff section's functional area support and impact [the planning and execution of] the mission."63 Staff estimates are critical to the development of and adaptations to the operational art of MJDM. As previously stated, the commander's estimate provides the strategic scope and intent of both current and future operations. Staff estimates assess and translate training, doctrine, and resources into inputs for course of action (COA) development. Each staff section prepares an estimate framed by a particular skill set related to human resources, information, operations, logistics, civil-military operations, or communications.64

The same taxonomy of microeconomic and macroeconomic research inputs that inform FROs and FDOs can inform staff estimates. Because staff estimates provide the essential evidence that informs COA development, incorporating science into each staff section's estimate ensures that scientific studies are considered in the final COA adopted. It will be incumbent on the military planning scholar-practitioner to develop research questions during mission analysis.

Discussion

Although this article does not present research from a systematic review, it furthers the understanding of how systematic review methodologies can be used to inform MJDM. Because the systematic review is a critical tool in conveying evidence in the evidence-based framework, furthering the understanding of the systematic review process was the impetus for this article. This discussion will continue in articles three and four in this series. The third article will explore the evaluation of evidence and make recommendations on how this could occur in an integration of MJDM and the evidence-based framework. The final article will explore the importance of building a data set and present database search methodologies that add rigor and transparency to database interrogation.

If the DOD is to make decisions with the best evidence available in accordance with current presidential directives, the systematic review is the logical tool for integrating the evidence-based framework into MJDM methodologies. Although the systematic review is an accepted methodology within academia, it is not well understood outside of academic settings. The systematic review creates evidence for decision-making, and that evidence is typically related to a specific problem area. Therefore, it is critical that the scholar-practitioner remain steadfast in upholding the integrity of the methodologies of any article presenting a systematic review, as each article is focused on the research itself.

Summary

The integration of MJDM and the evidence-based framework begins with a recognition of the evidence-based framework and how it is applied. Applying the evidence-based framework is contingent on a thorough knowledge of the continuously adapting dynamics of decision-making. As a former military planner, the author is aware that the DOD has a long history of developing MJDM practitioners, beginning with ILE and continuing through senior Service colleges. In these courses, military practitioners learn to apply decision-making methodologies specific to the DOD. However, these courses have not incorporated scholarly research or a methodology to integrate it into MJDM. A natural path to integrating MJDM and the evidence-based framework is to develop a military planning scholar-practitioner. The key to developing this scholar-practitioner is teaching the evidence-based framework with specific emphasis on systematic reviews. This should be done in the ILE and war college programs run by each U.S. military Service component.

Endnotes

- Joseph R. Biden Jr., “Memorandum on Restoring Trust in Government through Scientific Integrity and Evidence-Based Policymaking,” White House, 27 January 2021.

- Biden, “Memorandum on Restoring Trust in Government through Scientific Integrity and Evidence-Based Policymaking.”

- Foundations for Evidence-Based Policymaking Act of 2018, Pub. L. 115-435, 132 Stat. 5529 (2019).

- Eric Barends, Denise M. Rousseau, and Rob B. Briner, eds., CEBMa Guideline for Critically Appraised Topics in Management and Organizations (Amsterdam, Netherlands: Center for Evidence-Based Management, 2017), 3.

- Cynthia Grant and Azadeh Osanloo, “Understanding, Selecting, and Integrating a Theoretical Framework in Dissertation Research: Creating the Blueprint for Your ‘House’,” Administrative Issues Journal: Connecting Education, Practice, and Research 4, no. 2 (2014): 12–26, https://doi.org/10.5929/2014.4.2.9.

- Eric Barends, Denise M. Rousseau, and Rob B. Briner, Evidence-Based Management: The Basic Principles (Amsterdam, Netherlands: Center for Evidence-Based Management, 2014).

- Barends, Rousseau, and Briner, Evidence-Based Management, 5.

- Margaret J. Foster and Sarah T. Jewell, eds., Assembling the Pieces of a Systematic Review: A Guide for Librarians (Lanham, MD: Rowman & Littlefield, 2017), 38.

- Harold E. Briggs and Bowen McBeath, "Evidence-Based Management: Origins, Challenges, and Implications for Social Service Administration," Administration in Social Work 33, no. 3 (2009): 245–48, https://doi.org/10.1080/03643100902987556.

- Grant and Osanloo, “Understanding, Selecting, and Integrating a Theoretical Framework in Dissertation Research,” 13.

- “Systematic Reviews & Other Review Types,” Temple University Libraries, accessed 14 October 2021.

- Grant and Osanloo, “Understanding, Selecting, and Integrating a Theoretical Framework in Dissertation Research,” 16–17.

- David E. McCullin, “The Impact of Organizational Complex Adaptive System Constraints on Strategy Selection: A Systematic Review of the Literature” (DM diss., University of Maryland Global Campus, 2020).

- David E. McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic,” Expeditions with MCUP (2021): https://doi.org/10.36304/ExpwMCUP.2021.04.

- Biden, “Memorandum on Restoring Trust in Government through Scientific Integrity and Evidence-Based Policymaking.”

- Joint Planning, Joint Publication 5-0 (Washington, DC: Joint Chiefs of Staff, 2017).

- Rob B. Briner, David Denyer, and Denise M. Rousseau, "Evidence-Based Management: Concept Cleanup Time?," Academy of Management Perspectives 23, no. 4 (November 2009): 19–32, https://doi.org/10.5465/amp.23.4.19.

- Andrew H. Van de Ven and Paul E. Johnson, “Knowledge for Theory and Practice,” Academy of Management Review 31, no. 4 (October 2006): 802–21, https://doi.org/10.2307/20159252.

- McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic.”

- Barends, Rousseau, and Briner, Evidence-Based Management, 12–14.

- Barends, Rousseau, and Briner, Evidence-Based Management, 4.

- McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic.”

- Guy Paré et al., "Synthesizing Information Systems Knowledge: A Typology of Literature Reviews," Information & Management 52, no. 2 (March 2015): 183–99, https://doi.org/10.1016/j.im.2014.08.008.

- David Gough, “Weight of Evidence: A Framework for the Appraisal of the Quality and Relevance of Evidence,” Research Papers in Education 22, no. 2 (2007): 218–28, https://doi.org/10.1080/02671520701296189.

- Mark Petticrew and Helen Roberts, Systematic Reviews in the Social Sciences: A Practical Guide (Oxford, UK: Blackwell Publishing, 2006).

- “Universities and Business Schools that Offer Courses Where Elements of EMB Are Taught,” Center for Evidence Based Management, accessed 14 October 2021.

- “Systematic Reviews & Other Review Types.”

- “Systematic Review Centre for Laboratory Animal Experimentation (SYRCLE),” Norecopa, accessed 14 October 2021.

- “Public Health: Systematic Reviews,” University of Texas Libraries, accessed 14 October 2021.

- “Systematic Reviews: Home,” Uniformed Services University, accessed 14 October 2021.

- “Centre for Reviews and Dissemination,” University of York, accessed 14 October 2021.

- “Systematic Reviews,” Center for Evidence-Based Crime Policy, George Mason University, accessed 14 October 2021.

- “Systematic Reviews,” New York University Health Sciences Library, accessed 14 October 2021.

- “Systematic Reviews,” Dahlgren Memorial Library, Georgetown University, accessed 14 October 2021.

- “What Is a Systematic Review?,” Center for Evidence Based Management, accessed 14 October 2021.

- Barends, Rousseau, and Briner, CEBMa Guideline for Critically Appraised Topics in Management and Organizations, 3.

- Barends, Rousseau, and Briner, CEBMa Guideline for Critically Appraised Topics in Management and Organizations, 3.

- Petticrew and Roberts, Systematic Reviews in the Social Sciences, 19.

- David N. Boote and Penny Beile, “Scholars before Researchers: On the Centrality of the Dissertation Literature Review in Research Preparation,” Educational Researcher 34, no. 6 (2005): 3–15, https://doi.org/10.3102/0013189X034006003.

- Barends, Rousseau, and Briner, CEBMa Guideline for Critically Appraised Topics in Management and Organizations, 3.

- Norman G. Lederman and Judith S. Lederman, “What Is a Theoretical Framework?: A Practical Answer,” Journal of Science Teacher Education 26 (2015): 597, https://doi.org/10.1007/s10972-015-9443-2.

- Lederman and Lederman, “What Is a Theoretical Framework?,” 593.

- McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic.”

- Paul J. DiMaggio and Walter W. Powell, “The Iron Cage Revisited: Institutional Isomorphism and Collective Rationality in Organizational Fields,” American Sociological Review 48, no. 2 (April 1983): 147–60, https://doi.org/10.2307/2095101.

- For a greater explanation of the term properties, see McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic.”

- Gen David H. Berger, Commandant’s Planning Guidance: 38th Commandant of the Marine Corps (Washington, DC: Headquarters Marine Corps, 2019).

- Biden, “Memorandum on Restoring Trust in Government through Scientific Integrity and Evidence-Based Policymaking.”

- Joint Planning, I-1-5.

- McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic.”

- Joint Planning, II-12.

- For more on operational art, see Joint Operations, JP 3-0 (Washington, DC: Joint Chiefs of Staff, 2018), II-3.

- Joint Planning, VIII-7.

- Joint Planning, VII-6.

- Joint Planning, III-40.

- Joint Planning, VIII-1.

- McCullin, “Exploring Evidence-Based Management in Military Planning Processes as a Critically Appraised Topic.”

- Joint Planning, GL 4-8.

- For more information on CCIRs, see Commander’s Critical Informational Requirements (CCIRs) Insights and Best Practices Focus Paper, 4th ed. (Suffolk, VA: Joint Staff J7, 2020).

- Joint Planning, G-1.

- Joint Planning, E-4.

- Joint Planning, E-6.

- Joint Planning, III-27.

- Joint Planning, III-27.

- Joint Planning, III-27.